15 Hypothesis Examples

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.

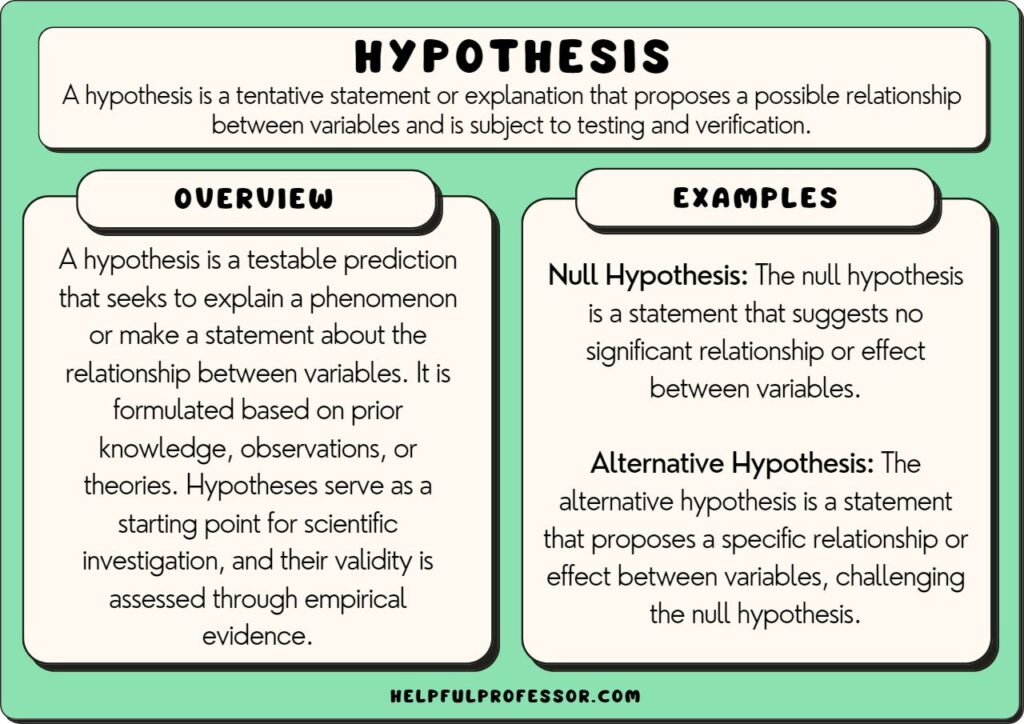

A hypothesis is defined as a testable prediction. It is used primarily in scientific experiments as a potential or predicted outcome that scientists attempt to support or refute (Atkinson et al., 2021; Tan, 2022).

In my types of hypothesis article, I outlined 13 different hypotheses, including the directional hypothesis (which predicts whether the effect of a treatment will be positive or negative) and the associative hypothesis (which makes a prediction about the association between two variables).

This article will dive into some interesting examples of hypotheses and examine potential ways you might test each one.

Hypothesis Examples

1. “Inadequate Sleep Decreases Memory Retention”

Field: Psychology

Type: Causal Hypothesis

A causal hypothesis explores the effect of one variable on another. This example posits that a lack of adequate sleep causes decreased memory retention. In other words, if you are not getting enough sleep, your ability to remember and recall information may suffer.

How to Test:

To test this hypothesis, you might devise an experiment whereby your participants are divided into two groups: one receives an average of 8 hours of sleep per night for a week, while the other gets less than the recommended sleep amount.

During this time, all participants would study and recall new, specific information each day. You’d then measure memory retention of this information for both groups using standard memory tests and compare the results.

Should the group with less sleep have significantly poorer memory scores (in the statistical sense), the hypothesis would be supported.

Ensuring the integrity of the experiment requires taking into account factors such as individual health differences, stress levels, and daily nutrition.
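The two-group comparison described above can be sketched in code. The following is a minimal illustration, assuming a one-sided permutation test is an acceptable way to compare the groups; the function name `permutation_test` and all scores are hypothetical and invented purely for demonstration, not taken from any study.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """One-sided permutation test: is mean(group_a) greater than mean(group_b)?

    Returns the observed difference in means and an approximate p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_resamples):
        # Randomly reassign scores to the two groups.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate away from exactly zero.
    p_value = (at_least_as_extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical memory-test scores (0-100), invented for illustration only.
rested = [82, 79, 88, 91, 76, 85, 80, 87]
sleep_deprived = [70, 74, 68, 77, 65, 72, 69, 75]

diff, p = permutation_test(rested, sleep_deprived)
print(f"difference in means: {diff:.2f}, p = {p:.4f}")
```

A small p-value here would count as support for the hypothesis, not proof of it; a real study would also need the controls mentioned above.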

Relevant Study: Sleep loss, learning capacity and academic performance (Curcio, Ferrara & De Gennaro, 2006)

2. “Increase in Temperature Leads to Increase in Kinetic Energy”

Field: Physics

Type: Deductive Hypothesis

The deductive hypothesis applies the logic of deductive reasoning – it moves from a general premise to a more specific conclusion. This specific hypothesis assumes that as temperature increases, the kinetic energy of particles also increases – that is, when you heat something up, its particles move around more rapidly.

This hypothesis could be examined by heating a gas in a controlled environment and capturing the movement of its particles as a function of temperature.

You’d gradually increase the temperature and measure the kinetic energy of the gas particles with each increment. If the kinetic energy consistently rises with the temperature, your hypothesis gets supporting evidence.

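The amount of gas and the volume of its container would need to be held constant to ensure the validity of the results. Note that pressure cannot also be held fixed in a sealed container, because it rises with temperature.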
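To make the predicted relationship concrete, here is a small sketch that assumes the gas behaves as an ideal monatomic gas, for which the average translational kinetic energy per particle is (3/2)·k·T. That model is an assumption added for illustration (the text above does not specify one), and the temperature steps are hypothetical.

```python
# Boltzmann constant in joules per kelvin (exact value in the SI system).
BOLTZMANN = 1.380649e-23


def mean_kinetic_energy(temperature_kelvin):
    """Average translational kinetic energy per particle, ideal-gas assumption."""
    return 1.5 * BOLTZMANN * temperature_kelvin


def rises_consistently(values):
    """True if every value is strictly greater than the one before it."""
    return all(later > earlier for earlier, later in zip(values, values[1:]))


# Hypothetical temperature increments for the experiment, in kelvin.
temperatures = [280, 300, 320, 340, 360]
energies = [mean_kinetic_energy(t) for t in temperatures]

for t, e in zip(temperatures, energies):
    print(f"{t} K -> {e:.3e} J")
print("consistent rise:", rises_consistently(energies))
```

In a real experiment the energies would be measured rather than computed, and `rises_consistently` would be applied to the measurements.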

3. “Children Raised in Bilingual Homes Develop Better Cognitive Skills”

Field: Psychology/Linguistics

Type: Comparative Hypothesis

The comparative hypothesis posits a difference between two or more groups based on certain variables. In this context, you might propose that children raised in bilingual homes have superior cognitive skills compared to those raised in monolingual homes.

Testing this hypothesis could involve identifying two groups of children: those raised in bilingual homes, and those raised in monolingual homes.

Cognitive skills in both groups would be evaluated using a standard cognitive ability test at different stages of development. The examination would be repeated over a significant time period for consistency.

If the group raised in bilingual homes persistently scores higher than the other, the hypothesis would thereby be supported.

The challenge for the researcher would be controlling for other variables that could impact cognitive development, such as socio-economic status, education level of parents, and parenting styles.

Relevant Study: The cognitive benefits of being bilingual (Marian & Shook, 2012)

4. “High-Fiber Diet Leads to Lower Incidences of Cardiovascular Diseases”

Field: Medicine/Nutrition

Type: Alternative Hypothesis

The alternative hypothesis suggests an alternative to a null hypothesis. In this context, the implied null hypothesis could be that diet has no effect on cardiovascular health, which the alternative hypothesis contradicts by suggesting that a high-fiber diet leads to fewer instances of cardiovascular diseases.

To test this hypothesis, a longitudinal study could be conducted on two groups of participants; one adheres to a high-fiber diet, while the other follows a diet low in fiber.

After a fixed period, the cardiovascular health of participants in both groups could be analyzed and compared. If the group following a high-fiber diet has a lower number of recorded cases of cardiovascular diseases, it would provide evidence supporting the hypothesis.

Control measures should be implemented to exclude the influence of other lifestyle and genetic factors that contribute to cardiovascular health.
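One way to compare the number of recorded cases in the two groups is a two-proportion z-test. The sketch below assumes large, independent groups so that the normal approximation is reasonable; the function name and the case counts are hypothetical and exist only to show the arithmetic.

```python
from math import erfc, sqrt


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns the z statistic and the two-sided p-value.
    """
    p_a = events_a / n_a
    p_b = events_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (events_a + events_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided tail probability of the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical counts: cardiovascular cases out of 500 participants per group.
z, p = two_proportion_z_test(events_a=30, n_a=500, events_b=55, n_b=500)
print(f"z = {z:.3f}, p = {p:.4f}")
```

A negative z here means the first (high-fiber) group had the lower case rate.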

Relevant Study: Dietary fiber, inflammation, and cardiovascular disease (King, 2005)

5. “Gravity Influences the Directional Growth of Plants”

Field: Agronomy / Botany

Type: Explanatory Hypothesis

An explanatory hypothesis attempts to explain a phenomenon. In this case, the hypothesis proposes that gravity affects how plants direct their growth – both above-ground (toward sunlight) and below-ground (toward water and other resources).

The testing could be conducted by growing plants in a rotating cylinder to create artificial gravity.

Observations on the direction of growth, over a specified period, can provide insights into the influencing factors. If plants consistently direct their growth in a manner that indicates the influence of gravitational pull, the hypothesis is substantiated.

It is crucial to ensure that other growth-influencing factors, such as light and water, are uniformly distributed so that only gravity influences the directional growth.

6. “The Implementation of Gamified Learning Improves Students’ Motivation”

Field: Education

Type: Relational Hypothesis

The relational hypothesis describes the relation between two variables. Here, the hypothesis is that the implementation of gamified learning has a positive effect on the motivation of students.

To validate this proposition, two sets of classes could be compared: one that implements a learning approach with game-based elements, and another that follows a traditional learning approach.

The students’ motivation levels could be gauged by monitoring their engagement, performance, and feedback over a considerable timeframe.

If the students engaged in the gamified learning context present higher levels of motivation and achievement, the hypothesis would be supported.

Control measures ought to be put into place to account for individual differences, including prior knowledge and attitudes towards learning.

Relevant Study: Does educational gamification improve students’ motivation? (Chapman & Rich, 2018)

7. “Mathematics Anxiety Negatively Affects Performance”

Field: Educational Psychology

Type: Research Hypothesis

The research hypothesis involves making a prediction that will be tested. In this case, the hypothesis proposes that a student’s anxiety about math can negatively influence their performance in math-related tasks.

To assess this hypothesis, researchers must first measure the mathematics anxiety levels of a sample of students using a validated instrument, such as the Mathematics Anxiety Rating Scale.

Then, the students’ performance in mathematics would be evaluated through standard testing. If there’s a negative correlation between the levels of math anxiety and math performance (meaning as anxiety increases, performance decreases), the hypothesis would be supported.

It would be crucial to control for relevant factors such as overall academic performance and previous mathematical achievement.
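The negative correlation described above can be checked with a Pearson correlation coefficient. This is a minimal sketch using only the standard library; the anxiety ratings and test scores are hypothetical values invented for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical data: anxiety rating and math test score for eight students.
anxiety = [20, 35, 40, 55, 60, 72, 80, 90]
math_score = [92, 85, 88, 70, 74, 61, 58, 50]

r = pearson_r(anxiety, math_score)
print(f"r = {r:.3f}")
```

A value of r close to -1 indicates that higher anxiety goes with lower scores. It does not by itself show that anxiety causes the lower scores, which is why the controls above matter.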

8. “Disruption of Natural Sleep Cycle Impairs Worker Productivity”

Field: Organizational Psychology

Type: Operational Hypothesis

The operational hypothesis involves defining the variables in measurable terms. In this example, the hypothesis posits that disrupting the natural sleep cycle, for instance through shift work or irregular working hours, can lessen productivity among workers.

To test this hypothesis, you could collect data from workers who maintain regular working hours and those with irregular schedules.

Measuring productivity could involve examining the worker’s ability to complete tasks, the quality of their work, and their efficiency.

If workers with interrupted sleep cycles demonstrate lower productivity compared to those with regular sleep patterns, it would lend support to the hypothesis.

Consideration should be given to potential confounding variables such as job type, worker age, and overall health.

9. “Regular Physical Activity Reduces the Risk of Depression”

Field: Health Psychology

Type: Predictive Hypothesis

A predictive hypothesis involves making a prediction about the outcome of a study based on the observed relationship between variables. In this case, it is hypothesized that individuals who engage in regular physical activity are less likely to suffer from depression.

A longitudinal study would suit this hypothesis, tracking participants’ levels of physical activity and their mental health status over time.

The level of physical activity could be self-reported or monitored, while mental health status could be assessed using standard diagnostic tools or surveys.

If data analysis shows that participants maintaining regular physical activity have a lower incidence of depression, this would support the hypothesis.

However, care should be taken to control for other lifestyle and behavioral factors that could interfere with the results.

Relevant Study: Regular physical exercise and its association with depression (Kim, 2022)

10. “Regular Meditation Enhances Emotional Stability”

Type: Empirical Hypothesis

In the empirical hypothesis, predictions are based on amassed empirical evidence. This particular hypothesis theorizes that frequent meditation leads to improved emotional stability, resonating with numerous studies linking meditation to a variety of psychological benefits.

Earlier studies reported some correlations, but to test this hypothesis directly, you’d organize an experiment where one group meditates regularly over a set period while a control group doesn’t.

Both groups’ emotional stability levels would be measured at the start and end of the experiment using a validated emotional stability assessment.

If regular meditators display noticeable improvements in emotional stability compared to the control group, the hypothesis gains credit.

You’d have to ensure a similar emotional baseline for all participants at the start to avoid skewed results.

11. “Children Exposed to Reading at an Early Age Show Superior Academic Progress”

Type: Directional Hypothesis

The directional hypothesis predicts the direction of an expected relationship between variables. Here, the hypothesis anticipates that early exposure to reading positively affects a child’s academic advancement.

A longitudinal study tracking children’s reading habits from an early age and their consequent academic performance could validate this hypothesis.

Parents could report their children’s exposure to reading at home, while standardized school exam results would provide a measure of academic achievement.

If the children exposed to early reading consistently perform better academically, it gives weight to the hypothesis.

However, it would be important to control for variables that might impact academic performance, such as socioeconomic background, parental education level, and school quality.

12. “Adopting Energy-efficient Technologies Reduces Carbon Footprint of Industries”

Field: Environmental Science

Type: Descriptive Hypothesis

A descriptive hypothesis predicts the existence of an association or pattern related to variables. In this scenario, the hypothesis suggests that industries adopting energy-efficient technologies will consequently show a reduced carbon footprint.

Industries that adopt energy-efficient technologies could track their carbon emissions over time, while comparable industries that do not adopt such technologies track their emissions in the same way.

After a defined time, the carbon emission data of both groups could be compared. If industries that adopted energy-efficient technologies demonstrate a notable reduction in their carbon footprints, the hypothesis would be supported.

In the study, you would need to control for variation caused by factors such as industry type, size, and location.

13. “Reduced Screen Time Improves Sleep Quality”

Type: Simple Hypothesis

The simple hypothesis is a prediction about the relationship between two variables, excluding any other variables from consideration. This example posits that by reducing time spent on devices like smartphones and computers, an individual should experience improved sleep quality.

A sample group would need to reduce their daily screen time for a pre-determined period. Sleep quality before and after the reduction could be measured using self-report sleep diaries and objective measures like actigraphy, monitoring movement and wakefulness during sleep.

If the data shows that sleep quality improved after the screen time reduction, the hypothesis would be supported.

Other aspects affecting sleep quality, like caffeine intake, should be controlled during the experiment.
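Because the same participants are measured before and after the reduction, the comparison is a paired one. The sketch below uses an exact sign-flip (paired permutation) test, which is one reasonable choice among several and is an assumption of this example; the function name and the sleep-quality ratings are hypothetical.

```python
from itertools import product


def paired_sign_flip_test(before, after):
    """Exact one-sided sign-flip test for a mean improvement in paired data.

    Returns the observed mean change and the exact p-value.
    """
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    observed = sum(diffs) / n
    total = 0
    at_least_as_extreme = 0
    # Under the null hypothesis, each difference is equally likely to be
    # positive or negative, so enumerate every possible sign assignment.
    for signs in product((1, -1), repeat=n):
        flipped_mean = sum(s * d for s, d in zip(signs, diffs)) / n
        total += 1
        if flipped_mean >= observed:
            at_least_as_extreme += 1
    return observed, at_least_as_extreme / total


# Hypothetical sleep-quality ratings (1-10) for eight participants.
before = [5, 6, 4, 7, 5, 6, 5, 4]
after = [7, 7, 6, 8, 6, 8, 6, 6]

change, p = paired_sign_flip_test(before, after)
print(f"mean change: {change}, p = {p}")
```

Enumerating every sign assignment is only practical for small samples; with n participants there are 2**n assignments to check.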

Relevant Study: Screen time use impacts low‐income preschool children’s sleep quality, tiredness, and ability to fall asleep (Waller et al., 2021)

14. “Engaging in Brain-Training Games Improves Cognitive Functioning in Elderly”

Field: Gerontology

Type: Inductive Hypothesis

Inductive hypotheses are based on observations leading to broader generalizations and theories. In this context, the hypothesis infers from observed instances that engaging in brain-training games can help improve cognitive functioning in the elderly.

A longitudinal study could be conducted where an experimental group of elderly people partakes in regular brain-training games.

Their cognitive functioning could be assessed at the start of the study and at regular intervals using standard neuropsychological tests.

If the group engaging in brain-training games shows better cognitive functioning scores over time compared to a control group not playing these games, the hypothesis would be supported.

15. “Farming Practices Have No Effect on Soil Erosion Rates”

Type: Null Hypothesis

A null hypothesis is a negative statement assuming no relationship or difference between variables. The hypothesis in this context asserts there’s no effect of different farming practices on the rates of soil erosion.

Comparing soil erosion rates in areas with different farming practices over a considerable timeframe could help test this hypothesis.

If, statistically, the farming practices do not lead to differences in soil erosion rates, we fail to reject the null hypothesis.

However, if marked variation appears, the null hypothesis is rejected, meaning farming practices do influence soil erosion rates. It would be crucial to control for external factors like weather, soil type, and natural vegetation.

The variety of hypotheses mentioned above underscores the diversity of research constructs inherent in different fields, each with its unique purpose and way of testing.

While researchers may develop hypotheses primarily as tools to define and narrow the focus of the study, these hypotheses also serve as valuable guiding forces for the data collection and analysis procedures, making the research process more efficient and direction-focused.

Hypotheses serve as a compass for any form of academic research. The diverse examples provided, from Psychology to Educational Studies, Environmental Science to Gerontology, clearly demonstrate how certain hypotheses suit specific fields more aptly than others.

It is important to underline that although these varied hypotheses differ in their structure and methods of testing, each endorses the fundamental value of empiricism in research. Evidence-based decision making remains at the heart of scholarly inquiry, regardless of the research field, thus aligning all hypotheses to the core purpose of scientific investigation.

Testing hypotheses is an essential part of the scientific method. By doing so, researchers can either confirm their predictions, giving further validity to an existing theory, or they might uncover new insights that could potentially shift the field’s understanding of a particular phenomenon. In either case, hypotheses serve as the stepping stones for scientific exploration and discovery.

References

Atkinson, P., Delamont, S., Cernat, A., Sakshaug, J. W., & Williams, R. A. (2021). SAGE research methods foundations. SAGE Publications Ltd.

Curcio, G., Ferrara, M., & De Gennaro, L. (2006). Sleep loss, learning capacity and academic performance. Sleep medicine reviews, 10(5), 323-337.

Kim, J. H. (2022). Regular physical exercise and its association with depression: A population-based study short title: Exercise and depression. Psychiatry Research, 309, 114406.

King, D. E. (2005). Dietary fiber, inflammation, and cardiovascular disease. Molecular nutrition & food research, 49(6), 594-600.

Marian, V., & Shook, A. (2012, September). The cognitive benefits of being bilingual. In Cerebrum: the Dana forum on brain science (Vol. 2012). Dana Foundation.

Tan, W. C. K. (2022). Research Methods: A Practical Guide For Students And Researchers (Second Edition). World Scientific Publishing Company.

Waller, N. A., Zhang, N., Cocci, A. H., D’Agostino, C., Wesolek‐Greenson, S., Wheelock, K., … & Resnicow, K. (2021). Screen time use impacts low‐income preschool children’s sleep quality, tiredness, and ability to fall asleep. Child: care, health and development, 47(5), 618-626.

What is a Research Hypothesis: How to Write it, Types, and Examples

Any research begins with a research question and a research hypothesis. A research question alone may not suffice to design the experiment(s) needed to answer it. A hypothesis is central to the scientific method. But what is a hypothesis? A hypothesis is a testable statement that proposes a possible explanation to a phenomenon, and it may include a prediction. Next, you may ask what is a research hypothesis? Simply put, a research hypothesis is a prediction or educated guess about the relationship between the variables that you want to investigate.

It is important to be thorough when developing your research hypothesis. Shortcomings in the framing of a hypothesis can affect the study design and the results. A better understanding of the research hypothesis definition and characteristics of a good hypothesis will make it easier for you to develop your own hypothesis for your research. Let’s dive in to know more about the types of research hypothesis, how to write a research hypothesis, and some research hypothesis examples.

What is a hypothesis?

A hypothesis is based on the existing body of knowledge in a study area. Framed before the data are collected, a hypothesis states the tentative relationship between independent and dependent variables, along with a prediction of the outcome.

What is a research hypothesis?

Young researchers starting out their journey are usually brimming with questions like “What is a hypothesis?” “What is a research hypothesis?” “How can I write a good research hypothesis?”

A research hypothesis is a statement that proposes a possible explanation for an observable phenomenon or pattern. It guides the direction of a study and predicts the outcome of the investigation. A research hypothesis is testable, i.e., it can be supported or disproven through experimentation or observation.

Characteristics of a good hypothesis

Here are the characteristics of a good hypothesis:

- Clearly formulated and free of language errors and ambiguity

- Concise and not unnecessarily verbose

- Has clearly defined variables

- Testable and stated in a way that allows for it to be disproven

- Can be tested using a research design that is feasible, ethical, and practical

- Specific and relevant to the research problem

- Rooted in a thorough literature search

- Can generate new knowledge or understanding.

How to create an effective research hypothesis

A study begins with the formulation of a research question. A researcher then performs background research. This background information forms the basis for building a good research hypothesis. The researcher then performs experiments, collects and analyzes the data, interprets the findings, and ultimately determines if the findings support or negate the original hypothesis.

Let’s look at each step for creating an effective, testable, and good research hypothesis:

- Identify a research problem or question: Start by identifying a specific research problem.

- Review the literature: Conduct an in-depth review of the existing literature related to the research problem to grasp the current knowledge and gaps in the field.

- Formulate a clear and testable hypothesis: Based on the research question, use existing knowledge to form a clear and testable hypothesis. The hypothesis should state a predicted relationship between two or more variables that can be measured and manipulated. Refine the draft until it is clear and meaningful.

- State the null hypothesis: The null hypothesis is a statement that there is no relationship between the variables you are studying.

- Define the population and sample: Clearly define the population you are studying and the sample you will be using for your research.

- Select appropriate methods for testing the hypothesis: Select appropriate research methods, such as experiments, surveys, or observational studies, which will allow you to test your research hypothesis.

Remember that creating a research hypothesis is an iterative process, i.e., you might have to revise it based on the data you collect. You may need to test and reject several hypotheses before answering the research problem.

How to write a research hypothesis

When you start writing a research hypothesis, you can use an “if–then” statement format, which states the predicted relationship between two or more variables. Clearly identify the independent variables (the variables being changed) and the dependent variables (the variables being measured), as well as the population you are studying. Review and revise your hypothesis as needed.

An example of a research hypothesis in this format is as follows:

“If [athletes] take [daily cold water showers], then their [endurance] increases.”

Population: athletes

Independent variable: daily cold water showers

Dependent variable: endurance
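The three parts of the “if–then” format can also be captured as a small data structure, which makes it harder to leave one of them undefined. This is only an illustrative sketch; the class name `Hypothesis` and its field names are hypothetical and not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """An if-then research hypothesis with its parts named explicitly."""

    population: str            # who or what is being studied
    intervention: str          # the independent variable, as a verb phrase
    dependent_variable: str    # what is measured
    predicted_change: str      # the expected direction of change

    def statement(self) -> str:
        return (
            f"If {self.population} {self.intervention}, "
            f"then their {self.dependent_variable} {self.predicted_change}."
        )


example = Hypothesis(
    population="athletes",
    intervention="take daily cold water showers",
    dependent_variable="endurance",
    predicted_change="increases",
)
print(example.statement())
```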

You may have understood the characteristics of a good hypothesis. But note that a research hypothesis is not always confirmed; a researcher should be prepared to accept or reject the hypothesis based on the study findings.

Research hypothesis checklist

Following from above, here is a 10-point checklist for a good research hypothesis:

- Testable: A research hypothesis should be able to be tested via experimentation or observation.

- Specific: A research hypothesis should clearly state the relationship between the variables being studied.

- Based on prior research: A research hypothesis should be based on existing knowledge and previous research in the field.

- Falsifiable: A research hypothesis should be able to be disproven through testing.

- Clear and concise: A research hypothesis should be stated in a clear and concise manner.

- Logical: A research hypothesis should be logical and consistent with current understanding of the subject.

- Relevant: A research hypothesis should be relevant to the research question and objectives.

- Feasible: A research hypothesis should be feasible to test within the scope of the study.

- Reflects the population: A research hypothesis should consider the population or sample being studied.

- Uncomplicated: A good research hypothesis is written in a way that is easy for the target audience to understand.

By following this research hypothesis checklist, you will be able to create a research hypothesis that is strong, well-constructed, and more likely to yield meaningful results.

Types of research hypothesis

Different types of research hypothesis are used in scientific research:

1. Null hypothesis:

A null hypothesis states that there is no change in the dependent variable due to changes to the independent variable. This means that any observed differences are attributed to chance and are not significant. A null hypothesis is denoted as H0 and is stated as the opposite of what the alternative hypothesis states.

Example: “The newly identified virus is not zoonotic.”

2. Alternative hypothesis:

This states that there is a significant difference or relationship between the variables being studied. It is denoted as H1 or Ha and is supported when the null hypothesis is rejected.

Example: “The newly identified virus is zoonotic.”

3. Directional hypothesis:

This specifies the direction of the relationship or difference between variables; therefore, it tends to use terms like increase, decrease, positive, negative, more, or less.

Example: “The inclusion of intervention X decreases infant mortality compared to the original treatment.”

4. Non-directional hypothesis:

A non-directional hypothesis states that a relationship or difference exists between variables but does not predict its direction, nature, or magnitude. A non-directional hypothesis may be used when there is no underlying theory or when findings contradict previous research.

Example: “Cats and dogs differ in the amount of affection they express.”

5. Simple hypothesis:

A simple hypothesis predicts the relationship between only one independent variable and one dependent variable.

Example: “Applying sunscreen every day slows skin aging.”

6. Complex hypothesis:

A complex hypothesis states the relationship or difference between two or more independent and dependent variables.

Example: “Applying sunscreen every day slows skin aging, reduces sun burn, and reduces the chances of skin cancer.” (Here, the three dependent variables are slowing skin aging, reducing sun burn, and reducing the chances of skin cancer.)

7. Associative hypothesis:

An associative hypothesis states that a change in one variable is accompanied by a change in the other variable, without claiming that one causes the other. The associative hypothesis defines interdependency between variables.

Example: “There is a positive association between physical activity levels and overall health.”

8. Causal hypothesis:

A causal hypothesis proposes a cause-and-effect interaction between variables.

Example: “Long-term alcohol use causes liver damage.”

Note that some of the types of research hypothesis mentioned above might overlap. The types of hypothesis chosen will depend on the research question and the objective of the study.

Research hypothesis examples

Here are some good research hypothesis examples:

“The use of a specific type of therapy will lead to a reduction in symptoms of depression in individuals with a history of major depressive disorder.”

“Providing educational interventions on healthy eating habits will result in weight loss in overweight individuals.”

“Plants that are exposed to certain types of music will grow taller than those that are not exposed to music.”

“The use of the plant growth regulator X will lead to an increase in the number of flowers produced by plants.”

Characteristics that make a research hypothesis weak are unclear variables, unoriginality, being too general or too vague, and being untestable. A weak hypothesis leads to weak research and improper methods.

Some bad research hypothesis examples (and the reasons why they are “bad”) are as follows:

“This study will show that treatment X is better than any other treatment.” (This statement is not testable, too broad, and does not consider other treatments that may be effective.)

“This study will prove that this type of therapy is effective for all mental disorders.” (This statement is too broad and not testable as mental disorders are complex and different disorders may respond differently to different types of therapy.)

“Plants can communicate with each other through telepathy.” (This statement is not testable and lacks a scientific basis.)

Importance of testable hypothesis

If a research hypothesis is not testable, the results will not prove or disprove anything meaningful. The conclusions will be vague at best. A testable hypothesis helps a researcher focus on the study outcome and understand the implication of the question and the different variables involved. A testable hypothesis helps a researcher make precise predictions based on prior research.

To be considered testable, there must be a way to prove that the hypothesis is true or false; further, the results of the hypothesis must be reproducible.

Frequently Asked Questions (FAQs) on research hypothesis

1. What is the difference between a research question and a research hypothesis?

A research question defines the problem and helps outline the study objective(s). It is an open-ended statement that is exploratory or probing in nature. Therefore, it does not make predictions or assumptions. It helps a researcher identify what information to collect. A research hypothesis, however, is a specific, testable prediction about the relationship between variables. Accordingly, it guides the study design and data analysis approach.

2. When should the null hypothesis be rejected?

A null hypothesis should be rejected when the evidence from a statistical test shows that the observed data would be unlikely if it were true. This happens when the p-value from the test is less than the defined significance level (e.g., 0.05). Rejecting the null hypothesis does not necessarily mean that the alternative hypothesis is true; it simply means that the evidence found is not compatible with the null hypothesis.
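The decision rule just described is simple enough to write down directly. The following is a minimal sketch; the function name `decide` is hypothetical, and the default significance level of 0.05 simply mirrors the example in the text rather than being a universal standard.

```python
def decide(p_value, alpha=0.05):
    """Apply the usual decision rule to a p-value and significance level."""
    if not 0 <= p_value <= 1:
        raise ValueError("p_value must be between 0 and 1")
    if p_value < alpha:
        return "reject the null hypothesis"
    # A large p-value is not evidence that the null hypothesis is true.
    return "fail to reject the null hypothesis"


print(decide(0.003))
print(decide(0.20))
```

The wording of the second outcome is deliberate: the test can fail to reject the null hypothesis, but it never proves it.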

3. How can I be sure my hypothesis is testable?

A testable hypothesis should be specific and measurable, and it should state a clear relationship between variables that can be tested with data. To ensure that your hypothesis is testable, consider the following:

- Clearly define the key variables in your hypothesis. You should be able to measure and manipulate these variables in a way that allows you to test the hypothesis.

- The hypothesis should predict a specific outcome or relationship between variables that can be measured or quantified.

- You should be able to collect the necessary data within the constraints of your study.

- It should be possible for other researchers to replicate your study, using the same methods and variables.

- Your hypothesis should be testable by using appropriate statistical analysis techniques, so you can draw conclusions, and make inferences about the population from the sample data.

- The hypothesis should be able to be disproven or rejected through the collection of data.

4. How do I revise my research hypothesis if my data does not support it?

If your data does not support your research hypothesis, you will need to revise it or develop a new one. You should examine your data carefully and identify any patterns or anomalies, re-examine your research question, and/or revisit your theory to look for any alternative explanations for your results. Based on your review of the data, literature, and theories, modify your research hypothesis to better align it with the results you obtained. Use your revised hypothesis to guide your research design and data collection. It is important to remain objective throughout the process.

5. I am performing exploratory research. Do I need to formulate a research hypothesis?

As opposed to “confirmatory” research, where a researcher has some idea about the relationship between the variables under investigation, exploratory research (or hypothesis-generating research) looks into a completely new topic about which limited information is available. Therefore, the researcher will not have any prior hypotheses. In such cases, a researcher will need to develop a post-hoc hypothesis, that is, a research hypothesis generated after the results are known.

6. How is a research hypothesis different from a research question?

A research question is an inquiry about a specific topic or phenomenon, typically expressed as a question. It seeks to explore and understand a particular aspect of the research subject. In contrast, a research hypothesis is a specific statement or prediction that suggests an expected relationship between variables. It is formulated based on existing knowledge or theories and guides the research design and data analysis.

7. Can a research hypothesis change during the research process?

Yes, research hypotheses can change during the research process. As researchers collect and analyze data, new insights and information may emerge that require modification or refinement of the initial hypotheses. This can be due to unexpected findings, limitations in the original hypotheses, or the need to explore additional dimensions of the research topic. Flexibility is crucial in research, allowing for adaptation and adjustment of hypotheses to align with the evolving understanding of the subject matter.

8. How many hypotheses should be included in a research study?

The number of research hypotheses in a research study varies depending on the nature and scope of the research. It is not necessary to have multiple hypotheses in every study. Some studies may have only one primary hypothesis, while others may have several related hypotheses. The number of hypotheses should be determined based on the research objectives, research questions, and the complexity of the research topic. It is important to ensure that the hypotheses are focused, testable, and directly related to the research aims.

9. Can research hypotheses be used in qualitative research?

Yes, research hypotheses can be used in qualitative research, although they are more commonly associated with quantitative research. In qualitative research, hypotheses may be formulated as tentative or exploratory statements that guide the investigation. Instead of testing hypotheses through statistical analysis, qualitative researchers may use the hypotheses to guide data collection and analysis, seeking to uncover patterns, themes, or relationships within the qualitative data. The emphasis in qualitative research is often on generating insights and understanding rather than confirming or rejecting specific research hypotheses through statistical testing.




The Ohio State University


Research Questions & Hypotheses

Generally, in quantitative studies, reviewers expect hypotheses rather than research questions. However, research questions and hypotheses serve different purposes and can be beneficial when used together.

Research Questions

Clarify the research’s aim (Farrugia et al., 2010).

- Research often begins with an interest in a topic, but a deep understanding of the subject is crucial to formulate an appropriate research question.

- Descriptive: “What factors most influence the academic achievement of senior high school students?”

- Comparative: “What is the performance difference between teaching methods A and B?”

- Relationship-based: “What is the relationship between self-efficacy and academic achievement?”

- Increasing knowledge about a subject can be achieved through systematic literature reviews, in-depth interviews with patients (and proxies), focus groups, and consultations with field experts.

- Some funding bodies, like the Canadian Institute for Health Research, recommend conducting a systematic review or a pilot study before seeking grants for full trials.

- The presence of multiple research questions in a study can complicate the design, statistical analysis, and feasibility.

- It’s advisable to focus on a single primary research question for the study.

- The primary question, clearly stated at the end of a grant proposal’s introduction, usually specifies the study population, intervention, and other relevant factors.

- The FINER criteria underscore aspects that can enhance the chances of a successful research project, including specifying the population of interest, aligning with scientific and public interest, clinical relevance, and contribution to the field, while complying with ethical and national research standards.

| Letter | Criterion |
| --- | --- |
| F | Feasible |
| I | Interesting |
| N | Novel |
| E | Ethical |
| R | Relevant |

- The PICOT approach is crucial in developing the study’s framework and protocol, influencing inclusion and exclusion criteria and identifying patient groups for inclusion.

| Letter | Element |
| --- | --- |
| P | Population (patients) |
| I | Intervention (for intervention studies only) |
| C | Comparison group |
| O | Outcome of interest |
| T | Time |

- Defining the specific population, intervention, comparator, and outcome helps in selecting the right outcome measurement tool.

- The more precise the population definition and stricter the inclusion and exclusion criteria, the more significant the impact on the interpretation, applicability, and generalizability of the research findings.

- A restricted study population enhances internal validity but may limit the study’s external validity and generalizability to clinical practice.

- A broadly defined study population may better reflect clinical practice but could increase bias and reduce internal validity.

- An inadequately formulated research question can negatively impact study design, potentially leading to ineffective outcomes and affecting publication prospects.

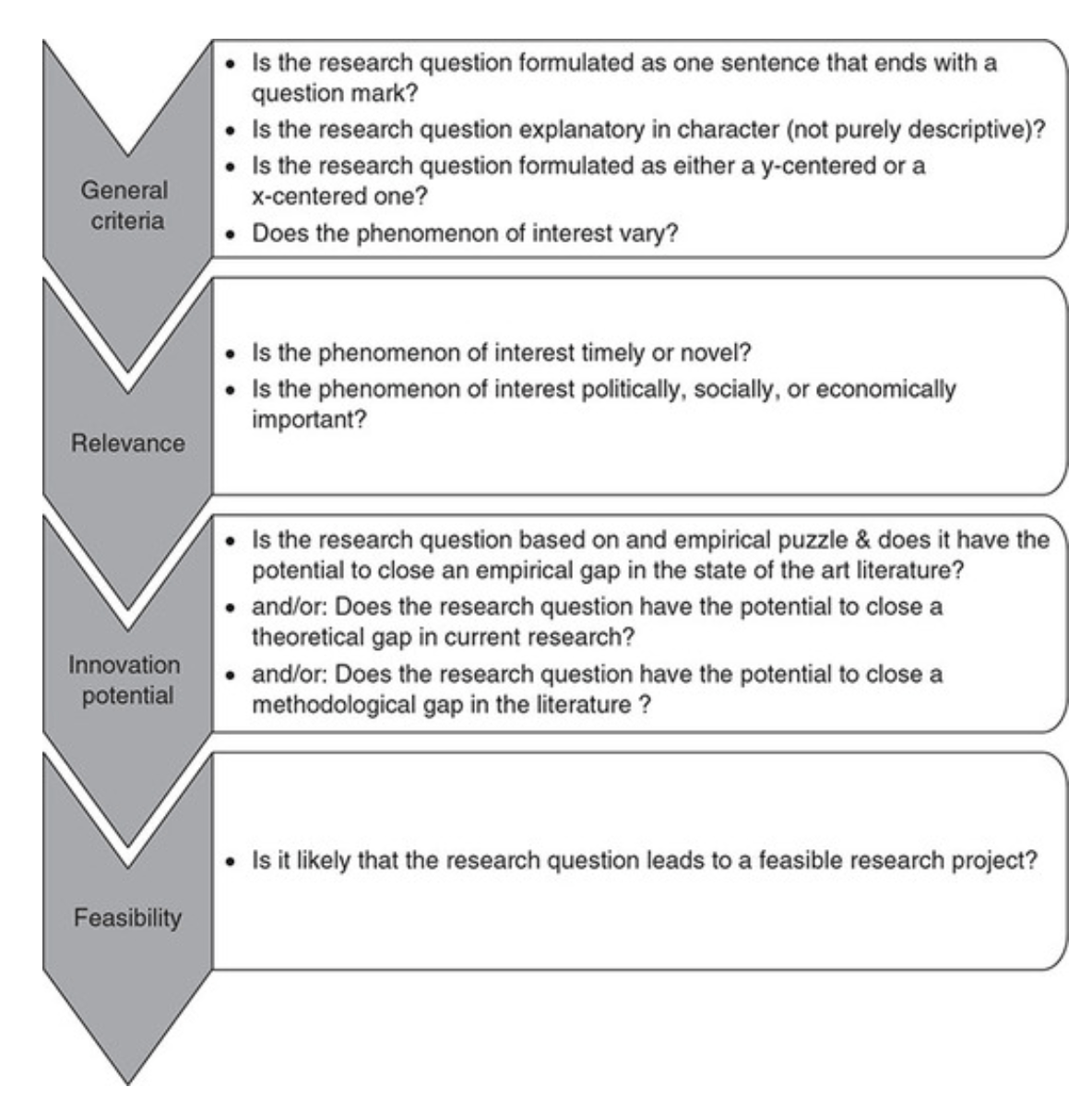

Checklist: Good research questions for social science projects (Panke, 2018)

Research Hypotheses

Present the researcher’s predictions based on specific statements.

- These statements define the research problem or issue and indicate the direction of the researcher’s predictions.

- Formulating the research question and hypothesis from existing data (e.g., a database) can lead to multiple statistical comparisons and potentially spurious findings due to chance.

- The research or clinical hypothesis, derived from the research question, shapes the study’s key elements: sampling strategy, intervention, comparison, and outcome variables.

- Hypotheses can express a single outcome or multiple outcomes.

- After statistical testing, the null hypothesis is either rejected or not rejected based on whether the study’s findings are statistically significant.

- Hypothesis testing helps determine if observed findings are due to true differences and not chance.

- Hypotheses can be 1-sided (specific direction of difference) or 2-sided (presence of a difference without specifying direction).

- 2-sided hypotheses are generally preferred unless there’s a strong justification for a 1-sided hypothesis.

- A solid research hypothesis, informed by a good research question, influences the research design and paves the way for defining clear research objectives.
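The difference between 1-sided and 2-sided hypotheses can be made concrete with a small exact permutation test. The sketch below is only one possible way to test a difference between two groups; the function name `permutation_p_values` and the two tiny samples are hypothetical and were invented for illustration. It enumerates every way of splitting the pooled values into two groups and counts how often the difference in means is at least as large as the observed one (1-sided) or at least as large in absolute value (2-sided).

```python
from itertools import combinations


def permutation_p_values(group_a, group_b):
    """Exact permutation test on the difference in means.

    Returns (one_sided_p, two_sided_p), where the one-sided alternative
    is "group_a has the larger mean".
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    total = sum(pooled)

    def mean_diff(sum_a):
        return sum_a / n_a - (total - sum_a) / n_b

    observed = mean_diff(sum(group_a))
    eps = 1e-12  # tolerance for floating-point ties
    splits = greater = extreme = 0
    # Choose by index so that repeated values are handled correctly.
    for idx in combinations(range(len(pooled)), n_a):
        diff = mean_diff(sum(pooled[i] for i in idx))
        splits += 1
        if diff >= observed - eps:
            greater += 1
        if abs(diff) >= abs(observed) - eps:
            extreme += 1
    return greater / splits, extreme / splits


# Hypothetical scores for two small groups (illustration only).
one_sided, two_sided = permutation_p_values([12, 14, 15, 17], [9, 10, 11, 13])
print(f"1-sided p = {one_sided:.4f}, 2-sided p = {two_sided:.4f}")
```

For the same data the 2-sided p-value is never smaller than the 1-sided one, which is one reason a 1-sided hypothesis needs a strong justification stated in advance.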

Types of Research Hypothesis

- In a Y-centered research design, the focus is on the dependent variable (DV) which is specified in the research question. Theories are then used to identify independent variables (IV) and explain their causal relationship with the DV.

- Example: “An increase in teacher-led instructional time (IV) is likely to improve student reading comprehension scores (DV), because extensive guided practice under expert supervision enhances learning retention and skill mastery.”

- Hypothesis Explanation: The dependent variable (student reading comprehension scores) is the focus, and the hypothesis explores how changes in the independent variable (teacher-led instructional time) affect it.

- In X-centered research designs, the independent variable is specified in the research question. Theories are used to determine potential dependent variables and the causal mechanisms at play.

- Example: “Implementing technology-based learning tools (IV) is likely to enhance student engagement in the classroom (DV), because interactive and multimedia content increases student interest and participation.”

- Hypothesis Explanation: The independent variable (technology-based learning tools) is the focus, with the hypothesis exploring its impact on a potential dependent variable (student engagement).

- Probabilistic hypotheses suggest that changes in the independent variable are likely to lead to changes in the dependent variable in a predictable manner, but not with absolute certainty.

- Example: “The more teachers engage in professional development programs (IV), the more their teaching effectiveness (DV) is likely to improve, because continuous training updates pedagogical skills and knowledge.”

- Hypothesis Explanation: This hypothesis implies a probable relationship between the extent of professional development (IV) and teaching effectiveness (DV).

- Deterministic hypotheses state that a specific change in the independent variable will lead to a specific change in the dependent variable, implying a more direct and certain relationship.

- Example: “If the school curriculum changes from traditional lecture-based methods to project-based learning (IV), then student collaboration skills (DV) are expected to improve because project-based learning inherently requires teamwork and peer interaction.”

- Hypothesis Explanation: This hypothesis presumes a direct and definite outcome (improvement in collaboration skills) resulting from a specific change in the teaching method.

- Descriptive hypotheses state an expected pattern or difference without implying a direct cause-and-effect relationship.

- Example: “Students who identify as visual learners will score higher on tests that are presented in a visually rich format compared to tests presented in a text-only format.”

- Explanation: This hypothesis aims to describe the potential difference in test scores between visual learners taking visually rich tests and text-only tests, without implying a direct cause-and-effect relationship.

- Comparative hypotheses predict a difference between two or more groups or conditions.

- Example: “Teaching method A will improve student performance more than method B.”

- Explanation: This hypothesis compares the effectiveness of two different teaching methods, suggesting that one will lead to better student performance than the other. It implies a direct comparison but does not necessarily establish a causal mechanism.

- Relationship-based hypotheses predict that two variables are related.

- Example: “Students with higher self-efficacy will show higher levels of academic achievement.”

- Explanation: This hypothesis predicts a relationship between the variable of self-efficacy and academic achievement. Unlike a causal hypothesis, it does not necessarily suggest that one variable causes changes in the other, but rather that they are related in some way.

Tips for developing research questions and hypotheses for research studies

- Perform a systematic literature review (if one has not been done) to increase knowledge and familiarity with the topic and to assist with research development.

- Learn about current trends and technological advances on the topic.

- Seek careful input from experts, mentors, colleagues, and collaborators, as this will help you refine the research question and guide the research study.

- Use the FINER criteria in the development of the research question.

- Ensure that the research question follows PICOT format.

- Develop a research hypothesis from the research question.

- Ensure that the research question and objectives are answerable, feasible, and clinically relevant.

If your research hypotheses are derived from your research questions, particularly when multiple hypotheses address a single question, it’s recommended to use both research questions and hypotheses. However, if this isn’t the case, using hypotheses over research questions is advised. It’s important to note these are general guidelines, not strict rules. If you opt not to use hypotheses, consult with your supervisor for the best approach.

Farrugia, P., Petrisor, B. A., Farrokhyar, F., & Bhandari, M. (2010). Practical tips for surgical research: Research questions, hypotheses and objectives. Canadian Journal of Surgery / Journal canadien de chirurgie, 53(4), 278–281.

Hulley, S. B., Cummings, S. R., Browner, W. S., Grady, D., & Newman, T. B. (2007). Designing clinical research. Philadelphia.

Panke, D. (2018). Research design & method selection: Making good choices in the social sciences. Research Design & Method Selection, 1-368.


Step-by-Step Guide: How to Craft a Strong Research Hypothesis


A research hypothesis is a concise statement about the expected result of an experiment or project. In many ways, a research hypothesis represents the starting point for a scientific endeavor, as it establishes a tentative assumption that is eventually substantiated or falsified, ultimately improving our certainty about the subject investigated.

To make the process easier, this article discusses the purpose of research hypotheses and lists the most essential qualities of a compelling hypothesis. Let’s find out!

How to Craft a Research Hypothesis

Crafting a research hypothesis begins with a comprehensive literature review to identify a knowledge gap in your field. Once you find a question or problem, come up with a possible answer or explanation, which becomes your hypothesis. Now think about the specific methods of experimentation that can prove or disprove the hypothesis, which ultimately lead to the results of the study.

Listed below are some standard formats in which you can formulate a hypothesis¹:

- A hypothesis can use the if/then format when it primarily seeks to explore the correlation between two variables in a study.

Example: If patients are administered drug X, then they will experience reduced fatigue from cancer treatment.

- A hypothesis can adopt the when X/then Y format when it primarily aims to expose a connection between two variables.

Example: When workers spend a significant portion of their waking hours in sedentary work, then they experience a greater frequency of digestive problems.

- A hypothesis can also take the form of a direct statement.

Example: Drug X and drug Y reduce the risk of cognitive decline through the same chemical pathways.

What are the Features of an Effective Hypothesis?

Hypotheses in research need to satisfy specific criteria to be considered scientifically rigorous. Here are the most notable qualities of a strong hypothesis:

- Testability: Ensure the hypothesis allows you to work towards observable and testable results.

- Brevity and objectivity: Present your hypothesis as a brief statement and avoid wordiness.

- Clarity and Relevance: The hypothesis should reflect a clear idea of what we know and what we expect to find out about a phenomenon and address the significant knowledge gap relevant to a field of study.

Understanding Null and Alternative Hypotheses in Research

There are two types of hypotheses used commonly in research that aid statistical analyses. These are known as the null hypothesis and the alternative hypothesis. A null hypothesis is a statement assumed to be factual in the initial phase of the study.

For example, if a researcher is testing the efficacy of a new drug, then the null hypothesis will posit that the drug has no benefits compared to an inactive control or placebo. Suppose the data collected through a drug trial lead a researcher to reject the null hypothesis. In that case, the result is considered to support the alternative hypothesis, which in this example is that the new drug provides benefits compared to the placebo.

Let’s take a closer look at the null hypothesis and alternative hypothesis with two more examples:

Null Hypothesis:

The rate of decline in the number of species in habitat X in the last year is the same as in the last 100 years when controlled for all factors except the recent wildfires.

In the next experiment, the researcher will attempt to reject this null hypothesis experimentally in order to support the following alternative hypothesis:

The rate of decline in the number of species in habitat X in the last year is different from the rate of decline in the last 100 years when controlled for all factors other than the recent wildfires.

In the pair of null and alternative hypotheses stated above, a statistical comparison of the rate of species decline over a century and the preceding year will help the researcher experimentally test the null hypothesis, helping to draw scientifically valid conclusions about two factors: wildfires and species decline.

We also recommend that researchers pay attention to contextual echoes and connections when writing research hypotheses. Research hypotheses are often closely linked to the introduction², such as the context of the study, and the introduction can similarly influence the reader’s judgment of the relevance and validity of the research hypothesis.


References

- Hypotheses – The University Writing Center. (n.d.). https://writingcenter.tamu.edu/writing-speaking-guides/hypotheses

- Shaping the research question and hypothesis. (n.d.). Students. https://students.unimelb.edu.au/academic-skills/graduate-research-services/writing-thesis-sections-part-2/shaping-the-research-question-and-hypothesis


What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context.

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested. For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student engages in – sounds reasonable, right? It’s based on reasonable assumptions, underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper. In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis.

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity, clarity and testability.

Let’s take a look at these more closely.

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’s being assessed (who or what variables are involved) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that with the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague, and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By who? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂
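To make the sleep example more tangible, here is a rough sketch of how the two groups’ test results might be compared. It assumes Welch’s t-test as the comparison (the text above does not name a specific test), and the scores are invented purely for illustration. The function `welch_t` is a hypothetical helper that returns the t statistic and the approximate degrees of freedom; turning these into a p-value would require a t-distribution table or a statistics library.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error contributed by each group (sample variance / n).
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t_stat = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    dof = (se2_a + se2_b) ** 2 / (se2_a**2 / (n_a - 1) + se2_b**2 / (n_b - 1))
    return t_stat, dof


# Hypothetical standardised test scores, invented for illustration only.
slept_8_or_more = [78, 82, 85, 88, 90]
slept_less_than_8 = [70, 72, 75, 79, 74]

t_stat, dof = welch_t(slept_8_or_more, slept_less_than_8)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A large positive t statistic would be consistent with the hypothesis that the well-rested group scores higher, but a real study would also need adequate sample sizes and control of confounding factors.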

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If you do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis, alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that there is no real effect or relationship in a given set of observations and that any observed differences are due to chance alone.

And there you have it – hypotheses in a nutshell.


17 Comments

Very useful information. I benefit more from getting more information in this regard.

Very great insight,educative and informative. Please give meet deep critics on many research data of public international Law like human rights, environment, natural resources, law of the sea etc

In a book I read a distinction is made between null, research, and alternative hypothesis. As far as I understand, alternative and research hypotheses are the same. Can you please elaborate? Best Afshin

This is a self explanatory, easy going site. I will recommend this to my friends and colleagues.

Very good definition. How can I cite your definition in my thesis? Thank you. Is nul hypothesis compulsory in a research?

It’s a counter-proposal to be proven as a rejection

Please what is the difference between alternate hypothesis and research hypothesis?

It is a very good explanation. However, it limits hypotheses to statistically tasteable ideas. What about for qualitative researches or other researches that involve quantitative data that don’t need statistical tests?

In qualitative research, one typically uses propositions, not hypotheses.

could you please elaborate it more

I’ve benefited greatly from these notes, thank you.

This is very helpful

well articulated ideas are presented here, thank you for being reliable sources of information

Excellent. Thanks for being clear and sound about the research methodology and hypothesis (quantitative research)

I have only a simple question regarding the null hypothesis. – Is the null hypothesis (Ho) known as the reversible hypothesis of the alternative hypothesis (H1? – How to test it in academic research?

this is very important note help me much more

Hi” best wishes to you and your very nice blog”


Ed Tech as Applied Research: A Framework in Seven Hypotheses

Key takeaways.

- Seven hypotheses explore the feasibility of educational technology — typically considered as supporting teaching and learning — as applied research by providing an initial framework built on traditional research processes.

- New knowledge results from research, usually about fundamental questions, but ed tech pursues applied research about practical problems using qualitative and quantitative methods and local standards.

- Seeing ed tech as research emphasizes the collaborative nature of our work by helping shape our conversations about the knowledge we create, its standards, and its methods.

Edward R. O'Neill, Senior Instructional Designer, Yale University

Those of us who provide educational technology services may see ourselves in many different frameworks: as IT service providers, as resources to be allocated, as technology experts, as support staff — to name only a few. I might be troubleshooting, tinkering, keeping clients happy, performing one of my job duties, or solving problems, easy and hard.

We may also see ourselves as part of human services more broadly, supporting human agency and growth. However we think about this, we support the university’s mission — and by extension some of our culture’s loftiest goals. Teachers teach, learners learn, and educational technologists support their teaching and learning: the dissemination of knowledge to students. But what of knowledge's creation and dissemination beyond our institutions' walls? What relation does ed tech bear to research and publication?

Much has been said and written about ed tech as a service. The framework offered here sees ed tech as scholarship and draws practical consequences from this way of seeing. To explore the aptness of this framework, I offer the following seven hypotheses.

1. Ed tech supports and replicates the university's mission, using the methods characteristic of scholarship in general and research in particular.

We who work in educational technology support the university's mission to preserve, create, and disseminate knowledge. The university disseminates knowledge through a range of activities from publication to teaching, and ed tech's special role is to support the teaching part, although no hard line separates sharing knowledge with scholars, students, and the general public.

To support this mission, ed tech must pursue its own, smaller, practical version of the university's mission.

- We must gather and keep, discover and share knowledge about educational technology so that we can recommend the right tool for each task and support these tools effectively and efficiently.

- Like other forms of research, we must do this transparently, using standards that evolve through discussion and experience.

- Even supporting the tools we recommend involves disseminating knowledge: helping faculty and students learn to use these tools (or others of their choosing) is itself a kind of teaching.

In short, ed tech is research and teaching of an applied and local sort.

Our knowledge in ed tech is practical: it aims to solve immediate problems. One key practical problem we face is, What is the best tool to achieve a specific goal? (Ed tech fits means to ends.) Answering this kind of question is perfectly feasible using methods native to higher education. When we hew to the methods and values of higher ed, we draw closer to those we serve: the faculty. We come to understand faculty better, as they do us, by sharing common values, methods, and habits of mind and of doing. In this way we form a community.

In higher ed, new knowledge results from a process called research. But where scientists and scholars in higher ed usually pursue basic research about fundamental questions using precise methods and widely shared disciplinary standards, in educational technology, we pursue applied research about practical problems using a variety of general methods and standards that are on the one hand professional and on the other hand local and institutional.

Said differently, educational technology is applied research using mixed methods and local standards.

2. Ed tech work fits into the three phases of the research process.

Our ways of creating ed tech knowledge map well onto the methods used in higher education for research. Broadly construed, research involves three main phases: exploring, defining, and testing. The testing process is often construed as hypothesis testing. Whether they're scientists or humanists, scholars explore a problem, define its terms, and then develop testable hypotheses. When we in ed tech know the phase of research we have reached and what hypotheses we want to test, it's easier to track our progress, regularize our work, and target an explicit and shared set of standards: all things we must do to be effective, efficient, and transparent members of our communities.

An example is helpful. In sociology, Erving Goffman either created or inspired a new approach (variously called the dramaturgical approach, ethnomethodology, conversational analysis, or discourse analysis) by the way he explored, defined, and tested a specific phenomenon. 1

- Exploring. Goffman was curious about why people interact the way they do. Why do they talk this way or that, wear these clothes or those in specific contexts, such as a rural village or a psychiatric hospital?

- Defining. Goffman saw these questions as problems of "social order": how do people organize their actions and interactions? Goffman extended this traditional area in sociology to the micro-level of small gestures and ways of speaking. He defined his research as answering the question "How is social order maintained?"

- Testing and Hypothesis Testing. For the purposes of testing, Goffman collected observational data about face-to-face social interactions and behavioral reports from memoirs and newspapers. He also recorded conversations and analyzed the transcripts. Goffman developed specific explanations (his hypotheses), and then "tested" them against his collected observations, reports, and transcripts.

In ed tech, we also explore, define, and test. Our explorations revolve around practices, however.

- Exploring. What tools are people using? For what purposes? With what results? Here and elsewhere?

- Defining. What kinds of tools and functions are involved? What kinds of purposes? For example, is videoconferencing good for collaboration? For assessment? For rehearsal and feedback?

For these two phases of research we draw on the work of our colleagues at other institutions, as well as that of researchers in the areas of education, psychology, organizational behavior, and more.

When it comes to tools and learning processes, our categories need to be useful and shareable, parsimonious and rigorous without becoming abstract. We can't speak our own private language, nor a recondite professional jargon. We need few enough categories to avoid being overwhelmed, and we need clear lines to avoid becoming theological.

- Testing. Finally, we need to test the tools and verify that they work well enough for the purposes at hand. This requires asking, does this tool do what people say? Reliably? Is it usable enough to hand off to instructors and students? Is it so complex that the support time will eat us alive? What evidence do we have, and how sure are we of that evidence?

Calling what we do "research" does not imply we need a controlled double-blind study to confirm what we know: We need to be sure that we are effective and that the degree of certainty escalates with the investment of time, labor, and money.

From another angle, ed tech as research is akin to scientific teaching as a process that constantly tests its own effectiveness as a kind of hypothesis testing. Ed tech activity of this kind will also support scientific teaching better because it not only "walks the walk," it makes us better at practical hypothesis testing.

3. Ed tech can use familiar research methods as well as a broad understanding of learning.

Our methods are both qualitative and quantitative — in a word, mixed. We collect both numbers and descriptions. Our toolkit should encompass a range, including these basic tools:

- Literature review: reading research and publications about others' practices, experiences, and results

- Interviews: calling, e-mailing, and chatting with faculty and students

- Statistics: counting what we do, hear, and see

- Field experiments: testing of a tool by professors to see how it works for them

- Equipment calibration and testing: using the tool ourselves to see if it works to our standards

- Natural experiments: finding similar situations that use different approaches to create a semi-controlled experiment

- Observational studies: watching teachers and learners at work to discover what happens with interesting tools "in the wild"

- Ethnographies: interviewing users and capturing the data as audio, video, or notes in order to get a rich account of the experience of teaching or learning with a specific tool

- Semi-controlled experiments: finding two similar classes or sections, one using a tool and the other not (or using a different tool)

- Meta-analyses: comparing the results of disparate research studies or our own observations

Some research methods fit better in one phase of the research process than do others. Each phase also has its key activities: verbs that further specify the acts of exploration and definition.

| Phase | Activities | Research Methods |
|---|---|---|
| EXPLORE | Observe, collect, summarize, record | Lit review, interview, field experiments, natural experiment, observational studies |
| DEFINE | Characterize, analyze, categorize | Lit review, debate, professional norms |
| TEST | Check, verify, measure, correlate, compare | Testing, observational studies, interviews, controlled experiments |
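The table above can also be read as a simple lookup structure. The following is a minimal Python sketch, not part of the original framework: it transcribes the phases, activities, and methods as listed, and adds a hypothetical helper, `phases_for_method`, to show which phases a given method fits. The names `PHASES` and `phases_for_method` are assumptions made for illustration.

```python
# Phases, activities, and research methods, transcribed from the table above.
PHASES = {
    "EXPLORE": {
        "activities": ["observe", "collect", "summarize", "record"],
        "methods": ["lit review", "interview", "field experiments",
                    "natural experiment", "observational studies"],
    },
    "DEFINE": {
        "activities": ["characterize", "analyze", "categorize"],
        "methods": ["lit review", "debate", "professional norms"],
    },
    "TEST": {
        "activities": ["check", "verify", "measure", "correlate", "compare"],
        "methods": ["testing", "observational studies", "interviews",
                    "controlled experiments"],
    },
}


def phases_for_method(method: str) -> list[str]:
    """Return the phases whose method list contains `method` (hypothetical helper)."""
    wanted = method.strip().lower()
    return [phase for phase, info in PHASES.items() if wanted in info["methods"]]


# A literature review serves both exploring and defining.
print(phases_for_method("lit review"))
# Observational studies appear under both exploring and testing.
print(phases_for_method("observational studies"))
```

Seen this way, a method such as the literature review is not tied to a single phase; the lookup makes that overlap explicit.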

Since our research is about how tools fit purposes, we need some notions about the purpose of ed tech: supporting learning. Without committing to one specific theory of learning, we can specify four elements that help define semi-agnostically how we see learning: what it is, how it unfolds in time, and big and small ways to enable it.

- Basic definitions. What is learning itself? What are the main frameworks that have been used effectively to understand and support learning? E.g., goal orientation, motivation, working memory.

- Process elements. What are the important moments in the instructional process? E.g., defining the learning objective, the student practicing or rehearsing and getting feedback, assessing whether the student has learned, etc.

- Whole strategies. What instructional strategies have been found effective? E.g., authentic learning, problem-based learning, inquiry-based learning.

- Valued supportive behaviors. What activities that are deeply valued in the context of higher education support learning? E.g., collaboration, writing, dialogue, and debate.

4. Knowing the phases of the research process lets us share where we are in that process for any given set of tools.

As we progress from exploring tools to defining their uses and testing them, we constantly gather and share our knowledge. Ideally, there is no single moment at which we suddenly need to know something precise without any background whatever. Instead, we will be most successful when we collect and share our knowledge gradually as we go, tracking where we are in the exploring, defining, testing process for each category of tools and purposes. If we do not know at any given moment what tools are used to support collaboration or assessment and how well they work for that purpose, then we will have a much harder time testing tools according to our own standards — which need to be explicit from the start, as well as constantly refined.

For each kind of tool and purpose, we should know which phases of the research process (exploration, definition, testing) we have completed, which phase we are in, and which phases remain. A robust system would map all our important dimensions against each other: phases, purposes, and tools. E.g.,

"These are the tools we're exploring, those are the tools we are testing, and here are the purposes we think they're good for."

"These are tools we have tested and that meet our standards, and here are the purposes we see them as fit for, along with our methods and evidence."

Not all tools are neatly focused toward specific ends. Some tools will likely be so basic that their purpose is merely utilitarian or back-end, such as file sharing, sending messages, or social interaction. Some megatools enfold many functions, such as the LMS, blogging platforms, and content management systems. These megatools support broadly valued activities in higher education. Ed tech knowledge about how tools fit purposes thus has a definite shape.

| Questions | Category |
|---|---|
| What is it? | Tools |
| What does it do? | Live synchronous communication, multimedia production, etc. |
| Why would I need it? | Specific teaching and learning, and more general activities of organizing and communicating |
| Does it work? | Testing and the evidence we find and collect ourselves |
| How do I get it? | Availability |
| Who supports it? | Support |

The structure of our knowledge makes that knowledge amenable to gathering and sharing by various methods. But a good tool for managing this information would go a long way, and being experts in fitting tools to purposes, we will likely conclude that the tool that lets us track and share our knowledge is a robust but elegant content management system (CMS). Such a system would support a narrow range of utterances:

"This is a tool we are exploring/testing/have tested for this or that learning purpose." Tool, phase, and purpose.

"This is information we have found about a specific tool from a specific research method: interview, lit review, observation, etc." Data, research method, tool, and purpose.

"Here is a reference to a specific tool we plan to explore, along with possible purposes."

Such a system would work as a kind of dashboard. It would also allow us to track our work internally and simultaneously publish whatever elements we are ready to share. Moving knowledge from system to system is inefficient, however, and when support comes first, sharing our knowledge will always take a back seat. Therefore the knowledge management system and the knowledge sharing system would ideally be one and the same.
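As a rough illustration of how one store could serve both internal tracking and publication, here is a minimal Python sketch. It is only a sketch under stated assumptions: the names `Entry`, `KnowledgeBase`, `STATUSES`, and `ready_to_share`, as well as the sample tools and findings, are hypothetical. The fields simply mirror the dimensions named above (tool, phase, purpose, research method, data).

```python
from dataclasses import dataclass

# Status wording borrowed from the sample utterances above.
STATUSES = ("exploring", "testing", "tested")


@dataclass
class Entry:
    tool: str                     # hypothetical tool name
    phase: str                    # one of STATUSES
    purpose: str                  # e.g., "collaboration", "assessment"
    method: str = ""              # research method behind the data, if any
    data: str = ""                # what was found (illustrative text only)
    ready_to_share: bool = False  # publish flag, an assumption of this sketch

    def __post_init__(self):
        if self.phase not in STATUSES:
            raise ValueError(f"unknown phase: {self.phase!r}")


class KnowledgeBase:
    """One store used for both internal tracking and publishing."""

    def __init__(self):
        self._entries: list[Entry] = []

    def add(self, entry: Entry) -> None:
        self._entries.append(entry)

    def dashboard(self) -> dict[str, list[str]]:
        """Internal view: every tracked tool, grouped by phase."""
        grouped: dict[str, list[str]] = {status: [] for status in STATUSES}
        for entry in self._entries:
            grouped[entry.phase].append(entry.tool)
        return grouped

    def published(self) -> list[str]:
        """Public view: only the entries marked ready to share."""
        return [
            f"{e.tool} ({e.phase}) for {e.purpose}"
            for e in self._entries
            if e.ready_to_share
        ]


# Made-up sample entries, for illustration only.
kb = KnowledgeBase()
kb.add(Entry("Videoconferencing tool A", "exploring", "collaboration"))
kb.add(Entry("Quiz tool B", "tested", "assessment",
             method="field experiment",
             data="illustrative finding text",
             ready_to_share=True))

print(kb.dashboard())
print(kb.published())
```

Because the internal dashboard and the published view read from the same entries, nothing has to be copied from one system to another before it can be shared.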