Statistical Analysis in Research: Meaning, Methods and Types


The scientific method is an empirical approach to acquiring new knowledge by making skeptical observations and analyses to develop a meaningful interpretation. It is the basis of research and the primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research works with very large volumes of data, making it difficult to observe or manipulate each data point. As a result, statistical analysis in research becomes a means of evaluating relationships and interconnections between variables with tools and analytical techniques for working with large data. Because researchers can use statistical power analysis to assess the probability of finding an effect in such an investigation, the method can be relatively accurate. Hence, statistical analysis in research simplifies analysis by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate patterns, relationships, and trends to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition of statistical analysis in research implies that the primary focus of the scientific method is quantitative research. Notably, the investigator targets the constructs developed from general concepts, because researchers can quantify their hypotheses and present their findings in simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. Quantitative data, on the other hand, assigns a level of importance, comparing the themes based on their criticality to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean summarizes how much respondents value a certain aspect on average, the variance indicates how widely the responses are spread around that average. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers, and dissertations.

Most Useful Statistical Analysis Methods in Research

Using statistical analysis methods in research is unavoidable, especially in academic assignments, projects, and term papers. It is advisable to seek assistance from your professor or another expert before you start an academic project or write the statistical analysis for a research paper. Consulting an expert when developing a topic for your thesis or a short mid-term assignment can improve your understanding of research methods and the quality of your work. An expert can also help select the most suitable statistical analysis method for your thesis, which influences the choice of data and type of study.

Descriptive Statistics

Descriptive statistics is a statistical method that summarizes quantitative figures to reveal critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students individually, a researcher can identify the most common actions among them. Doing so applies statistical analysis in research, particularly descriptive statistics.

- Measures of central tendency. Central tendency measures are the mean, mode, and median, the averages that summarize the data with a single typical value. They assess the centrality of the probability distribution, hence the name. These measures describe the data in relation to the center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess the intervals between the data points. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. Sometimes researchers investigate how scores relate to one another. Measures of position, such as percentiles, quartiles, and ranks, demonstrate this association. They are often useful when comparing the data to normalized information.
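The four groups of measures above can be illustrated with a short sketch. It uses Python's standard `statistics` module, and the `scores` list is hypothetical data invented for the example, so treat it as an illustration rather than a prescribed workflow.

```python
import statistics
from collections import Counter

# Hypothetical exam scores, used only for illustration.
scores = [55, 60, 60, 70, 75, 80, 80, 80, 90, 100]

# Central tendency: mean, median, and mode
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Frequency: how often each score occurs
frequency = Counter(scores)

# Dispersion: sample variance, sample standard deviation, and range
variance = statistics.variance(scores)
std_dev = statistics.stdev(scores)
value_range = max(scores) - min(scores)

# Position: the three quartiles (25th, 50th, and 75th percentiles)
quartiles = statistics.quantiles(scores, n=4, method="inclusive")

print(mean, median, mode, frequency[80], value_range, quartiles)
```

Note that several quartile conventions exist; `method="inclusive"` is only one of them, so other tools may report slightly different values.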

Inferential Statistics

Inferential statistics is central to statistical analysis in quantitative research. This approach uses statistical tests on sample data to draw conclusions about the population. Examples include t-tests, F-tests, ANOVA, the Mann-Whitney U test, and the Wilcoxon W test, whose results are typically reported with a p-value.

Common Statistical Analysis in Research Types

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money, deriving only valuable information for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for the COVID-19 vaccine. You can download a sample of such a document online to understand the significance such analyses have for stakeholders. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits. Hence, statistical analysis in research is a helpful tool for understanding data.



Copyright © 2022 Statistics and Data

Frequently asked questions

What is statistical analysis?

Statistical analysis is the main method for analyzing quantitative research data. It uses probabilities and models to test predictions about a population from sample data.

Frequently asked questions: Statistics

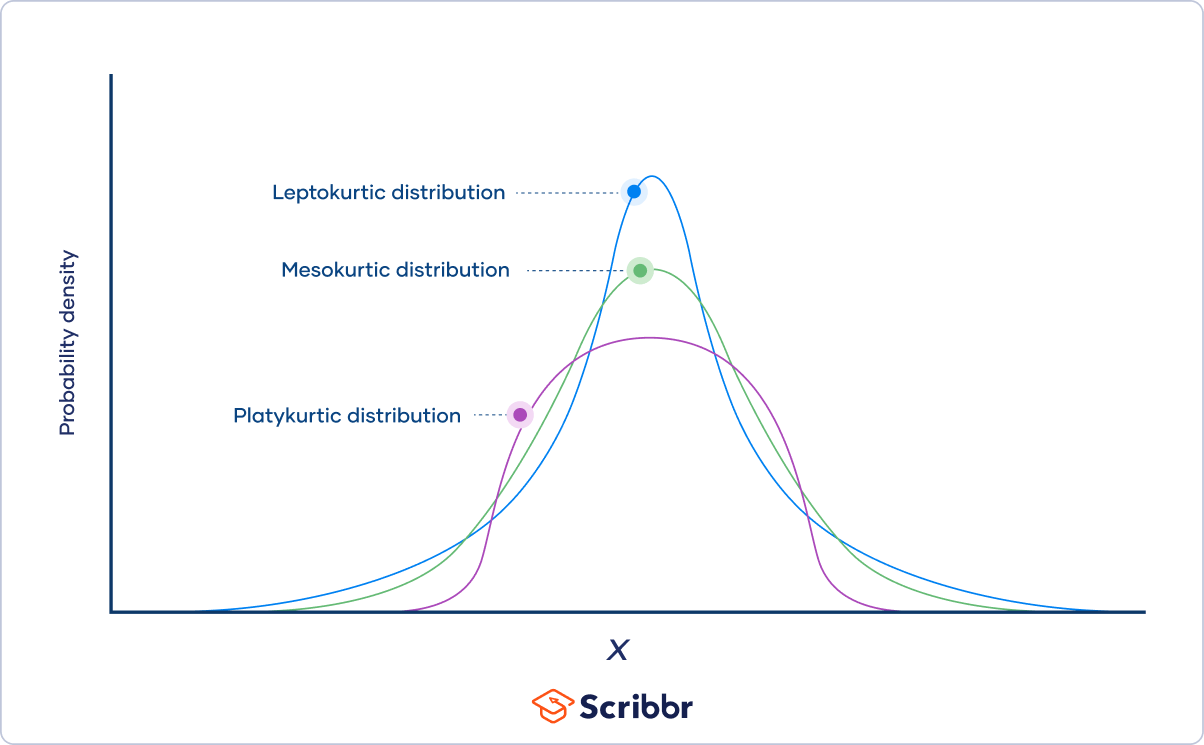

As the degrees of freedom increase, Student’s t distribution becomes less leptokurtic, meaning that the probability of extreme values decreases. The distribution becomes more and more similar to a standard normal distribution.

The three categories of kurtosis are:

- Mesokurtosis: An excess kurtosis of 0. Normal distributions are mesokurtic.

- Platykurtosis: A negative excess kurtosis. Platykurtic distributions are thin-tailed, meaning that they have few outliers.

- Leptokurtosis: A positive excess kurtosis. Leptokurtic distributions are fat-tailed, meaning that they have many outliers.

Probability distributions belong to two broad categories: discrete probability distributions and continuous probability distributions. Within each category, there are many types of probability distributions.

Probability is the relative frequency over an infinite number of trials.

For example, the probability of a coin landing on heads is .5, meaning that if you flip the coin an infinite number of times, it will land on heads half the time.

Since doing something an infinite number of times is impossible, relative frequency is often used as an estimate of probability. If you flip a coin 1000 times and get 507 heads, the relative frequency, .507, is a good estimate of the probability.
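The idea that relative frequency approaches probability as the number of trials grows can be sketched with a small simulation. The function name `relative_frequency_of_heads` and the fixed seed are assumptions made for this illustration.

```python
import random


def relative_frequency_of_heads(n_flips, seed=0):
    """Simulate n_flips fair coin flips and return the share that land heads."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips


# More flips should generally give an estimate closer to .5.
for n in (10, 1_000, 100_000):
    print(n, relative_frequency_of_heads(n))
```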

Categorical variables can be described by a frequency distribution. Quantitative variables can also be described by a frequency distribution, but first they need to be grouped into interval classes.

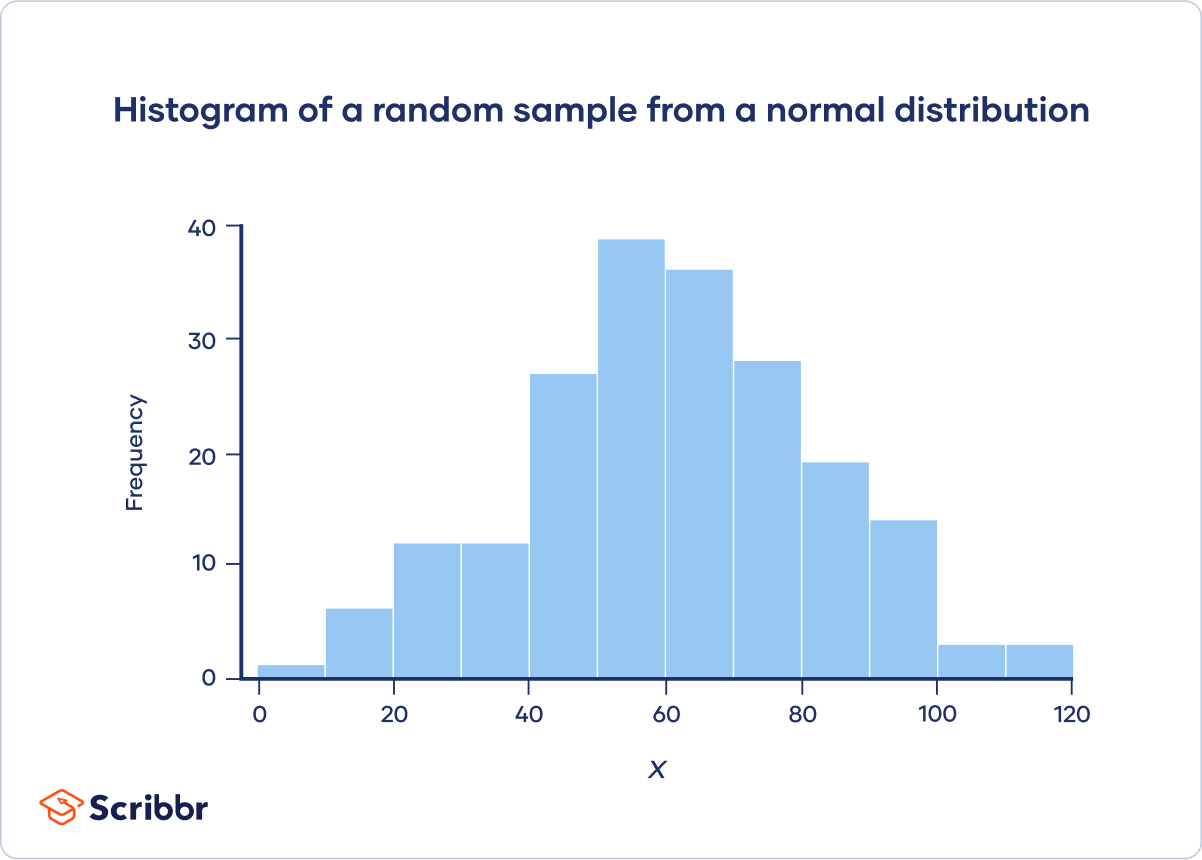

A histogram is an effective way to tell if a frequency distribution appears to have a normal distribution.

Plot a histogram and look at the shape of the bars. If the bars roughly follow a symmetrical bell or hill shape, then the distribution is approximately normally distributed.

You can use the CHISQ.INV.RT() function to find a chi-square critical value in Excel.

For example, to calculate the chi-square critical value for a test with df = 22 and α = .05, click any blank cell and type:

=CHISQ.INV.RT(0.05,22)

You can use the qchisq() function to find a chi-square critical value in R.

For example, to calculate the chi-square critical value for a test with df = 22 and α = .05:

qchisq(p = .05, df = 22, lower.tail = FALSE)

You can use the chisq.test() function to perform a chi-square test of independence in R. Give the contingency table as a matrix for the “x” argument. For example:

m = matrix(data = c(89, 84, 86, 9, 8, 24), nrow = 3, ncol = 2)

chisq.test(x = m)

You can use the CHISQ.TEST() function to perform a chi-square test of independence in Excel. It takes two arguments, CHISQ.TEST(observed_range, expected_range), and returns the p value.
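To make explicit what a chi-square test of independence computes, here is a minimal sketch in plain Python. It uses the same six counts as the R matrix above (R fills a matrix column by column), calculates only the test statistic and degrees of freedom, and leaves the p value to R or Excel.

```python
# Observed counts, laid out like the R matrix above
# (3 rows, 2 columns, filled column by column).
observed = [
    [89, 9],
    [84, 8],
    [86, 24],
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

# Expected count under independence: row total * column total / grand total
expected = [[r * c / grand_total for c in col_totals] for r in row_totals]

# Pearson chi-square statistic: sum of (O - E)^2 / E over all cells
chi_square = sum(
    (o - e) ** 2 / e
    for obs_row, exp_row in zip(observed, expected)
    for o, e in zip(obs_row, exp_row)
)

# Degrees of freedom: (rows - 1) * (columns - 1)
df = (len(observed) - 1) * (len(observed[0]) - 1)

print(round(chi_square, 2), df)
```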

Chi-square goodness of fit tests are often used in genetics. One common application is to check if two genes are linked (i.e., if the assortment is not independent). When genes are linked, the allele inherited for one gene affects the allele inherited for another gene.

Suppose that you want to know if the genes for pea texture (R = round, r = wrinkled) and color (Y = yellow, y = green) are linked. You perform a dihybrid cross between two heterozygous (RY / ry) pea plants. The hypotheses you’re testing with your experiment are:

- Null hypothesis (H₀): The population of offspring have an equal probability of inheriting all possible genotypic combinations. This would suggest that the genes are unlinked.

- Alternative hypothesis (Hₐ): The population of offspring do not have an equal probability of inheriting all possible genotypic combinations. This would suggest that the genes are linked.

You observe 100 peas:

- 78 round and yellow peas

- 6 round and green peas

- 4 wrinkled and yellow peas

- 12 wrinkled and green peas

Step 1: Calculate the expected frequencies

To calculate the expected values, you can make a Punnett square. If the two genes are unlinked, the probability of each genotypic combination is equal.

| | RY | ry | Ry | rY |
|---|---|---|---|---|
| RY | RRYY | RrYy | RRYy | RrYY |
| ry | RrYy | rryy | Rryy | rrYy |
| Ry | RRYy | Rryy | RRyy | RrYy |
| rY | RrYY | rrYy | RrYy | rrYY |

The expected phenotypic ratios are therefore 9 round and yellow: 3 round and green: 3 wrinkled and yellow: 1 wrinkled and green.

From this, you can calculate the expected phenotypic frequencies for 100 peas:

| Phenotype | Observed | Expected |
|---|---|---|
| Round and yellow | 78 | 100 * (9/16) = 56.25 |
| Round and green | 6 | 100 * (3/16) = 18.75 |
| Wrinkled and yellow | 4 | 100 * (3/16) = 18.75 |
| Wrinkled and green | 12 | 100 * (1/16) = 6.25 |

Step 2: Calculate chi-square

| Phenotype | Observed (O) | Expected (E) | O − E | (O − E)² | (O − E)² / E |
|---|---|---|---|---|---|
| Round and yellow | 78 | 56.25 | 21.75 | 473.06 | 8.41 |
| Round and green | 6 | 18.75 | −12.75 | 162.56 | 8.67 |
| Wrinkled and yellow | 4 | 18.75 | −14.75 | 217.56 | 11.60 |
| Wrinkled and green | 12 | 6.25 | 5.75 | 33.06 | 5.29 |

Χ² = 8.41 + 8.67 + 11.60 + 5.29 = 33.97

Step 3: Find the critical chi-square value

Since there are four groups (round and yellow, round and green, wrinkled and yellow, wrinkled and green), there are three degrees of freedom.

For a test of significance at α = .05 and df = 3, the Χ² critical value is 7.82.

Step 4: Compare the chi-square value to the critical value

Χ² = 33.97

Critical value = 7.82

The Χ² value is greater than the critical value.

Step 5: Decide whether to reject the null hypothesis

The Χ² value is greater than the critical value, so we reject the null hypothesis that the population of offspring have an equal probability of inheriting all possible genotypic combinations. There is a significant difference between the observed and expected genotypic frequencies (p < .05).

The data supports the alternative hypothesis that the offspring do not have an equal probability of inheriting all possible genotypic combinations, which suggests that the genes are linked.
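The five steps of the pea example can be reproduced with a short sketch. The dictionary names are assumptions made for illustration, the 9:3:3:1 ratio and the critical value of 7.82 are taken from the text, and the expected counts are computed exactly from the ratio rather than typed in.

```python
# Observed pea counts from the example above.
observed = {
    "round yellow": 78,
    "round green": 6,
    "wrinkled yellow": 4,
    "wrinkled green": 12,
}

# Expected 9:3:3:1 phenotypic ratio if the genes are unlinked.
ratio = {
    "round yellow": 9,
    "round green": 3,
    "wrinkled yellow": 3,
    "wrinkled green": 1,
}

n = sum(observed.values())
ratio_total = sum(ratio.values())

# Step 1: expected frequency for each phenotype
expected = {k: n * ratio[k] / ratio_total for k in observed}

# Step 2: chi-square statistic, the sum of (O - E)^2 / E
chi_square = sum((observed[k] - expected[k]) ** 2 / expected[k] for k in observed)

# Steps 3 to 5: compare against the critical value for alpha = .05, df = 3
critical_value = 7.82
reject_null = chi_square > critical_value

print(round(chi_square, 2), reject_null)
```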

You can use the chisq.test() function to perform a chi-square goodness of fit test in R. Give the observed values in the “x” argument, give the expected values in the “p” argument, and set “rescale.p” to true. For example:

chisq.test(x = c(22,30,23), p = c(25,25,25), rescale.p = TRUE)

You can use the CHISQ.TEST() function to perform a chi-square goodness of fit test in Excel. It takes two arguments, CHISQ.TEST(observed_range, expected_range), and returns the p value.

Both correlations and chi-square tests can test for relationships between two variables. However, a correlation is used when you have two quantitative variables and a chi-square test of independence is used when you have two categorical variables.

Both chi-square tests and t tests can test for differences between two groups. However, a t test is used when you have a dependent quantitative variable and an independent categorical variable (with two groups). A chi-square test of independence is used when you have two categorical variables.

The two main chi-square tests are the chi-square goodness of fit test and the chi-square test of independence.

A chi-square distribution is a continuous probability distribution. The shape of a chi-square distribution depends on its degrees of freedom, k. The mean of a chi-square distribution is equal to its degrees of freedom (k) and the variance is 2k. The range is 0 to ∞.

As the degrees of freedom (k) increase, the chi-square distribution goes from a downward curve to a hump shape. As the degrees of freedom increase further, the hump goes from being strongly right-skewed to being approximately normal.

To find the quartiles of a probability distribution, you can use the distribution’s quantile function.

You can use the quantile() function to find quartiles in R. If your data is called “data”, then “quantile(data, probs = c(.25, .5, .75), type = 1)” will return the three quartiles.

You can use the QUARTILE() function to find quartiles in Excel. If your data is in column A, then click any blank cell and type “=QUARTILE(A:A,1)” for the first quartile, “=QUARTILE(A:A,2)” for the second quartile, and “=QUARTILE(A:A,3)” for the third quartile.

You can use the PEARSON() function to calculate the Pearson correlation coefficient in Excel. If your variables are in columns A and B, then click any blank cell and type “=PEARSON(A:A,B:B)”.

Excel has no function to directly test the significance of the correlation.

You can use the cor() function to calculate the Pearson correlation coefficient in R. To test the significance of the correlation, you can use the cor.test() function.

You should use the Pearson correlation coefficient when (1) the relationship is linear and (2) both variables are quantitative and (3) normally distributed and (4) have no outliers.

The Pearson correlation coefficient (r) is the most common way of measuring a linear correlation. It is a number between –1 and 1 that measures the strength and direction of the relationship between two variables.
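As a rough illustration of what the Excel and R functions compute, the sketch below implements the usual textbook formula for Pearson's r (the sum of cross-products of deviations divided by the square root of the product of the sums of squared deviations). The function name `pearson_r` and the sample data are assumptions for this example.

```python
import math


def pearson_r(x, y):
    """Return the Pearson correlation coefficient of two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from the means
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each variable
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


# Hypothetical data: hours studied and quiz scores
hours = [1, 2, 3, 4, 5]
quiz_scores = [2, 4, 5, 4, 5]
print(round(pearson_r(hours, quiz_scores), 3))
```

The function divides by zero if either variable is constant, which matches the fact that the correlation is undefined in that case.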

This table summarizes the most important differences between normal distributions and Poisson distributions:

| Characteristic | Normal | Poisson |
|---|---|---|
| Continuous or discrete | Continuous | Discrete |
| Parameter | Mean (µ) and standard deviation (σ) | Lambda (λ) |
| Shape | Bell-shaped | Depends on λ |
| Symmetry | Symmetrical | Asymmetrical (right-skewed). As λ increases, the asymmetry decreases. |
| Range | −∞ to ∞ | 0 to ∞ |

When the mean of a Poisson distribution is large (>10), it can be approximated by a normal distribution.

In the Poisson distribution formula, lambda (λ) is the mean number of events within a given interval of time or space. For example, λ = 0.748 floods per year.

The e in the Poisson distribution formula stands for the number 2.718 (rounded to three decimal places). This number is called Euler’s number. You can simply substitute e with 2.718 when you’re calculating a Poisson probability. Euler’s number is a very useful constant and is especially important in calculus.
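The formula itself is not reproduced on this page, so the sketch below assumes the standard Poisson probability mass function, P(X = k) = λ^k · e^(−λ) / k!. It uses λ = 0.748 floods per year from the example above and lets `math.exp` supply e at full precision instead of the rounded 2.718.

```python
import math


def poisson_pmf(k, lam):
    """Probability of exactly k events when the mean number of events is lam."""
    return lam ** k * math.exp(-lam) / math.factorial(k)


lam = 0.748  # mean number of floods per year, from the example above

# Probability of 0, 1, 2, or 3 floods in a given year
for k in range(4):
    print(k, round(poisson_pmf(k, lam), 4))
```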

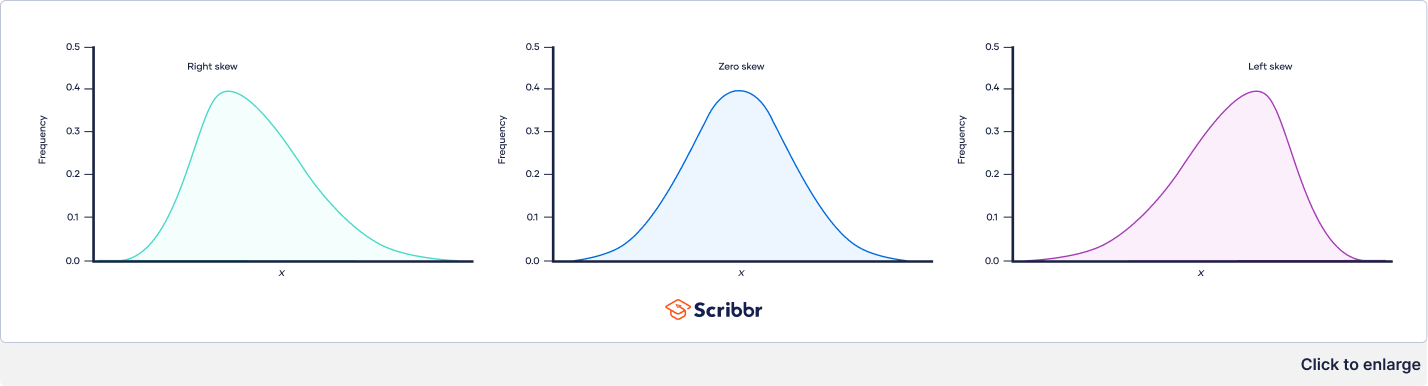

The three types of skewness are:

- Right skew (also called positive skew). A right-skewed distribution is longer on the right side of its peak than on its left.

- Left skew (also called negative skew). A left-skewed distribution is longer on the left side of its peak than on its right.

- Zero skew. A distribution with zero skew is symmetrical: its left and right sides are mirror images.

Skewness and kurtosis are both important measures of a distribution’s shape.

- Skewness measures the asymmetry of a distribution.

- Kurtosis measures the heaviness of a distribution’s tails relative to a normal distribution.
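Both shape measures can be sketched from central moments. The code below uses the simple moment-based (population) definitions, which is an assumption on our part: statistical packages often apply small-sample corrections, so their output may differ slightly. The function names and the two data sets are made up for illustration.

```python
def central_moment(data, k):
    """Average of the k-th powers of the deviations from the mean."""
    mean = sum(data) / len(data)
    return sum((x - mean) ** k for x in data) / len(data)


def skewness(data):
    """Third central moment divided by the variance raised to the power 1.5."""
    return central_moment(data, 3) / central_moment(data, 2) ** 1.5


def excess_kurtosis(data):
    """Fourth central moment over the squared variance, minus 3 (the normal value)."""
    return central_moment(data, 4) / central_moment(data, 2) ** 2 - 3


symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 2, 2, 3, 10]

print(skewness(symmetric), skewness(right_skewed))
print(excess_kurtosis(symmetric))
```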

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

The alternative hypothesis is often abbreviated as Hₐ or H₁. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

The null hypothesis is often abbreviated as H₀. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The t distribution was first described by statistician William Sealy Gosset under the pseudonym “Student.”

To calculate a confidence interval of a mean using the critical value of t, follow these four steps:

- Choose the significance level based on your desired confidence level. The most common confidence level is 95%, which corresponds to α = .05 in the two-tailed t table.

- Find the critical value of t in the two-tailed t table.

- Multiply the critical value of t by s / √n.

- Add this value to the mean to calculate the upper limit of the confidence interval, and subtract this value from the mean to calculate the lower limit.
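The four steps above can be sketched as a small function. The critical value is passed in by hand, as if read from a t table; the sample figures (mean 50, s = 10, n = 25) are hypothetical, and 2.064 is the two-tailed critical value for α = .05 with 24 degrees of freedom.

```python
import math


def confidence_interval(mean, s, n, t_critical):
    """Return (lower, upper) limits: mean -/+ t_critical * s / sqrt(n)."""
    margin = t_critical * s / math.sqrt(n)
    return mean - margin, mean + margin


# Hypothetical sample: mean = 50, standard deviation = 10, n = 25 (df = 24)
lower, upper = confidence_interval(mean=50, s=10, n=25, t_critical=2.064)
print(round(lower, 3), round(upper, 3))
```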

To test a hypothesis using the critical value of t, follow these four steps:

- Calculate the t value for your sample.

- Find the critical value of t in the t table.

- Determine if the (absolute) t value is greater than the critical value of t.

- Reject the null hypothesis if the sample’s (absolute) t value is greater than the critical value of t. Otherwise, don’t reject the null hypothesis.

You can use the T.INV() function to find the critical value of t for one-tailed tests in Excel, and you can use the T.INV.2T() function for two-tailed tests.

You can use the qt() function to find the critical value of t in R. The function gives the critical value of t for the one-tailed test. If you want the critical value of t for a two-tailed test, divide the significance level by two.

You can use the RSQ() function to calculate R² in Excel. If your dependent variable is in column A and your independent variable is in column B, then click any blank cell and type “=RSQ(A:A,B:B)”.

You can use the summary() function to view the R² of a linear model in R. You will see the “R-squared” near the bottom of the output.

There are two formulas you can use to calculate the coefficient of determination (R²) of a simple linear regression.

The coefficient of determination (R²) is a number between 0 and 1 that measures how well a statistical model predicts an outcome. You can interpret the R² as the proportion of variation in the dependent variable that is predicted by the statistical model.
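The two formulas are not spelled out on this page. The sketch below assumes they are the two standard ones for simple linear regression: squaring the Pearson correlation coefficient (R² = r²), and one minus the ratio of the residual sum of squares to the total sum of squares (R² = 1 − RSS/TSS). The data are hypothetical.

```python
import math

# Hypothetical data for a simple linear regression of y on x
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

mean_x = sum(x) / len(x)
mean_y = sum(y) / len(y)
s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
s_xx = sum((a - mean_x) ** 2 for a in x)
s_yy = sum((b - mean_y) ** 2 for b in y)  # total sum of squares (TSS)

# Formula 1 (assumed): square the Pearson correlation coefficient
r = s_xy / math.sqrt(s_xx * s_yy)
r_squared_from_r = r ** 2

# Formula 2 (assumed): 1 - RSS / TSS, using the least-squares line
slope = s_xy / s_xx
intercept = mean_y - slope * mean_x
rss = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
r_squared_from_ss = 1 - rss / s_yy

print(round(r_squared_from_r, 3), round(r_squared_from_ss, 3))
```

For simple linear regression with an intercept, the two approaches agree, which the example data illustrate.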

There are three main types of missing data.

Missing completely at random (MCAR) data are randomly distributed across the variable and unrelated to other variables.

Missing at random (MAR) data are not randomly distributed but they are accounted for by other observed variables.

Missing not at random (MNAR) data systematically differ from the observed values.

To tidy up your missing data, your options usually include accepting, removing, or recreating the missing data.

- Acceptance: You leave your data as is

- Listwise or pairwise deletion: You delete all cases (participants) with missing data from analyses

- Imputation: You use other data to fill in the missing data

Missing data are important because, depending on the type, they can sometimes bias your results. This means your results may not be generalizable outside of your study because your data come from an unrepresentative sample.

Missing data, or missing values, occur when you don’t have data stored for certain variables or participants.

In any dataset, there’s usually some missing data. In quantitative research, missing values appear as blank cells in your spreadsheet.

There are two steps to calculating the geometric mean:

- Multiply all values together to get their product.

- Find the nth root of the product (n is the number of values).

Before calculating the geometric mean, note that:

- The geometric mean can only be found for positive values.

- If any value in the data set is zero, the geometric mean is zero.

The arithmetic mean is the most commonly used type of mean and is often referred to simply as “the mean.” While the arithmetic mean is based on adding and dividing values, the geometric mean multiplies and finds the root of values.

Even though the geometric mean is a less common measure of central tendency, it’s more accurate than the arithmetic mean for percentage change and positively skewed data. The geometric mean is often reported for financial indices and population growth rates.

The geometric mean is an average that multiplies all values and finds a root of the number. For a dataset with n numbers, you find the nth root of their product.
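A minimal sketch of the two-step calculation follows. The function name `geometric_mean` and the growth factors are assumptions for illustration; Python's standard library also offers `statistics.geometric_mean`, which is stricter and rejects zero as well as negative values.

```python
import math


def geometric_mean(values):
    """Multiply all values together, then take the n-th root of the product."""
    if not values:
        raise ValueError("at least one value is required")
    if any(v < 0 for v in values):
        raise ValueError("the geometric mean is not defined for negative values")
    product = math.prod(values)
    return product ** (1 / len(values))


print(geometric_mean([2, 8]))

# Hypothetical yearly growth factors: +10%, +20%, -10%
growth_factors = [1.10, 1.20, 0.90]
print(round(geometric_mean(growth_factors), 4))
```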

Outliers are extreme values that differ from most values in the dataset. You find outliers at the extreme ends of your dataset.

It’s best to remove outliers only when you have a sound reason for doing so.

Some outliers represent natural variations in the population, and they should be left as is in your dataset. These are called true outliers.

Other outliers are problematic and should be removed because they represent measurement errors, data entry or processing errors, or poor sampling.

You can choose from four main ways to detect outliers:

- Sorting your values from low to high and checking minimum and maximum values

- Visualizing your data with a box plot and looking for outliers

- Using the interquartile range to create fences for your data

- Using statistical procedures to identify extreme values
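The interquartile range approach from the list above can be sketched as follows. The multiplier of 1.5 is a widely used convention rather than something this page specifies, and the quartile method (`"inclusive"`) is likewise an assumption; other quartile conventions can move the fences slightly.

```python
import statistics


def iqr_outliers(data, k=1.5):
    """Return the values that fall outside the fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - k * iqr
    upper_fence = q3 + k * iqr
    return [x for x in data if x < lower_fence or x > upper_fence]


# Hypothetical data with one extreme value
values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(iqr_outliers(values))
```

Flagging a value this way only identifies a candidate; whether to remove it still depends on having a sound reason.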

Outliers can have a big impact on your statistical analyses and skew the results of any hypothesis test if they are inaccurate.

These extreme values can impact your statistical power as well, making it hard to detect a true effect if there is one.

No, the steepness or slope of the line isn’t related to the correlation coefficient value. The correlation coefficient only tells you how closely your data fit on a line, so two datasets with the same correlation coefficient can have very different slopes.

To find the slope of the line, you’ll need to perform a regression analysis.

Correlation coefficients always range between -1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.

These are the assumptions your data must meet if you want to use Pearson’s r:

- Both variables are on an interval or ratio level of measurement

- Data from both variables follow normal distributions

- Your data have no outliers

- Your data is from a random or representative sample

- You expect a linear relationship between the two variables

A correlation coefficient is a single number that describes the strength and direction of the relationship between your variables.

Different types of correlation coefficients might be appropriate for your data based on their levels of measurement and distributions. The Pearson product-moment correlation coefficient (Pearson’s r) is commonly used to assess a linear relationship between two quantitative variables.

There are various ways to improve power:

- Increase the potential effect size by manipulating your independent variable more strongly,

- Increase sample size,

- Increase the significance level (alpha),

- Reduce measurement error by increasing the precision and accuracy of your measurement devices and procedures,

- Use a one-tailed test instead of a two-tailed test for t tests and z tests.

A power analysis is a calculation that helps you determine a minimum sample size for your study. It’s made up of four main components. If you know or have estimates for any three of these, you can calculate the fourth component.

- Statistical power: the likelihood that a test will detect an effect of a certain size if there is one, usually set at 80% or higher.

- Sample size: the minimum number of observations needed to observe an effect of a certain size with a given power level.

- Significance level (alpha): the maximum risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Expected effect size: a standardized way of expressing the magnitude of the expected result of your study, usually based on similar studies or a pilot study.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

To reduce the Type I error probability, you can set a lower significance level.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is more likely to avoid a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

While statistical significance shows that an effect exists in a study, practical significance shows that the effect is large enough to be meaningful in the real world.

Statistical significance is denoted by p-values whereas practical significance is represented by effect sizes.

There are dozens of measures of effect sizes. The most common effect sizes are Cohen’s d and Pearson’s r. Cohen’s d measures the size of the difference between two groups while Pearson’s r measures the strength of the relationship between two variables.

Effect size tells you how meaningful the relationship between variables or the difference between groups is.

A large effect size means that a research finding has practical significance, while a small effect size indicates limited practical applications.

Using descriptive and inferential statistics, you can make two types of estimates about the population: point estimates and interval estimates.

- A point estimate is a single value estimate of a parameter. For instance, a sample mean is a point estimate of a population mean.

- An interval estimate gives you a range of values where the parameter is expected to lie. A confidence interval is the most common type of interval estimate.

Both types of estimates are important for gathering a clear idea of where a parameter is likely to lie.

Standard error and standard deviation are both measures of variability. The standard deviation reflects variability within a sample, while the standard error estimates the variability across samples of a population.

The standard error of the mean, or simply standard error, indicates how different the population mean is likely to be from a sample mean. It tells you how much the sample mean would vary if you were to repeat a study using new samples from within a single population.
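As a brief illustration, the standard error of the mean is commonly estimated as the sample standard deviation divided by the square root of the sample size (s / √n). The function name and the sample below are hypothetical.

```python
import math
import statistics


def standard_error(sample):
    """Estimate the standard error of the mean as s / sqrt(n)."""
    s = statistics.stdev(sample)  # sample standard deviation (n - 1 denominator)
    return s / math.sqrt(len(sample))


# Hypothetical sample of eight measurements
sample = [2, 4, 4, 4, 5, 5, 7, 9]
print(round(statistics.stdev(sample), 3), round(standard_error(sample), 3))
```

The standard deviation describes the spread of these eight values, while the smaller standard error describes how much their mean would be expected to vary from sample to sample.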

To figure out whether a given number is a parameter or a statistic, ask yourself the following:

- Does the number describe a whole, complete population where every member can be reached for data collection?

- Is it possible to collect data for this number from every member of the population in a reasonable time frame?

If the answer is yes to both questions, the number is likely to be a parameter. For small populations, data can be collected from the whole population and summarized in parameters.

If the answer is no to either of the questions, then the number is more likely to be a statistic.

The arithmetic mean is the most commonly used mean. It’s often simply called the mean or the average. But there are some other types of means you can calculate depending on your research purposes:

- Weighted mean: some values contribute more to the mean than others.

- Geometric mean: values are multiplied rather than summed up.

- Harmonic mean: reciprocals of values are used instead of the values themselves.
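The four types of mean can be compared side by side with Python’s standard `statistics` module. This is only a sketch: the values and weights are made up, and the weighted mean is computed by hand.

```python
import statistics

values = [2, 4, 8]
weights = [1, 1, 2]  # hypothetical weights: the last value counts double

arithmetic = statistics.mean(values)                                    # about 4.67
weighted = sum(v * w for v, w in zip(values, weights)) / sum(weights)   # 5.5
geometric = statistics.geometric_mean(values)                           # about 4.0
harmonic = statistics.harmonic_mean(values)                             # about 3.43
```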

You can find the mean, or average, of a data set in two simple steps:

- Find the sum of the values by adding them all up.

- Divide the sum by the number of values in the data set.

This method is the same whether you are dealing with sample or population data or positive or negative numbers.

The median is the most informative measure of central tendency for skewed distributions or distributions with outliers. For example, the median is often used as a measure of central tendency for income distributions, which are generally highly skewed.

Because the median only uses one or two values, it’s unaffected by extreme outliers or non-symmetric distributions of scores. In contrast, the mean and mode can vary in skewed distributions.

To find the median, first order your data. Then calculate the middle position based on n, the number of values in your data set.
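The order-then-find-the-middle procedure might look like the following sketch. The helper name `median` and the sample values are assumptions made for this example; Python’s built-in `statistics.median` does the same job.

```python
def median(values):
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]                       # odd n: the single middle value
    return (ordered[mid - 1] + ordered[mid]) / 2  # even n: mean of the two middle values

odd_case = median([7, 1, 5, 3, 9])        # 5
even_case = median([7, 1, 5, 3])          # 4.0
with_outlier = median([7, 1, 5, 3, 900])  # still 5
```

The last call shows why the median suits skewed data: replacing 9 with 900 leaves the result unchanged.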

A data set can often have no mode, one mode or more than one mode – it all depends on how many different values repeat most frequently.

Your data can be:

- without any mode

- unimodal, with one mode,

- bimodal, with two modes,

- trimodal, with three modes, or

- multimodal, with four or more modes.

To find the mode:

- If your data is numerical or quantitative, order the values from low to high.

- If it is categorical, sort the values by group, in any order.

Then you simply need to identify the most frequently occurring value.
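A small sketch of this counting approach is shown below. The helper `modes` is hypothetical, and it assumes one particular convention: a non-empty data set in which no value repeats is treated as having no mode.

```python
from collections import Counter

def modes(values):
    """Return every value tied for the highest frequency (empty list if nothing repeats)."""
    counts = Counter(values)
    highest = max(counts.values())
    if highest == 1:
        return []  # no value repeats, so there is no mode
    return sorted(v for v, c in counts.items() if c == highest)

no_mode = modes([1, 2, 3])                 # []
unimodal = modes([1, 2, 2, 3])             # [2]
bimodal = modes([1, 1, 2, 2, 3])           # [1, 2]
categorical = modes(["bus", "car", "bus"]) # ['bus']
```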

The interquartile range is the best measure of variability for skewed distributions or data sets with outliers. Because it’s based on values that come from the middle half of the distribution, it’s unlikely to be influenced by outliers.

The two most common methods for calculating interquartile range are the exclusive and inclusive methods.

The exclusive method excludes the median when identifying Q1 and Q3, while the inclusive method includes the median as a value in the data set in identifying the quartiles.

For each of these methods, you’ll need different procedures for finding the median, Q1 and Q3 depending on whether your sample size is even- or odd-numbered. The exclusive method works best for even-numbered sample sizes, while the inclusive method is often used with odd-numbered sample sizes.
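One way to sketch the two methods is to split the ordered data into a lower and an upper half and take the median of each half. For an odd-numbered sample, the exclusive method leaves the overall median out of both halves and the inclusive method puts it in both. The function names and data are hypothetical; statistical packages may use slightly different quartile definitions.

```python
import statistics

def quartiles(values, method="exclusive"):
    ordered = sorted(values)
    n = len(ordered)
    half = n // 2
    if n % 2 == 0:
        # Even n: the data split cleanly into two halves
        lower, upper = ordered[:half], ordered[half:]
    elif method == "exclusive":
        # Odd n, exclusive: drop the median from both halves
        lower, upper = ordered[:half], ordered[half + 1:]
    else:
        # Odd n, inclusive: keep the median in both halves
        lower, upper = ordered[:half + 1], ordered[half:]
    return statistics.median(lower), statistics.median(upper)

def iqr(values, method="exclusive"):
    q1, q3 = quartiles(values, method)
    return q3 - q1

data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
exclusive_iqr = iqr(data, "exclusive")  # Q1 = 3, Q3 = 9, IQR = 6
inclusive_iqr = iqr(data, "inclusive")  # Q1 = 3.5, Q3 = 8.5, IQR = 5.0
```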

While the range gives you the spread of the whole data set, the interquartile range gives you the spread of the middle half of a data set.

Homoscedasticity, or homogeneity of variances, is an assumption of equal or similar variances in different groups being compared.

This is an important assumption of parametric statistical tests because they are sensitive to any dissimilarities. Uneven variances in samples result in biased and skewed test results.

Statistical tests such as variance tests or the analysis of variance (ANOVA) use sample variance to assess group differences of populations. They use the variances of the samples to assess whether the populations they come from significantly differ from each other.

Variance is the average squared deviations from the mean, while standard deviation is the square root of this number. Both measures reflect variability in a distribution, but their units differ:

- Standard deviation is expressed in the same units as the original values (e.g., minutes or meters).

- Variance is expressed in squared units (e.g., minutes squared or meters squared).

Although the units of variance are harder to intuitively understand, variance is important in statistical tests.

The empirical rule, or the 68-95-99.7 rule, tells you where most of the values lie in a normal distribution:

- Around 68% of values are within 1 standard deviation of the mean.

- Around 95% of values are within 2 standard deviations of the mean.

- Around 99.7% of values are within 3 standard deviations of the mean.

The empirical rule is a quick way to get an overview of your data and check for any outliers or extreme values that don’t follow this pattern.
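The three percentages can be checked against the standard normal distribution with Python’s `statistics.NormalDist`. The helper name `share_within` is an assumption for this sketch.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)

def share_within(k):
    """Proportion of a normal distribution within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)

coverage = {k: share_within(k) for k in (1, 2, 3)}
# roughly {1: 0.683, 2: 0.954, 3: 0.997}
```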

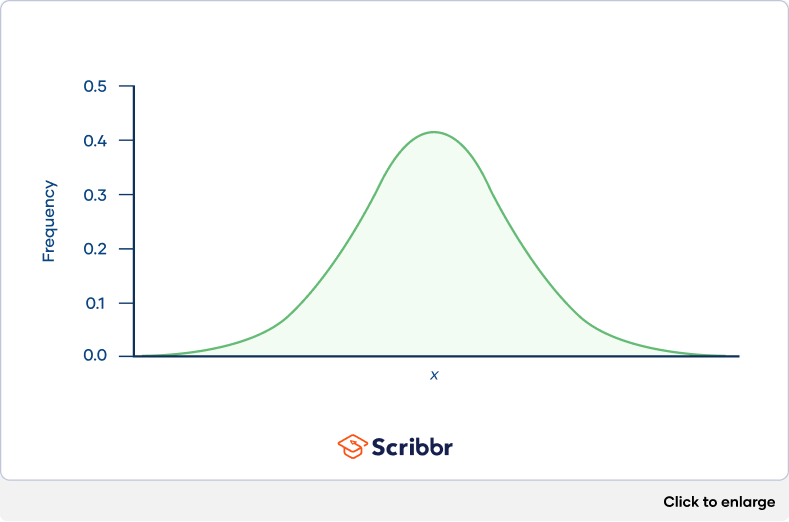

In a normal distribution, data are symmetrically distributed with no skew. Most values cluster around a central region, with values tapering off as they go further away from the center.

The measures of central tendency (mean, mode, and median) are exactly the same in a normal distribution.

The standard deviation is the average amount of variability in your data set. It tells you, on average, how far each score lies from the mean.

In normal distributions, a high standard deviation means that values are generally far from the mean, while a low standard deviation indicates that values are clustered close to the mean.

The range cannot be negative. Because the range formula subtracts the lowest number from the highest number, the range is always zero or a positive number.

In statistics, the range is the spread of your data from the lowest to the highest value in the distribution. It is the simplest measure of variability.

While central tendency tells you where most of your data points lie, variability summarizes how far apart your points are from each other.

Data sets can have the same central tendency but different levels of variability, or vice versa. Together, they give you a complete picture of your data.

Variability is most commonly measured with the following descriptive statistics:

- Range: the difference between the highest and lowest values

- Interquartile range: the range of the middle half of a distribution

- Standard deviation: average distance from the mean

- Variance: average of squared distances from the mean

Variability tells you how far apart points lie from each other and from the center of a distribution or a data set.

Variability is also referred to as spread, scatter or dispersion.

While interval and ratio data can both be categorized, ranked, and have equal spacing between adjacent values, only ratio scales have a true zero.

For example, temperature in Celsius or Fahrenheit is at an interval scale because zero is not the lowest possible temperature. In the Kelvin scale, a ratio scale, zero represents a total lack of thermal energy.

A critical value is the value of the test statistic which defines the upper and lower bounds of a confidence interval, or which defines the threshold of statistical significance in a statistical test. It describes how far from the mean of the distribution you have to go to cover a certain amount of the total variation in the data (i.e. 90%, 95%, 99%).

If you are constructing a 95% confidence interval and are using a threshold of statistical significance of p = 0.05, then your critical value will be identical in both cases.
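For a test statistic that follows the standard normal (z) distribution, two-sided critical values can be looked up with `statistics.NormalDist`, as in the sketch below. The helper name `z_critical` is hypothetical. Critical values from a t-distribution would be somewhat larger and are not covered here, since the standard library has no t-distribution.

```python
from statistics import NormalDist

def z_critical(confidence):
    """Two-sided critical value of the standard normal distribution."""
    alpha = 1 - confidence
    return NormalDist().inv_cdf(1 - alpha / 2)

critical_values = {level: z_critical(level) for level in (0.90, 0.95, 0.99)}
# roughly {0.90: 1.645, 0.95: 1.960, 0.99: 2.576}
```

The same value, about 1.96, serves both a 95% confidence interval and a two-tailed test at a significance threshold of 0.05.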

The t-distribution gives more probability to observations in the tails of the distribution than the standard normal distribution (a.k.a. the z-distribution).

In this way, the t-distribution is more conservative than the standard normal distribution: to reach the same level of confidence or statistical significance, you will need to include a wider range of the data.

A t-score (a.k.a. a t-value) is equivalent to the number of standard deviations away from the mean of the t-distribution.

The t-score is the test statistic used in t-tests and regression tests. It can also be used to describe how far from the mean an observation is when the data follow a t-distribution.

The t-distribution is a way of describing a set of observations where most observations fall close to the mean, and the rest of the observations make up the tails on either side. It resembles the normal distribution but has heavier tails, and it is used for smaller sample sizes, where the variance in the data is unknown.

The t-distribution forms a bell curve when plotted on a graph. It can be described mathematically using the mean and the standard deviation.

In statistics, ordinal and nominal variables are both considered categorical variables.

Even though ordinal data can sometimes be numerical, not all mathematical operations can be performed on them.

Ordinal data has two characteristics:

- The data can be classified into different categories within a variable.

- The categories have a natural ranked order.

However, unlike with interval data, the distances between the categories are uneven or unknown.

Nominal and ordinal are two of the four levels of measurement. Nominal level data can only be classified, while ordinal level data can be classified and ordered.

Nominal data is data that can be labelled or classified into mutually exclusive categories within a variable. These categories cannot be ordered in a meaningful way.

For example, for the nominal variable of preferred mode of transportation, you may have the categories of car, bus, train, tram or bicycle.

If your confidence interval for a difference between groups includes zero, that means that if you run your experiment again you have a good chance of finding no difference between groups.

If your confidence interval for a correlation or regression includes zero, that means that if you run your experiment again there is a good chance of finding no correlation in your data.

In both of these cases, you will also find a high p-value when you run your statistical test, meaning that your results could have occurred under the null hypothesis of no relationship between variables or no difference between groups.

If you want to calculate a confidence interval around the mean of data that is not normally distributed, you have two choices:

- Find a distribution that matches the shape of your data and use that distribution to calculate the confidence interval.

- Perform a transformation on your data to make it fit a normal distribution, and then find the confidence interval for the transformed data.

The standard normal distribution, also called the z-distribution, is a special normal distribution where the mean is 0 and the standard deviation is 1.

Any normal distribution can be converted into the standard normal distribution by turning the individual values into z-scores. In a z-distribution, z-scores tell you how many standard deviations away from the mean each value lies.
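Converting values to z-scores means subtracting the mean and dividing by the standard deviation. The sketch below assumes the data are treated as a whole population (so it uses `statistics.pstdev`); the helper name and the scores are invented for illustration.

```python
import statistics

def z_scores(values):
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population SD; use statistics.stdev for a sample
    return [(v - mean) / sd for v in values]

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # mean 5, population SD 2
standardized = z_scores(scores)
# [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```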

The z-score and t-score (aka z-value and t-value) show how many standard deviations away from the mean of the distribution you are, assuming your data follow a z-distribution or a t-distribution.

These scores are used in statistical tests to show how far from the mean of the predicted distribution your statistical estimate is. If your test produces a z-score of 2.5, this means that your estimate is 2.5 standard deviations from the predicted mean.

The predicted mean and distribution of your estimate are generated by the null hypothesis of the statistical test you are using. The more standard deviations away from the predicted mean your estimate is, the less likely it is that the estimate could have occurred under the null hypothesis.

To calculate the confidence interval, you need to know:

- The point estimate you are constructing the confidence interval for

- The critical values for the test statistic

- The standard deviation of the sample

- The sample size

Then you can plug these components into the confidence interval formula that corresponds to your data. The formula depends on the type of estimate (e.g. a mean or a proportion) and on the distribution of your data.
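As one possible instance of such a formula, the sketch below builds a confidence interval around a mean from the four components listed above. It assumes a z critical value, which is reasonable for larger samples; smaller samples would normally call for a t critical value instead. The function name and the numbers are hypothetical.

```python
import math
from statistics import NormalDist

def confidence_interval(point_estimate, sample_sd, n, confidence=0.95):
    """Interval of the form: estimate ± critical value × standard error."""
    critical = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = critical * sample_sd / math.sqrt(n)
    return point_estimate - margin, point_estimate + margin

# Hypothetical study: mean of 50, standard deviation of 12, 36 observations
lower, upper = confidence_interval(point_estimate=50, sample_sd=12, n=36)
# roughly (46.08, 53.92)
```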

The confidence level is the percentage of times you expect to get close to the same estimate if you run your experiment again or resample the population in the same way.

The confidence interval consists of the upper and lower bounds of the estimate you expect to find at a given level of confidence.

For example, if you are estimating a 95% confidence interval around the mean proportion of female babies born every year based on a random sample of babies, you might find an upper bound of 0.56 and a lower bound of 0.48. These are the upper and lower bounds of the confidence interval. The confidence level is 95%.

The mean is the most frequently used measure of central tendency because it uses all values in the data set to give you an average.

For data from skewed distributions, the median is better than the mean because it isn’t influenced by extremely large values.

The mode is the only measure you can use for nominal or categorical data that can’t be ordered.

The measures of central tendency you can use depend on the level of measurement of your data.

- For a nominal level, you can only use the mode to find the most frequent value.

- For an ordinal level or ranked data, you can also use the median to find the value in the middle of your data set.

- For interval or ratio levels, in addition to the mode and median, you can use the mean to find the average value.

Measures of central tendency help you find the middle, or the average, of a data set.

The 3 most common measures of central tendency are the mean, median and mode.

- The mode is the most frequent value.

- The median is the middle number in an ordered data set.

- The mean is the sum of all values divided by the total number of values.

Some variables have fixed levels. For example, gender and ethnicity are always nominal level data because they cannot be ranked.

However, for other variables, you can choose the level of measurement. For example, income is a variable that can be recorded on an ordinal or a ratio scale:

- At an ordinal level, you could create 5 income groupings and code the incomes that fall within them from 1–5.

- At a ratio level, you would record exact numbers for income.

If you have a choice, the ratio level is always preferable because you can analyze data in more ways. The higher the level of measurement, the more precise your data is.

The level at which you measure a variable determines how you can analyze your data.

Depending on the level of measurement, you can perform different descriptive statistics to get an overall summary of your data and inferential statistics to see if your results support or refute your hypothesis.

Levels of measurement tell you how precisely variables are recorded. There are 4 levels of measurement, which can be ranked from low to high:

- Nominal: the data can only be categorized.

- Ordinal: the data can be categorized and ranked.

- Interval: the data can be categorized and ranked, and evenly spaced.

- Ratio: the data can be categorized, ranked, evenly spaced and has a natural zero.

No, a low p-value does not prove that your alternative hypothesis is true. The p-value only tells you how likely the data you have observed is to have occurred under the null hypothesis.

If the p-value is below your threshold of significance (typically p < 0.05), then you can reject the null hypothesis, but this does not necessarily mean that your alternative hypothesis is true.

The alpha value, or the threshold for statistical significance, is arbitrary – which value you use depends on your field of study.

In most cases, researchers use an alpha of 0.05, which means that there is a less than 5% chance that the data being tested could have occurred under the null hypothesis.

P-values are usually automatically calculated by the program you use to perform your statistical test. They can also be estimated using p-value tables for the relevant test statistic.

P-values are calculated from the null distribution of the test statistic. They tell you how often a test statistic is expected to occur under the null hypothesis of the statistical test, based on where it falls in the null distribution.

If the test statistic is far from the mean of the null distribution, then the p-value will be small, showing that the test statistic is not likely to have occurred under the null hypothesis.
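To see this relationship in numbers, the sketch below converts a z test statistic into a two-tailed p-value, assuming the null distribution is the standard normal. The helper name is hypothetical; other tests use other null distributions (t, F, chi-square and so on).

```python
from statistics import NormalDist

def two_tailed_p_value(z):
    """Probability of a z-score at least this far from 0, in either direction."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

p_far = two_tailed_p_value(2.5)   # about 0.012: far from the null mean, small p-value
p_near = two_tailed_p_value(0.5)  # about 0.617: close to the null mean, large p-value
```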

A p-value, or probability value, is a number describing how likely it is that your data would have occurred under the null hypothesis of your statistical test.

The test statistic you use will be determined by the statistical test.

You can choose the right statistical test by looking at what type of data you have collected and what type of relationship you want to test.

The test statistic will change based on the number of observations in your data, how variable your observations are, and how strong the underlying patterns in the data are.

For example, if one data set has higher variability while another has lower variability, the first data set will produce a test statistic closer to the null hypothesis, even if the true correlation between two variables is the same in either data set.

The formula for the test statistic depends on the statistical test being used.

Generally, the test statistic is calculated as the pattern in your data (i.e. the correlation between variables or difference between groups) divided by the variance in the data (i.e. the standard deviation).

- Univariate statistics summarize only one variable at a time.

- Bivariate statistics compare two variables.

- Multivariate statistics compare more than two variables.

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each response.

- Measures of variability show you the spread or dispersion of your dataset.

Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

In statistics, model selection is a process researchers use to compare the relative value of different statistical models and determine which one is the best fit for the observed data.

The Akaike information criterion is one of the most common methods of model selection. AIC weights the ability of the model to predict the observed data against the number of parameters the model requires to reach that level of precision.

AIC model selection can help researchers find a model that explains the observed variation in their data while avoiding overfitting.

In statistics, a model is the collection of one or more independent variables and their predicted interactions that researchers use to try to explain variation in their dependent variable.

You can test a model using a statistical test. To compare how well different models fit your data, you can use Akaike’s information criterion for model selection.

The Akaike information criterion is calculated from the maximum log-likelihood of the model and the number of parameters (K) used to reach that likelihood. The AIC function is 2K – 2(log-likelihood).

Lower AIC values indicate a better-fit model, and a model whose AIC is more than 2 units lower than that of the model it is being compared to (a delta-AIC of more than 2) is considered significantly better.
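The AIC formula is simple enough to sketch directly. In the example below, the log-likelihoods and parameter counts are invented; in practice they would come from fitted models.

```python
def aic(log_likelihood, k):
    """Akaike information criterion: 2K - 2(log-likelihood)."""
    return 2 * k - 2 * log_likelihood

# Hypothetical fitted models: maximum log-likelihood and number of parameters
simple_model = aic(log_likelihood=-120.0, k=3)   # 246.0
complex_model = aic(log_likelihood=-119.5, k=5)  # 249.0
delta_aic = complex_model - simple_model         # 3.0
```

Here the complex model fits slightly better (higher log-likelihood), but the penalty for its two extra parameters outweighs the gain, so the simpler model ends up with the lower AIC.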

The Akaike information criterion is a mathematical test used to evaluate how well a model fits the data it is meant to describe. It penalizes models which use more independent variables (parameters) as a way to avoid over-fitting.

AIC is most often used to compare the relative goodness-of-fit among different models under consideration and to then choose the model that best fits the data.

A factorial ANOVA is any ANOVA that uses more than one categorical independent variable. A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.
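For a one-way ANOVA, the F statistic can be computed by hand as sketched below. The function name and the three small groups are hypothetical; statistical software would also report the matching p-value.

```python
import statistics

def f_statistic(*groups):
    """One-way ANOVA F: between-group mean square over within-group mean square."""
    all_values = [v for g in groups for v in g]
    grand_mean = statistics.mean(all_values)
    k, n = len(groups), len(all_values)
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean
    ss_within = sum((v - statistics.mean(g)) ** 2 for g in groups for v in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within

# Hypothetical outcome values for three groups
group_a = [4, 5, 6]
group_b = [6, 7, 8]
group_c = [8, 9, 10]
f_value = f_statistic(group_a, group_b, group_c)  # 12.0
```

The resulting value would then be compared with the critical F value for 2 and 6 degrees of freedom at the chosen alpha.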

The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master’s), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

Multiple linear regression is a regression model that estimates the relationship between a quantitative dependent variable and two or more independent variables using a straight line.

Linear regression most often uses mean-square error (MSE) to calculate the error of the model. MSE is calculated by:

- measuring the distance of the observed y-values from the predicted y-values at each value of x;

- squaring each of these distances;

- calculating the mean of all the squared distances.

Linear regression fits a line to the data by finding the regression coefficient that results in the smallest MSE.
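The sketch below fits a straight line to a tiny data set using the standard closed-form least-squares solution for one independent variable, then computes the MSE by following the three steps above. The function names and data are assumptions made for this example.

```python
import statistics

def fit_line(x, y):
    """Least-squares slope and intercept for one independent variable."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_squared_error(x, y, slope, intercept):
    # Distance of each observed y from its predicted y, squared, then averaged
    residuals = [b - (intercept + slope * a) for a, b in zip(x, y)]
    return sum(r ** 2 for r in residuals) / len(residuals)

x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept = fit_line(x, y)                 # about 0.6 and 2.2
mse = mean_squared_error(x, y, slope, intercept)  # about 0.48
```

Any other slope or intercept would give a larger MSE on the same data, which is what "smallest MSE" means in practice.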

Simple linear regression is a regression model that estimates the relationship between one independent variable and one dependent variable using a straight line. Both variables should be quantitative.

For example, the relationship between temperature and the expansion of mercury in a thermometer can be modeled using a straight line: as temperature increases, the mercury expands. This linear relationship is so certain that we can use mercury thermometers to measure temperature.

A regression model is a statistical model that estimates the relationship between one dependent variable and one or more independent variables using a line (or a plane in the case of two or more independent variables).

A regression model can be used when the dependent variable is quantitative, except in the case of logistic regression, where the dependent variable is binary.

A t-test should not be used to measure differences among more than two groups, because the error structure for a t-test will underestimate the actual error when many groups are being compared.

If you want to compare the means of several groups at once, it’s best to use another statistical test such as ANOVA or a post-hoc test.

A one-sample t-test is used to compare a single population to a standard value (for example, to determine whether the average lifespan of a specific town is different from the country average).

A paired t-test is used to compare a single population before and after some experimental intervention or at two different points in time (for example, measuring student performance on a test before and after being taught the material).

A t-test measures the difference in group means divided by the pooled standard error of the two group means.

In this way, it calculates a number (the t-value) illustrating the magnitude of the difference between the two group means being compared, and estimates the likelihood that this difference exists purely by chance (p-value).
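A two-sample t-value with a pooled standard error can be sketched as follows. This version assumes the two groups have similar variances; the function name and the data are hypothetical, and the p-value would be read from a t-distribution with n_a + n_b - 2 degrees of freedom.

```python
import math
import statistics

def two_sample_t(group_a, group_b):
    """Difference in group means divided by the pooled standard error."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (((n_a - 1) * statistics.variance(group_a)
                   + (n_b - 1) * statistics.variance(group_b))
                  / (n_a + n_b - 2))
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / standard_error

# Hypothetical measurements for two independent groups
group_a = [5, 6, 7, 8, 9]
group_b = [3, 4, 5, 6, 7]
t_value = two_sample_t(group_a, group_b)  # 2.0, with 8 degrees of freedom
```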

Your choice of t-test depends on whether you are studying one group or two groups, and whether you care about the direction of the difference in group means.

If you are studying one group, use a paired t-test to compare the group mean over time or after an intervention, or use a one-sample t-test to compare the group mean to a standard value. If you are studying two groups, use a two-sample t-test.

If you want to know only whether a difference exists, use a two-tailed test. If you want to know if one group mean is greater or less than the other, use a left-tailed or right-tailed one-tailed test.

A t-test is a statistical test that compares the means of two samples. It is used in hypothesis testing, with a null hypothesis that the difference in group means is zero and an alternate hypothesis that the difference in group means is different from zero.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

A test statistic is a number calculated by a statistical test. It describes how far your observed data is from the null hypothesis of no relationship between variables or no difference among sample groups.

The test statistic tells you how different two or more groups are from the overall population mean, or how different a linear slope is from the slope predicted by a null hypothesis. Different test statistics are used in different statistical tests.

Statistical tests commonly assume that:

- the data are normally distributed

- the groups that are being compared have similar variance

- the data are independent

If your data does not meet these assumptions, you might still be able to use a nonparametric statistical test, which has fewer requirements but also makes weaker inferences.

What Is Statistical Analysis?

Statistical analysis helps you pull meaningful insights from data. The process involves working with data and deducing numbers to tell quantitative stories.

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variabilities

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can determine trends in the data by calculating your data set’s mean or median. You can also analyze the variation between different data points from the mean to get the standard deviation. Furthermore, to test the validity of your statistical analysis conclusions, you can use hypothesis testing techniques, like the p-value, to determine the likelihood that the observed variability could have occurred by chance.

Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

Within the descriptive analysis branch, there are two main types: measures of central tendency (i.e. mean, median and mode) and measures of dispersion or variation (i.e. variance, standard deviation and range).

For example, you can calculate the average exam results in a class using central tendency or, in particular, the mean. In that case, you’d sum all student results and divide by the number of tests. You can also calculate the data set’s spread by calculating the variance. To calculate the variance, subtract each exam result in the data set from the mean, square the answer, add everything together and divide by the number of tests.
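The exam example can be written out as a short sketch. The scores are invented, and the variance here divides by the number of results, as described above (a sample variance would divide by one less than that).

```python
def mean(values):
    return sum(values) / len(values)

def variance(values):
    """Average squared deviation from the mean (population variance)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)

exam_results = [70, 80, 90, 60, 100]  # hypothetical scores

average = mean(exam_results)     # 80.0
spread = variance(exam_results)  # 200.0
```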

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tests include Z-test, F-Test, ANOVA test and confidence intervals. On the other hand, regression analysis primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis but the most popular ones include linear and logistic regression.

Statistical Analysis Steps

In the era of big data and data science, there is a rising demand for a more problem-driven approach. As a result, we must approach statistical analysis holistically. We may divide the entire process into five different and significant stages by using the well-known PPDAC model of statistics: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You can check whether data is available or if you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs

Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

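Statistical analysis can help you investigate possible causal relationships, for example when you’re testing whether two variables in an experiment are related. Keep in mind that an association between two variables does not, on its own, establish that one causes the other.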

Make Data-Informed Decisions

Businesses are constantly looking for ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.

What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, there may still be known or unknown problems that can affect the results. Statistical analysis is also not a one-size-fits-all process. If you want to get good results, you need to know what you’re doing, and it can take a lot of time to figure out which type of statistical analysis will work best for your situation.

You should also remember that conclusions drawn from statistical analysis aren’t guaranteed to be correct. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are often approximate because testing for all factors affecting an observation is impossible.

Comprehensive guidelines for appropriate statistical analysis methods in research

Affiliations.

- 1 Department of Anesthesiology and Pain Medicine, Daegu Catholic University School of Medicine, Daegu, Korea.

- 2 Department of Medical Statistics, Daegu Catholic University School of Medicine, Daegu, Korea.

- PMID: 39210669

- DOI: 10.4097/kja.24016

Background: The selection of statistical analysis methods in research is a critical and nuanced task that requires a scientific and rational approach. Aligning the chosen method with the specifics of the research design and hypothesis is paramount, as it can significantly impact the reliability and quality of the research outcomes.

Methods: This study explores a comprehensive guideline for systematically choosing appropriate statistical analysis methods, with a particular focus on the statistical hypothesis testing stage and categorization of variables. By providing a detailed examination of these aspects, this study aims to provide researchers with a solid foundation for informed methodological decision making. Moving beyond theoretical considerations, this study delves into the practical realm by examining the null and alternative hypotheses tailored to specific statistical methods of analysis. The dynamic relationship between these hypotheses and statistical methods is thoroughly explored, and a carefully crafted flowchart for selecting the statistical analysis method is proposed.

Results: Based on the flowchart, we examined whether exemplary research papers appropriately used statistical methods that align with the variables chosen and hypotheses built for the research. This iterative process ensures the adaptability and relevance of this flowchart across diverse research contexts, contributing to both theoretical insights and tangible tools for methodological decision-making.

Conclusions: This study emphasizes the importance of a scientific and rational approach for the selection of statistical analysis methods. By providing comprehensive guidelines, insights into the null and alternative hypotheses, and a practical flowchart, this study aims to empower researchers and enhance the overall quality and reliability of scientific studies.

Keywords: Algorithms; Biostatistics; Data analysis; Guideline; Statistical data interpretation; Statistical model.

Understanding statistical analysis: A beginner’s guide to data interpretation

Statistical analysis is a crucial part of research in many fields. It is used to analyze data and draw conclusions about the population being studied. However, statistical analysis can be complex and intimidating for beginners. In this article, we will provide a beginner’s guide to statistical analysis and data interpretation, with the aim of helping researchers understand the basics of statistical methods and their application in research.

What is Statistical Analysis?

Statistical analysis is a collection of methods used to analyze data. These methods are used to summarize data, make predictions, and draw conclusions about the population being studied. Statistical analysis is used in a variety of fields, including medicine, social sciences, economics, and more.

Statistical analysis can be broadly divided into two categories: descriptive statistics and inferential statistics. Descriptive statistics are used to summarize data, while inferential statistics are used to draw conclusions about the population based on a sample of data.

Descriptive Statistics

Descriptive statistics summarize data with measures such as the mean, median, mode, and standard deviation. These measures provide information about the central tendency and variability of the data. For example, the mean provides information about the average value of the data, while the standard deviation provides information about the variability of the data.
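The measures named above can be computed with Python's built-in statistics module. The following is a minimal sketch on made-up data that deliberately includes one unusually large value, to show how differently the mean and the median respond to it.

```python
from statistics import mean, median, mode, stdev

# Hypothetical data: weekly clinic visits for seven patients (illustrative only).
# The last value is an intentional outlier.
visits = [3, 4, 4, 5, 6, 4, 30]

visits_mean = mean(visits)      # pulled upward by the outlier
visits_median = median(visits)  # middle value, barely affected by the outlier
visits_mode = mode(visits)      # most frequent value
visits_stdev = stdev(visits)    # sample standard deviation (divides by n - 1)

print("mean:", visits_mean)
print("median:", visits_median)
print("mode:", visits_mode)
print("standard deviation:", round(visits_stdev, 2))
```

Here the mean is twice the median, which is a common sign that a few extreme values are dominating the average.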

Inferential Statistics

Inferential statistics are used to draw conclusions about the population based on a sample of data. For example, a researcher might use inferential statistics to test whether there is a significant difference between two groups in a study.
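One simple way to test for a difference between two groups is a permutation test, sketched below. This is only one of several possible approaches, and the article does not prescribe it. The function name, the group scores, the number of permutations and the random seed are all assumptions made for illustration.

```python
import random

def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n_a = len(group_a)
    n_b = len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)

    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Randomly reassign the pooled values to two groups of the original sizes.
        rng.shuffle(pooled)
        shuffled_a = pooled[:n_a]
        shuffled_b = pooled[n_a:]
        difference = abs(sum(shuffled_a) / n_a - sum(shuffled_b) / n_b)
        if difference >= observed:
            at_least_as_extreme += 1

    # Share of random relabelings that differ as much as the real groups did.
    return at_least_as_extreme / n_permutations

# Hypothetical outcome scores for two study groups (illustrative data only).
treatment = [23, 25, 28, 30, 27, 26, 29, 31]
control = [20, 22, 21, 24, 23, 19, 25, 22]

p_value = permutation_test(treatment, control)
print("estimated p-value:", p_value)
```

If the estimated p-value falls below a threshold chosen in advance, commonly 0.05, the difference between the groups is usually called statistically significant.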

Statistical Analysis Techniques

There are many different statistical analysis techniques that can be used in research. Some of the most common techniques include:

Correlation Analysis: This involves analyzing the relationship between two or more variables.

Regression Analysis: This involves analyzing the relationship between a dependent variable and one or more independent variables.

T-Tests: This is a statistical test used to compare the means of two groups.

Analysis of Variance (ANOVA): This is a statistical test used to compare the means of three or more groups.

Chi-Square Test: This is a statistical test used to determine whether there is a significant association between two categorical variables.
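Three of the techniques listed above can be sketched in a few lines of plain Python. The function names and all data values below are hypothetical and chosen only for illustration. In practice a statistics library would normally be used, and it would also supply p-values, which this sketch leaves out along with t-tests and ANOVA.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def fit_line(x, y):
    """Least-squares slope and intercept for simple linear regression."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def chi_square_statistic(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if the two variables were independent.
            expected = row_totals[i] * column_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic

# Hypothetical data: hours studied and exam scores for five students.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
r = pearson_r(hours, scores)
slope, intercept = fit_line(hours, scores)

# Hypothetical 2x2 table: rows are two groups, columns are yes/no answers.
survey = [[30, 10], [20, 40]]
chi_square = chi_square_statistic(survey)

print(f"r = {r:.3f}, slope = {slope:.2f}, intercept = {intercept:.2f}")
print(f"chi-square = {chi_square:.2f}")
```

A correlation close to 1 indicates a strong positive linear relationship, the slope estimates how much the score changes per extra hour, and a larger chi-square statistic indicates a stronger departure from independence.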

Data Interpretation

Once data has been analyzed, it must be interpreted. This involves making sense of the data and drawing conclusions based on the results of the analysis. Data interpretation is a crucial part of statistical analysis, as it is used to draw conclusions and make recommendations based on the data.

When interpreting data, it is important to consider the context in which the data was collected. This includes factors such as the sample size, the sampling method, and the population being studied. It is also important to consider the limitations of the data and the statistical methods used.

Best Practices for Statistical Analysis

To ensure that statistical analysis is conducted correctly and effectively, there are several best practices that should be followed. These include:

Clearly define the research question: This is the foundation of the study and will guide the analysis.

Choose appropriate statistical methods: Different statistical methods are appropriate for different types of data and research questions.

Use reliable and valid data: The data used for analysis should be reliable and valid. This means that it should accurately represent the population being studied and be collected using appropriate methods.

Ensure that the data is representative: The sample used for analysis should be representative of the population being studied. This helps to ensure that the results of the analysis are applicable to the population.

Follow ethical guidelines: Researchers should follow ethical guidelines when conducting research. This includes obtaining informed consent from participants, protecting their privacy, and ensuring that the study does not cause harm.