- Open access

- Published: 02 August 2021

Social engineering in cybersecurity: a domain ontology and knowledge graph application examples

- Zuoguang Wang 1 , 2 ,

- Hongsong Zhu ORCID: orcid.org/0000-0003-3720-7403 1 , 2 ,

- Peipei Liu 1 , 2 &

- Limin Sun 1 , 2

Cybersecurity volume 4, Article number: 31 (2021)


Social engineering has posed a serious threat to cyberspace security. To protect against social engineering attacks, a fundamental task is to know what constitutes social engineering. This paper first develops a domain ontology of social engineering in cybersecurity and evaluates the ontology through its knowledge graph application. The domain ontology defines 11 concepts of core entities that significantly constitute or affect the social engineering domain, together with 22 kinds of relations describing how these entities relate to each other. It provides a formal and explicit knowledge schema to understand, analyze, reuse and share domain knowledge of social engineering. Furthermore, this paper builds a knowledge graph based on 15 social engineering attack incidents and scenarios. Seven knowledge graph application examples (in 6 analysis patterns) demonstrate that the ontology together with the knowledge graph is useful to 1) understand and analyze social engineering attack scenarios and incidents, 2) find the top-ranked social engineering threat elements (e.g. the most exploited human vulnerabilities and the most used attack mediums), 3) find potential social engineering threats to victims, 4) find potential targets for social engineering attackers, 5) find potential attack paths from a specific attacker to a specific target, and 6) analyze same-origin attacks.

Introduction

In the context of cybersecurity, social engineering describes a type of attack in which the attacker exploits human vulnerabilities (by means such as influence, persuasion, deception, manipulation and inducing) to breach the security goals (such as confidentiality, integrity, availability, controllability and auditability) of cyberspace elements (such as infrastructure, data, resource, user and operation). Succinctly, social engineering is a type of attack wherein the attacker exploits human vulnerability through social interaction to breach cyberspace security (Wang et al. 2020). Several distinctive features make social engineering a popular attack in the hacker community and a serious, universal and persistent threat to cybersecurity. 1) Compared to classical attacks such as brute-force password cracking and software vulnerability exploitation, social engineering exploits human vulnerabilities to bypass or break through security barriers, without having to defeat firewalls or antivirus software through deep coding. 2) In some attack scenarios, social engineering can be as simple as making a phone call and impersonating an insider to elicit classified information. 3) In past decades, defense has mainly focused on the digital domain and overlooked human factors in security; as security technology develops, classical attacks become harder and more and more attackers turn to social engineering. 4) Human vulnerabilities seem inevitable: no cyber system is free of reliance on humans or human factors, and these human factors are either obviously vulnerable or can largely be turned into security vulnerabilities by skilled attackers. Moreover, the social engineering threat is becoming increasingly serious as it evolves in the new technical and cyber environment.
With the wide application of Social Networking Sites (SNSs), Internet of Things (IoT), Industrial Internet, mobile communication and wearable devices, social engineering gains not only large amounts of sensitive information about people, networks and devices but also more attack channels. A large part of this information is open source, which simplifies information gathering for social engineering. Technologies such as machine learning and artificial intelligence make social engineering more efficient and automated. As a result, a large group of targets can be reached and specific victims can be carefully selected to craft more credible attacks. The spread of social engineering tools lowers the threat threshold. Loose office policies (bring your own device, remote office, etc.) weaken the area isolation between different security levels and create more attack opportunities. Targeted, large-scale, robotic, automated and advanced social engineering attacks are becoming possible (Wang et al. 2020).

To protect against social engineering, the fundamental task is to know what social engineering is, what entities significantly constitute or affect social engineering and how these entities relate to each other. The study (Wang et al. 2020) proposed a definition of social engineering in cybersecurity based on a systematic conceptual evolution analysis. Yet the definition alone is not enough to give insight into all the issues above, nor to serve as a tool for analyzing social engineering attack scenarios or incidents and providing a formal, explicit, reusable knowledge schema of the social engineering domain.

Ontology is a term that comes from philosophy, where it describes the existence of beings in the world. It has been adopted in informatics, semantic web, knowledge engineering and Artificial Intelligence (AI) fields, in which an ontology is a formal, explicit description of knowledge as a set of concepts within a domain and the relationships among them (i.e. what entities exist in a domain and how they are related). It defines a common vocabulary for researchers who need to share information and includes definitions of basic concepts in the domain and their relations (Noy and McGuinness 2001). In an ontology, semantic information and components such as concepts, objects, relations, attributes, constraints and axioms are encoded or formally specified, which makes the ontology machine-readable and gives it the capacity for reasoning. In this way, an ontology not only introduces a formal, explicit, shareable and reusable knowledge representation but can also add new knowledge about the domain.

Thus, we propose a domain ontology of social engineering to understand, analyze, reuse and share domain knowledge of social engineering.

Organization: “ Methodology to develop domain ontology ” section describes the background material and methodology to develop domain ontology. “ Material and ontology implementation ” section presents the material and ontology implementation. “ Result: domain ontology of social engineering in cybersecurity ” section is the result: domain ontology of social engineering in cybersecurity. “ Evaluation: knowledge graph application examples ” section is the evaluation and application of the ontology and knowledge graph. “ Discussion ” section is the discussion. “ Conclusion ” section concludes the paper.

Methodology to develop domain ontology

There is no single correct way or methodology for developing ontologies (Noy and McGuinness 2001), since ontology design is a creative process and many factors affect the design choices, such as the potential applications of the ontology, the designer's understanding and view of the domain, different domain features, and expectations that the ontology be more intuitive, general, detailed, extensible and/or maintainable.

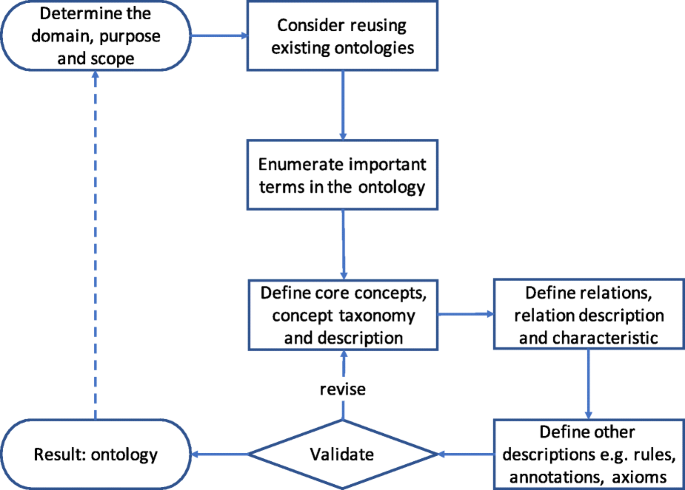

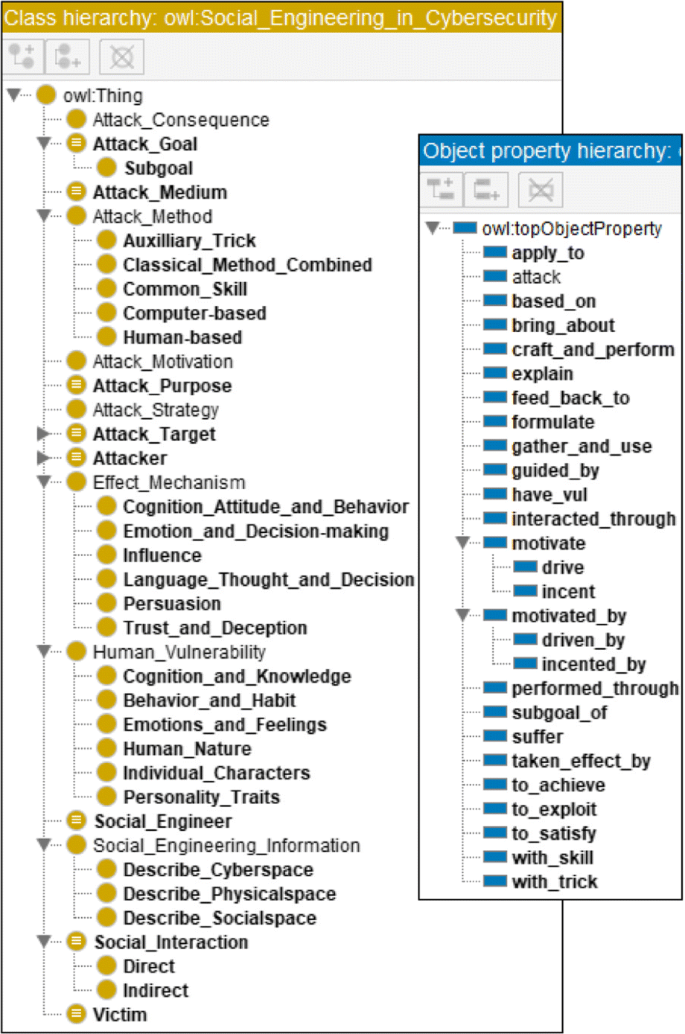

In this paper, we design the methodology to develop the domain ontology of social engineering based on the method reported in (Noy and McGuinness 2001), with some modification. Protégé 5.5.0 (Musen and Protégé Team 2015) is used to edit and implement the ontology. It should be noted that an "entity" in the real world is described as a "concept" in the ontology and a "class" in Protégé; a "relation" is described as an "object property" in Protégé. The methodology is shown in Fig. 1.

Overview of methodology to develop domain ontology of social engineering

(1) Determine the domain, purpose and scope.

As described before, the domain of the ontology is social engineering in cybersecurity. The purpose of the ontology is i) for design, to present what entities significantly constitute or affect social engineering and how these entities relate to each other, and ii) for application, to serve as a tool for understanding social engineering, analyzing social engineering attack scenarios or incidents and providing a formal, explicit, reusable knowledge schema of the social engineering domain. Thus, the ontology covers social engineering itself as a type of attack; measures regarding social engineering defense are not included here although they are important. Defense will be the theme of our future work.

(2) Consider reusing existing ontologies.

We conducted a systematic literature survey on social engineering and accumulated a literature database which contains 450+ studies from 1984.9 (the time of the earliest available literature in which the term "social engineering" was found in cybersecurity (Wang et al. 2020)) to 2020.5 (see Footnote 1). Few works focus on social engineering ontology, yet many terms can be obtained from the literature survey.

(3) Enumerate important terms in the ontology.

"Initially, it is important to get a comprehensive list of terms without worrying about overlap between concepts they represent, relations among the terms..." ( Noy and McGuinness 2001 ). These terms are useful to intuitively and quickly get a sketchy understanding on a domain, and helpful to develop a core concepts set after due consideration. A total of 350 relevant terms are enumerated from the literature database mentioned in (2). Table 1 shows these terms in a compact layout by length order Footnote 2 .

The next two steps are the most important steps in the ontology design process ( Noy and McGuinness 2001 ).

(4) Define core concepts, concept taxonomy and description.

In (Noy and McGuinness 2001), this step is to create the class hierarchy for a single concept "Wine". However, the "class, sub-class" hierarchy is a structure typically used for classification, in which only the relation "is a" or "is type of" is described. This is not the purpose of this paper. Thus, we instead define a set of concepts for the entities which significantly constitute or affect the social engineering domain and discuss their taxonomy. Then, we define more expressive relations among the concepts in the next step.

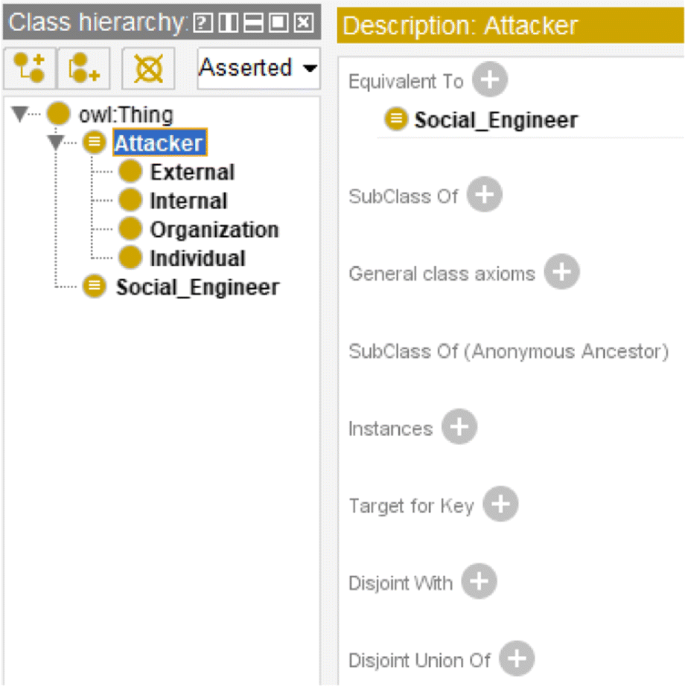

For each core concept, a definition is provided and relevant synonym terms are mentioned, to facilitate the reuse and sharing of domain knowledge. For example, the attacker (a.k.a. social engineer) is the party that conducts a social engineering attack; it can be an individual or an organization, and internal or external. In Protégé, these concepts are edited in the "Classes" tab. Two classes "Attacker" and "Social Engineer" are created and, because they represent the same class (concept), a description (class axiom) "Equivalent To" is set between them in the "Description" tab, as Fig. 2 shows.

Edit concepts and their description
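The effect of the "Equivalent To" axiom can be illustrated with a minimal plain-Python sketch. This is not Protégé's or an OWL reasoner's implementation; the helper names and the individual `mallory` are assumptions introduced only for illustration.

```python
# Sketch only: plain-Python stand-in for an "Equivalent To" class axiom.
# The individual "mallory" is hypothetical illustration data.

def equivalence_groups(axioms):
    """Merge class names linked by equivalence axioms into groups."""
    groups = []
    for left, right in axioms:
        merged = {left, right}
        untouched = []
        for group in groups:
            if group & merged:
                merged |= group      # overlapping group: absorb it
            else:
                untouched.append(group)
        groups = untouched + [merged]
    return groups


def same_concept(a, b, axioms):
    """True if a and b name the same concept under the axioms."""
    return a == b or any(a in g and b in g for g in equivalence_groups(axioms))


def is_instance_of(individual, cls, asserted_types, axioms):
    """Check class membership, honouring equivalent class names."""
    return same_concept(asserted_types[individual], cls, axioms)


axioms = [("Attacker", "Social Engineer")]
asserted_types = {"mallory": "Social Engineer"}

print(is_instance_of("mallory", "Attacker", asserted_types, axioms))  # True
```

An individual asserted under either class name is then retrievable under both, which is the practical benefit of recording synonym terms as equivalent classes.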

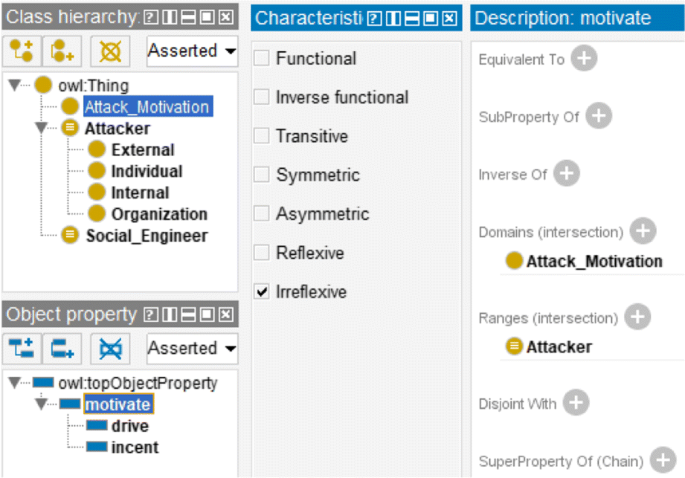

(5) Define relations, relation description and characteristic.

In this step, we create the relations among concepts based on their definitions. Some relations are directly expressed in the definitions, while some may be implicit and need an explicit description. For example, attack motivation is the factors that motivate (incent, drive, cause or prompt) the attacker to conduct a social engineering attack; thus, a concise relation "motivate" from "attack motivation" to "attacker" can be created. To be more compatible, two sub-relations "incent" and "drive", or another equivalent relation, can be added. In Protégé, these relations are edited in the "Object properties" tab. For the above example, "motivate" is created as an Object property; "Attack Motivation" is its Domain and "Attacker" is its Range. Because it represents a class pointing to another, different class, the relation characteristic "Irreflexive" is set, as Fig. 3 shows.

Edit relations, relation description and characteristic
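As a rough illustration of what Domain, Range and "Irreflexive" mean for "motivate", the following Python sketch treats them as checks on a candidate relation instance. This is a simplification and an assumption of the sketch: in OWL, domain and range axioms let a reasoner infer the types of the subject and object rather than reject data. The individuals `financial_gain` and `mallory` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectProperty:
    """Simplified description of a relation (object property)."""
    name: str
    domain: str          # class expected at the start of the relation
    range_: str          # class expected at the end of the relation
    irreflexive: bool = False


MOTIVATE = ObjectProperty("motivate", "Attack Motivation", "Attacker",
                          irreflexive=True)


def violations(prop, subject, obj, types):
    """List which of domain / range / irreflexive a candidate edge breaks."""
    problems = []
    if types.get(subject) != prop.domain:
        problems.append("domain")
    if types.get(obj) != prop.range_:
        problems.append("range")
    if prop.irreflexive and subject == obj:
        problems.append("irreflexive")
    return problems


# Hypothetical instance data.
types = {"financial_gain": "Attack Motivation", "mallory": "Attacker"}

print(violations(MOTIVATE, "financial_gain", "mallory", types))  # []
print(violations(MOTIVATE, "mallory", "mallory", types))  # ['domain', 'irreflexive']
```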

(6) Define other descriptions.

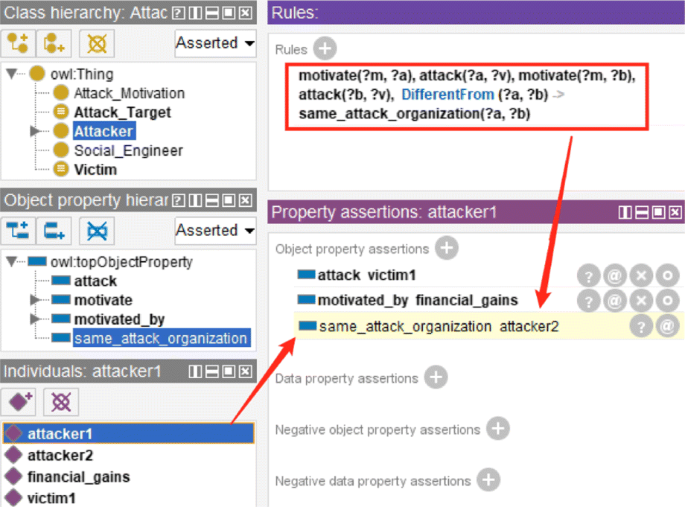

Besides the above, other descriptions can be added, such as annotations, axioms and rules. Examples are as follows. For the class "Attacker", its definition can be added as a comment in the Annotations tab with "rdfs:comment", to facilitate conceptual understanding and later debugging. Axioms are statements that are asserted to be true. For the relation "motivate", we can create an inverse relation "motivated by" and then set the description (object property axiom) "Inverse Of" against "motivate", to facilitate knowledge retrieval such as "attacker is motivated by certain attack motivation". An ontology can also generate new knowledge by reasoning with rules. Assume that "different attackers are regarded as from the same attack organization if they are motivated by the same motivation and attack the same victim"; then the following rule can be defined to implement the reasoning, as Fig. 4 shows. Rule: motivate(?m, ?a) ∧ attack(?a, ?v) ∧ motivate(?m, ?b) ∧ attack(?b, ?v) ∧ differentFrom(?a, ?b) → same_attack_organization(?a, ?b).

Define and apply rules to knowledge reasoning
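The inverse relation and the rule above can be mimicked over a small set of triples. The sketch below is plain Python, not the reasoner used in Protégé; the instance names are hypothetical, and distinct names are assumed to denote different individuals, which stands in for the explicit differentFrom(?a, ?b) condition.

```python
from itertools import permutations


def add_inverse(triples, prop, inverse):
    """Materialize an 'Inverse Of' axiom: (s, prop, o) gives (o, inverse, s)."""
    return triples | {(o, inverse, s) for s, p, o in triples if p == prop}


def same_attack_organization(triples):
    """Apply the rule: same motivation and same victim, different attackers."""
    motivate = {(s, o) for s, p, o in triples if p == "motivate"}
    attack = {(s, o) for s, p, o in triples if p == "attack"}
    inferred = set()
    for (m1, a), (m2, b) in permutations(motivate, 2):
        if m1 != m2 or a == b:   # need a shared motivation, distinct attackers
            continue
        victims_a = {v for x, v in attack if x == a}
        victims_b = {v for x, v in attack if x == b}
        if victims_a & victims_b:  # at least one common victim
            inferred.add((a, "same_attack_organization", b))
    return inferred


# Hypothetical instance data for a deductive check of the rule.
triples = {
    ("financial_gain", "motivate", "attacker_1"),
    ("financial_gain", "motivate", "attacker_2"),
    ("revenge", "motivate", "attacker_3"),
    ("attacker_1", "attack", "victim_1"),
    ("attacker_2", "attack", "victim_1"),
    ("attacker_3", "attack", "victim_1"),
}

for triple in sorted(same_attack_organization(triples)):
    print(triple)
```

Only `attacker_1` and `attacker_2` are linked (in both directions), because `attacker_3` attacks the same victim but has a different motivation.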

(7) Validate and revise.

After the concepts, relations and related descriptions are defined, a domain ontology is created. Yet it is initial and imperfect. Minor mistakes such as misplacement and typing errors may occur when a large number of items exist. Illogical or contradictory descriptions may be defined. Some classes, relations or descriptions may be absent or superfluous. Thus, an iterative process is necessary for ontology development, validation and revision.

Because the ontology is formally and explicitly encoded, any faults that cause logical inconsistency can be found. The built-in reasoner HermiT is used for this reasoning validation. Further, we create instances as the actual data to conduct a deductive validation, as Fig. 4 shows. This is an intuitive method to test whether the ontology (e.g. the rules) is effective, and it also helps to adjust descriptions and revise the ontology to achieve the purpose stated previously.

(8) Result: Ontology.

Finally, a domain ontology of social engineering is developed after iterative revision and validation.

Material and ontology implementation

The background material regarding literature and terms has been mentioned in the “Methodology to develop domain ontology” section and is not repeated here. This section presents the key material and procedures for the ontology implementation, i.e. defining the concepts, relations and other related descriptions.

Define core concepts in the domain ontology

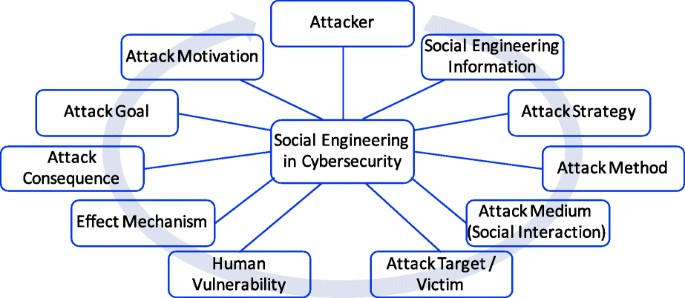

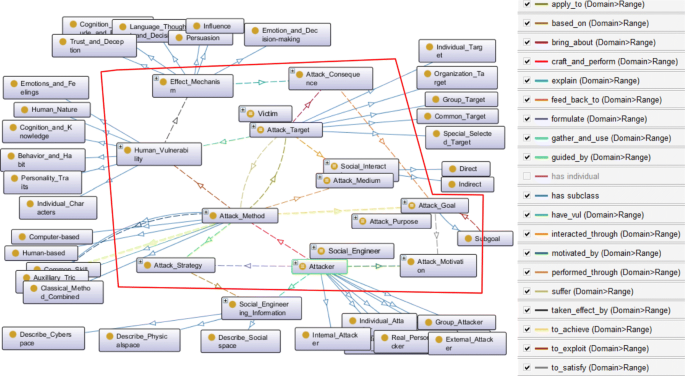

This subsection details 11 core concepts corresponding to the entities that significantly constitute or affect the social engineering domain. For each concept, the concept definition, synonym terms, taxonomy and some other properties are described. Figure 5 shows these entities (concepts). The circular arrow represents an approximate attack cycle for typical attack scenarios: 1) the attacker, motivated by certain factors, 2) gathers specific information, formulates an attack strategy and crafts an attack method; 3) then, through a certain medium, the attack method is performed and the attack target is interacted with 4) to exploit the target's vulnerabilities, which take effect and lead to attack consequences; 5) the consequences feed back to the predetermined attack goal to satisfy the attack motivation.

Core entities (concepts) in social engineering domain

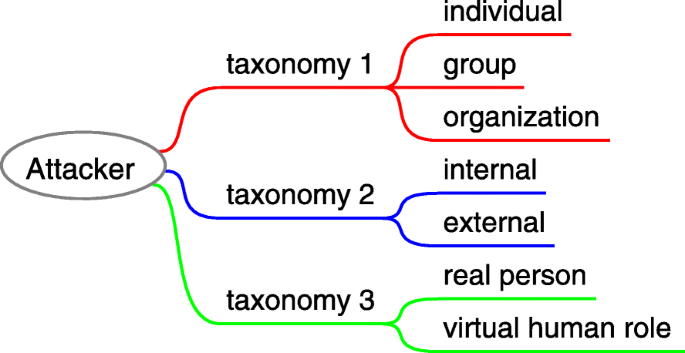

For social engineering, the attacker (a.k.a. social engineer) is the party that conducts a social engineering attack; it is typically motivated by certain factors discussed in the “Attack motivation” section. Social engineering attackers appear in various forms in reality, such as hackers, phreakers, phishers, disgruntled employees, identity thieves, penetration testers, script kiddies and malicious users. Different criteria can also be used for the attacker's taxonomy. The attacker as an individual person is familiar to the public, yet it does not have to be an individual: the attacker can also be a group or an organization. The attacker can be a real person or a virtual human role (e.g. a bot), and it can be internal or external, as Fig. 6 shows.

Taxonomy of attacker (social engineer)

Attack motivation

Attack motivation is the factors that motivate (incent, drive, cause or prompt) the attacker to conduct a social engineering attack. It can be intrinsic or extrinsic. Considering that this simple taxonomy does not seem to be significantly helpful to the social engineering analysis, a common list of attack motivations in social engineering may be more intuitive. It includes but is not limited to: 1) financial gain ( Research 2011 ), 2) competitive advantage ( Chitrey et al. 2012 ), 3) revenge ( Research 2011 ), 4) external pressure, 5) personal interest, 6) intellectual challenge, 7) increasing followers or friends in SNSs, 8) image spoiling (denigration, reputation destruction, stigmatization), 9) prank, 10) fun or pleasure, 11) politics, 12) war, 13) religious belief, 14) fanaticism, 15) social disorder, 16) cultural disruption ( Indrajit 2017 ), 17) terrorism, 18) espionage, 19) security test.

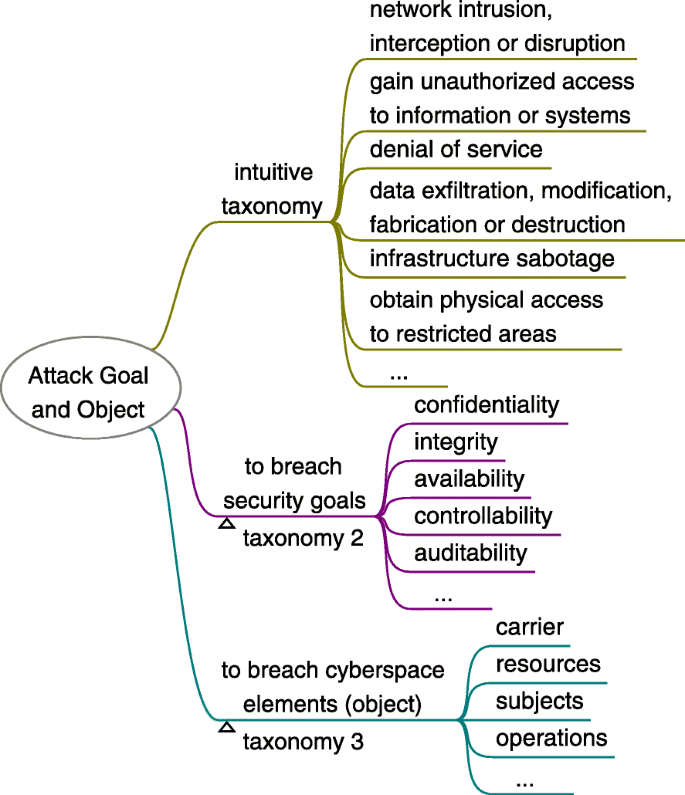

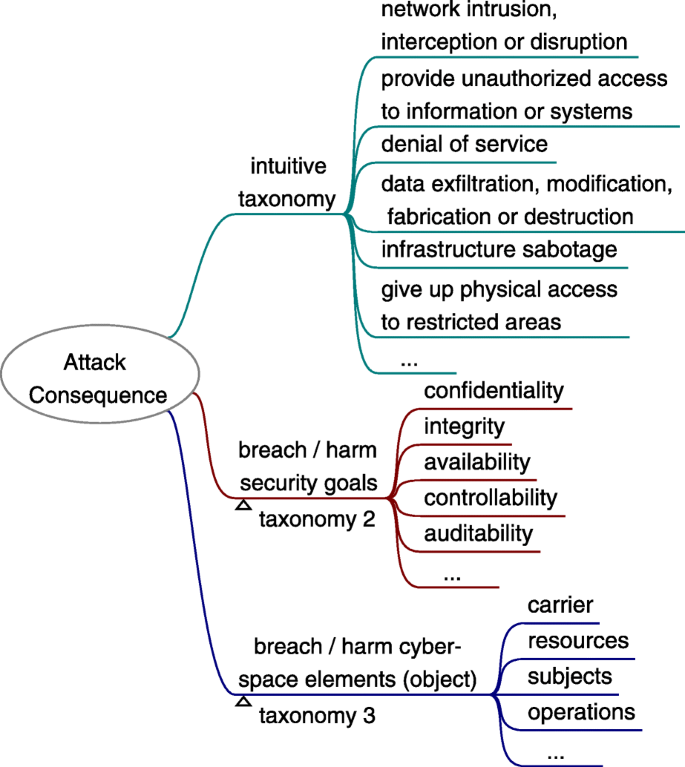

Attack goal and object

The attack goal (a.k.a. attack purpose) is something that the attacker wants to achieve by specific attack methods so that the attack motivation can be satisfied. For social engineering, it is some kind of breach of cyberspace security. In general, to breach cyberspace security is to breach the security goals (confidentiality, integrity, availability, controllability, auditability, etc.) of the four basic elements of cyberspace (i.e. the attack object) (Wang et al. 2020). These four basic elements are Carrier (the infrastructure, hardware and software facilities of cyberspace), Resources (the objects, data content that flows through the cyberspace), Subjects (the main body roles and users, including human users, organizations, equipment, software, websites, etc.), and Operations (all kinds of activities of processing Resources, including creation, storage, change, use, transmission, display, etc.) (Fang 2018a; 2018b). For complex attack scenarios, there may be sub-goals (preconditions), which themselves may not breach cybersecurity.

Social engineering attack goals include but are not limited to: 1) network intrusion, interception or disruption, 2) gaining unauthorized access to information or systems, 3) denial of service, 4) data exfiltration, modification, fabrication or destruction, 5) infrastructure sabotage, 6) obtaining physical access to restricted areas. Thus, attack goals can simply be classified into the above categories, or other taxonomies can be used, as Fig. 7 shows.

Taxonomy of social engineering attack goal

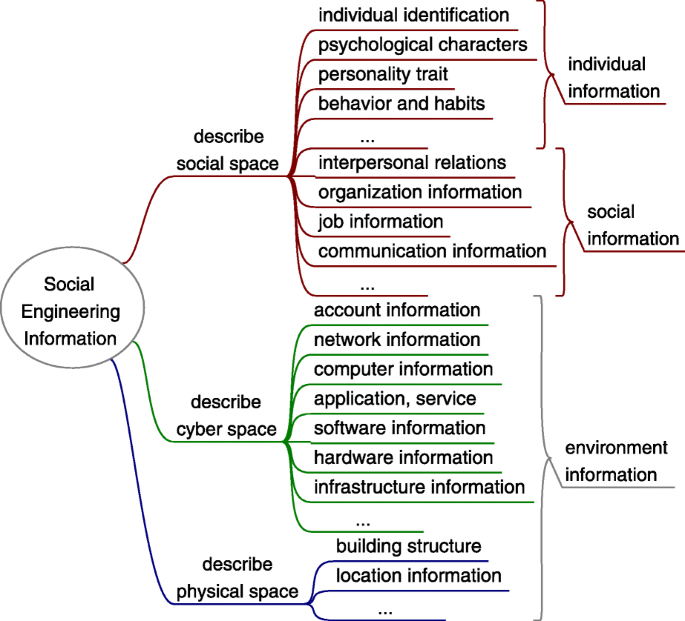

Social engineering information

In many attack scenarios, the success of social engineering relies heavily on the information gathered, such as personal information of the targets (victims), organization information, network information and social relation information. In a broad sense, every bit of information posted publicly or leaked in cyberspace or in reality might provide attackers with resources to learn the environment, discover targets, find vulnerable human factors and cyber vulnerabilities, formulate an attack strategy, and craft attack methods. This is also a feature of social engineering compared with classical computer attacks. Thus, this paper uses "social engineering information" to represent any information that helps the attacker to conduct a social engineering attack.

Social engineering information includes but is not limited to: 1) person name, 2) identity 3) photograph, 4) habits and characteristics, 5) hobbies or interests, 6) job title, 7) job responsibility, 8) schedule, 9) routines, 10) new employee, 11) organizational structure, 12) organizational policy, 13) organizational logo, 14) company partner, 15) lingo, 16) manuals, 17) interpersonal relations, 18) family information, 19) profile in SNSs, 20) posts in social media, 21) connections in SNSs, 22) SNSs group information, 23) (internal) phone numbers, 24) email information (address, format, footer, etc.), 25) username, 26) password, 27) network information, 28) computer name, 29) IP addresses, 30) server name, 31) application information, 32) version information, 33) hardware information, 34) IT infrastructure information, 35) building structure, 36) location information.

Figure 8 presents a taxonomy based on what space the information describes, in which the last level may be more intuitive. Other taxonomies are also workable, such as publicly accessible information versus restricted information, or personal information, social relations information and information about various other environments (cyber, cultural, physical).

Taxonomy of social engineering information

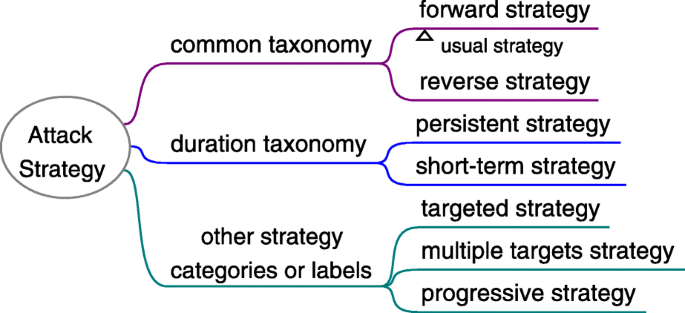

Attack strategy

Attack strategy is a plan, pattern, or guidance of actions formulated by the attacker for a certain attack goal. It is necessary especially for complex social engineering attacks. Usually, social engineering attackers formulate the attack strategy based on their comprehensive understanding of the attack situation, such as resources, environments, targets, vulnerabilities and mediums. There are two common social engineering strategies in the literature: forward (usual) strategy and reverse strategy. In the forward attack strategy, the attacker directly contacts the targets and delivers attack payloads to them, waiting for the targets to trigger the attack and be compromised. In reverse social engineering, however, the targets are prompted to actively contact the attacker with a request or for help, and the attacker usually poses in advance as a legitimate, authoritative, expert or trustworthy party. As a result, a higher degree of trust is established and the targets are more likely to be successfully attacked. For example, the attacker first causes a network failure and then pretends to be a technical support employee; when the targets seek help, the attacker uses certain excuses to convince them to reveal a password or install malicious software.

From the duration perspective, attack strategy can be persistent strategy or short-term strategy. Some other categories are also helpful to label the attack strategies, as Fig. 9 shows.

Taxonomy of social engineering attack strategy

Attack method

When an attack strategy exists, the attack method generally follows or is guided by it. Attack method is the way, manner or means of carrying out an attack; the attacker crafts and performs it to achieve a specific attack goal. Synonyms such as attack vector, attack technique and attack approach are used to convey the same meaning. A common taxonomy in the literature divides social engineering attacks into human-based and computer-based (or technology-based) (Damle 2002; Redmon 2005; Ivaturi and Janczewski 2011; Mohd Foozy et al. 2011; Maan and Sharma 2012). Figure 10 (right) presents 20 attack method instances, in which some methods such as influence, deception, persuasion, manipulation and induction also describe skills frequently used in other methods. In many attack scenarios, multiple social engineering methods can be used jointly; classical attack methods that exploit non-human vulnerabilities might also be combined to perform social engineering attacks. Besides, many auxiliary tricks or cunning actions may be utilized in different methods to assist the attack (e.g. to obtain trust, or to influence or deceive the targets). Figure 10 shows an overview of these categories and the corresponding instances. It is a non-exhaustive list, and it seems impossible to enumerate all social engineering attack methods, since new attack methods keep emerging with the development of cyber technology, the evolution of the environment and attackers' creativity.

Taxonomy of social engineering attack method

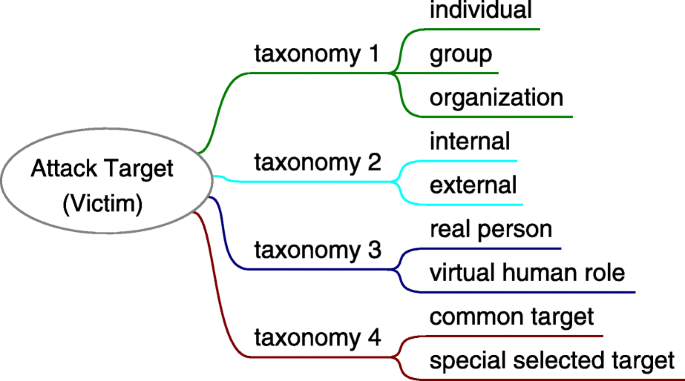

Attack target, victim

Attack target is the party that suffers a social engineering attack and brings about an attack consequence. The attacker applies the attack method to the targets, and they become victims once their vulnerabilities are exploited. For attackers, anyone helpful in achieving the attack goal is a potential attack target, and the attacker might select multiple targets in some attack scenarios. The potential attack targets include but are not limited to: 1) new employees, 2) secretaries, 3) help desk, 4) technical support, 5) system administrators, 6) telephone operators, 7) security guards, 8) receptionists, 9) contractors, 10) clients, 11) partners, 12) managers, 13) executive assistants, 14) manufacturers, 15) vendors (Mitnick and Simon 2011). Similar to the attacker, an attack target can be an individual, a group or an organization; a real person or a virtual human role; internal or external, as Fig. 11 shows.

Taxonomy of social engineering attack target

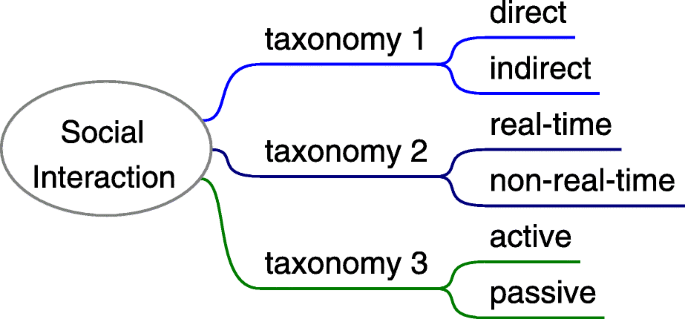

Social interaction and attack medium

Social engineering is a type of attack that involves social interaction, which is defined as the communication between or joint activity involving two or more human roles (Wang et al. 2020). It covers interpersonal interaction in the real world and user interaction in cyberspace. Attack medium is not only the entity through which the social interaction is implemented (through which the target is contacted), but also the substance or channel through which attack methods are carried out. In some social engineering attacks, several different mediums might be used. For example, the attacker deceives the target over the phone into agreeing to receive an important document, and then carries out a phishing attack by email.

Social interaction can be classified in various ways according to different criteria. It can be direct (e.g. face to face in the real world) or indirect (e.g. email), real-time (e.g. talking on the phone) or non-real-time (e.g. email), active or passive (e.g. reverse social engineering), as Fig. 12 shows.

Taxonomy of social interaction in social engineering

The attack mediums include but are not limited to: 1) the real world, 2) attached files, 3) letter, 4) manual, 5) card, 6) picture, 7) video, 8) RFID tag, 9) QR code, 10) phone, 11) email, 12) website, 13) software, 14) Bluetooth, 15) pop-up window, 16) instant messenger, 17) cloud service, 18) Voice over IP (VoIP), 19) portable storage drives, 20) short message service (SMS), 21) mobile communication devices, 22) SNSs.

Human vulnerability

Human vulnerability is the human factor exploited by the attacker to conduct a social engineering attack through various kinds of attack methods. This is a distinctive attribute of social engineering compared to classical computer attacks. For social engineering, other types of vulnerability (e.g. software vulnerabilities) can be exploited together with human vulnerability, yet they are non-necessary ( Wang et al. 2020 ). A wide range of human factors can be exploited in social engineering, and a skilled social engineer (attacker) can transform common or inconspicuous human factors into security vulnerabilities exploitable in specific attack scenarios.

In general, human vulnerabilities in social engineering fall into four aspects: 1) cognition and knowledge, 2) behavior and habit, 3) emotion and feeling, and 4) psychological vulnerabilities. The psychological vulnerabilities can be further divided into three levels: 1) human nature, 2) personality trait and 3) individual character, from the evolution perspective of human wholeness to individuation (Wang et al. 2021). The following is a non-exhaustive list of human vulnerabilities, which contains 43 instances of these six categories.

Cognition and Knowledge (8 instances): ignorance, inexperience, thinking set and stereotyping, prejudice / bias, conformity, intuitive judgement, low level of need for cognition, heuristics and mental shortcuts.

Behavior and Habit (4 instances): laziness / sloth, carelessness and thoughtlessness, fixed-action patterns, behavioral habits / habitual behaviors.

Emotions and Feelings (11 instances): fear / dread, curiosity, anger / wrath, excitement, tension, happiness, sadness, disgust, surprise, guilt, impulsion, fluke mind.

Human nature (6 instances): self-love, sympathy, helpfulness, greed, gluttony, lust.

Personality traits (5 dimensions): conscientiousness, extraversion, agreeableness, openness, neuroticism.

Individual characters (9 instances): credulity / gullibility, friendliness, kindness and charity, courtesy, humility, diffidence, apathy / indifference, hubris, envy.

Effect mechanism

Social engineering effect mechanism describes the structural relation of what, why or how a specific attack effect (consequence) corresponds to a specific human vulnerability in a specific attack situation (Wang et al. 2021). Given the attack scenarios and human vulnerabilities, it explains or predicts the attack consequence. For example, impression management theory and the reciprocity norm explain why new employees (inexperience, helpfulness, etc.) are more likely to give up their username and password to an attacker posing as technical support staff, who first helps to resolve their network failure and then requests an information disclosure with certain excuses. Social engineering effect mechanisms involve many principles and theories from multiple disciplines such as sociology, psychology, social psychology, cognitive science, neuroscience and psycholinguistics. The study (Wang et al. 2021) summarizes six aspects of social engineering effect mechanisms: 1) persuasion, 2) influence, 3) cognition, attitude and behavior, 4) trust and deception, 5) language, thought and decision, 6) emotion and decision-making. The following is a non-exhaustive list of effect mechanisms, which contains 38 instances of these six aspects.

Persuasion (7 instances): similarity & liking & helping in persuasion, distraction in persuasion and manipulation, source credibility and obey to authority, the central route to persuasion, the peripheral route to persuasion, Elaboration Likelihood Model of persuasion, recipient’s need for cognition in persuasion.

Influence (8 instances): group influence and conformity, normative influence (social validation), informational influence (social proof), social exchange theory, reciprocity norm, social responsibility norm, moral duty, self-disclosure and rapport relation building.

Cognition, Attitude and Behavior (9 instances): impression management theory, cognitive dissonance, commitment and consistency, foot-in-the-door effect, diffusion of responsibility, bystander effect, deindividuation in group, time pressure and thought overloading, scarcity: perceived value and fear arousing.

Trust and Deception (5 instances): trust and take risk, factor affecting trust, factor affecting deception, integrative model of organizational trust, interpersonal deception theory (IDT).

Language, Thought and Decision (4 instances): relation between language and thinking, framing effect and cognitive bias, language invoke confusion: induce and manipulation, indirectness of thought and negative conception expression in language.

Emotion and Decision-making (5 instances): neurophysiological mechanism of emotion & decision, emotion and feelings influence decision making, facial expression & deception leakage, facial action coding, micro expression identify and deception detecting.

Attack consequence

Attack consequence is something that follows as a result or effect of a social engineering attack. The attacker feeds it back to the attack goal to decide whether a further attack is required. The taxonomy of attack consequence is similar to the taxonomy of attack goal, as Fig. 13 shows.

Taxonomy of social engineering attack consequence

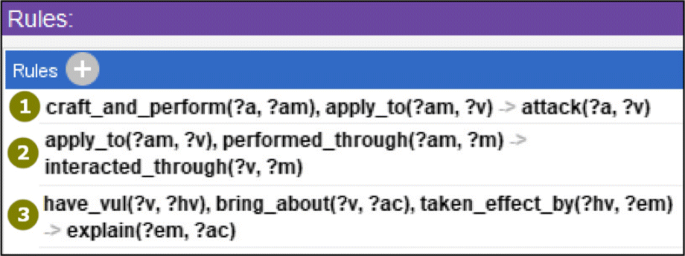

Because subclass names in Protégé will be converted to node labels in the later knowledge graph, multiple different taxonomies can be used to assist knowledge analysis, depending on what makes the demonstration intuitive and on the features of the data. Figure 14 (left) shows the implementation of the concepts defined above. Table 2 shows the related concept descriptions that are set as class axioms in Protégé yet not reflected in Fig. 14 (Footnote 3).

Overview of concepts and relations defined in Protégé

Define relations in the domain ontology

Based on the definitions presented in the “Define core concepts in the domain ontology” section, we extract 22 kinds of relations among the core concepts. Table 3 shows these relations and their Domain (start), direction and Range (end). Figure 14 (right) shows the implementation of these relations in Protégé, and Table 4 shows the related descriptions that are set as object property (relation) axioms yet not reflected in Fig. 14 and Table 3.

Define other descriptions in the ontology

Besides the axiom descriptions for concepts and relations in Tables 2 and 4, annotations can optionally be added to facilitate the ontology implementation. Many comments (a type of annotation) for instances are added in the “Create instances, knowledge base and knowledge graph” section to help instance editing and knowledge analysis.

Here three reasoning rules are defined for the analysis of simple scenarios, e.g. those with a unique attacker, victim and attack consequence (Fig. 15). Rule 1 is used to add a new relation: if 1) an attacker crafts and performs a certain attack method and 2) the attack method is applied to a target, then a relation "attack" is created from the attacker to the target (victim). Rules 2 and 3 are used to automatically complete the relations that are not designated explicitly in the instance data but have been defined in the ontology. This is useful for improving the knowledge base and convenient for instance creation. The built-in reasoner HermiT can be used to perform the reasoning. For complex attack analysis, these rules might need some adjustment, and other reasoning tools can also be used.
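The logic of rule 1 can be illustrated outside the reasoner with a minimal Python sketch. It is not the rule as encoded in Protégé: the triple representation, the relation identifiers `craft_and_perform`, `apply_to` and `attack`, and the instance names are assumptions made only for illustration.

```python
# Hypothetical instance data as (subject, relation, object) triples.
triples = {
    ("attacker1", "craft_and_perform", "phishing"),
    ("phishing", "apply_to", "victim1"),
    ("attacker2", "craft_and_perform", "pretexting"),  # no target recorded
}


def infer_attack_relations(triples):
    """Rule 1 sketch: attacker -craft_and_perform-> method -apply_to-> target
    implies attacker -attack-> target."""
    performed = [(s, o) for s, r, o in triples if r == "craft_and_perform"]
    applied = [(s, o) for s, r, o in triples if r == "apply_to"]
    return {
        (attacker, "attack", target)
        for attacker, method in performed
        for applied_method, target in applied
        if method == applied_method
    }


inferred = infer_attack_relations(triples)
print(inferred)
```

In this toy data only attacker1 gains an "attack" relation, because pretexting has no recorded target.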

Rules defined in the ontology

The above is the key material and ontology implementation after ontology revision and validation. The supplementary material will help reviewers and independent researchers reproduce the result.

Result: domain ontology of social engineering in cybersecurity

Figure 16 shows the domain ontology of social engineering in cybersecurity developed in Protégé (Footnote 3). The core concepts and their relations are marked inside the red polygon, the outside shows the taxonomies (also used as labels), and the right area is the legend for relations (the directed colored connections in the figure). To be intuitive and integrative, Fig. 17 presents the ontology in a clearer and more concise way.

The domain ontology of social engineering in cybersecurity developed in Protégé

The domain ontology of social engineering in cybersecurity

Overall, 11 core concepts and 22 kinds of relations among them are formally and explicitly encoded / defined in Protégé, together with related descriptions, rules and annotations. The domain ontology can be exported in multiple ontology description languages and file formats, such as RDF / XML, OWL / XML, Turtle and JSON-LD, to reuse and share the domain knowledge schema.

Evaluation: knowledge graph application examples

The best way to evaluate the quality of an ontology may be to use it in problem-solving methods or in applications that reflect the design goal (Noy and McGuinness 2001). Corresponding to the purpose of the ontology development presented in the “Methodology to develop domain ontology” section, this section evaluates the domain ontology by its knowledge graph application for analyzing social engineering attack scenarios or incidents. First, the ontology, serving as a machine-processable knowledge schema, is used to create the instances, generate the knowledge base and build a knowledge graph. Then, 7 knowledge graph application examples are presented for social engineering attack analysis.

Create instances, knowledge base and knowledge graph

An ontology, together with a set of instances organized by the knowledge schema that the ontology defines, constitutes a knowledge base, which further serves as the data source of a knowledge graph. This paper requires a dataset of social engineering attack scenarios that contains the necessary instance classes such as attacker, victim / target, human vulnerability, social interaction (medium) and attack goal. Yet no such public dataset is available now. Thus, the attack incidents and typical attack scenarios described in (Wang et al. 2020) and (Wang et al. 2021) are adopted and expanded as material to create instances for each concept defined in the ontology and to build the knowledge base. Overall, 15 attack scenarios (Table 5) in 14 social engineering attack types are used to generate a small-to-medium-sized knowledge base.

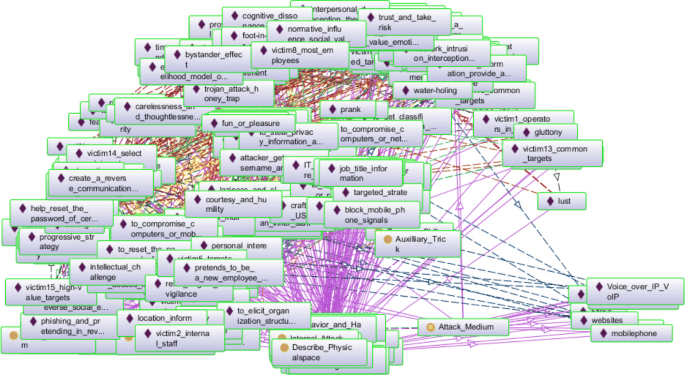

The instances and their interrelations described in every attack scenario are also dissected and edited in Protégé, since it is convenient to check data consistency and correct errors against the ontology. In this process, we add many comments (for instances of attacker, attack method and victim) to assist instance creation and knowledge analysis. Figure 18 shows an overview of the knowledge base in Protégé. A total of 224 instances are created in the knowledge base (Footnote 4).

The overview of the knowledge base generated in Protégé

Due to the limited functionality of Protégé for data analysis and visualization, we select Neo4j (community-3.5.19) (Neo4j community edition 3.5.19 2020) as the tool to display the knowledge graph and analyze social engineering attacks. Neo4j makes it easier and faster to represent, retrieve and navigate connected data. In addition, commands in the Neo4j CQL (Cypher query language) are declarative and based on pattern matching, so they are human-readable and easy to learn.

There are mainly two steps to migrate data from Protégé to Neo4j. First, export the ontology and instances in Protégé to an RDF/XML or OWL/XML file, with the reasoner enabled to infer and complete the knowledge according to the axioms and rules defined. Then, import the RDF/XML file (Footnote 4) into Neo4j with the plugin neosemantics (version 3.5.0.4). The detailed scripts and commands used to build the knowledge graph are submitted as supplementary material.

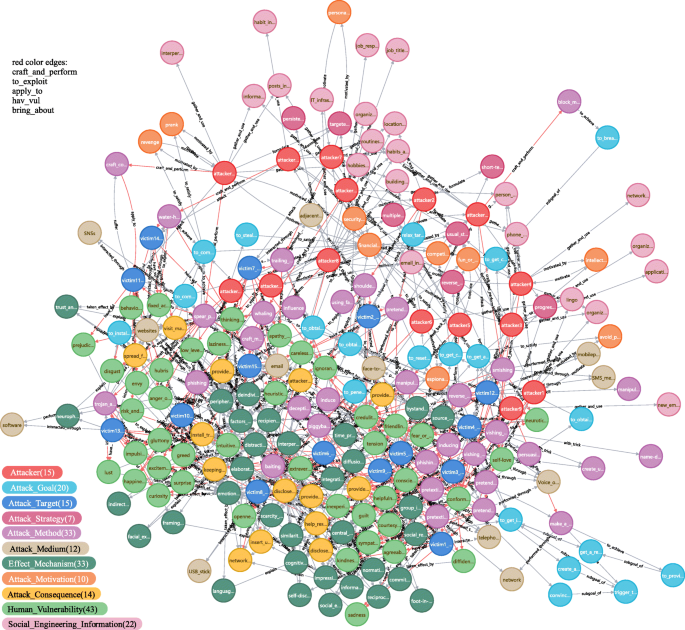

According to the statistics in Neo4j, 1785 triples were imported and parsed, and 344 resource nodes and 939 relations were created in the whole knowledge graph. Figure 19 shows the knowledge graph, which consists of all instance nodes and their interrelations. The legend for node colors is at the bottom left.

The knowledge graph generated in Neo4j

In the knowledge graph, the relations craft and perform, apply to, to exploit, have vul and bring about among the nodes attacker, attack method, victim, human vulnerability and attack consequence are colored red, to abstract and denote an attack occurrence (Fig. 19) for the convenience of attack analysis.

Knowledge graph application examples

By virtue of the domain ontology and knowledge graph, at least 7 application examples (in 6 patterns) are available to analyze social engineering attack scenarios or incidents (Footnote 5).

Analyze single social engineering attack scenario or incident

The components of a specific social engineering attack scenario can be dissected into 11 classes of nodes with different colors. These nodes are interconnected and constitute an intuitive and vivid knowledge graph. In this way, security researchers can quickly gain insight into an attack, from the whole to its parts.

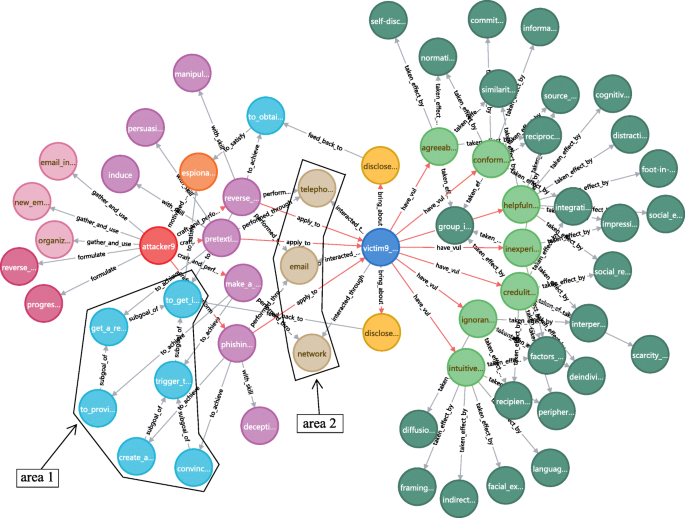

A case in point is the knowledge graph of attack scenario 9 (a reverse social engineering attack), as Fig. 20 shows. The left part (of area 2) depicts the contents surrounding the attacker: attacker9 is motivated by espionage to gather and use information about organization structure, new employees and email addresses; formulates a reverse and progressive strategy; and crafts and performs (red arrow) multiple attack methods to elicit passwords or other sensitive information, or to get access or help to breach cybersecurity. The goal and sub-goals in area 1 form an attack tree structure, which makes it possible to describe the multi-step attacks of a progressive strategy or other complex attack scenarios. The middle part (area 2) depicts the attack mediums through which the attack methods are performed, and also the forms of interaction with targets (victims). The right part depicts the nodes related to the victim: victim9 brings about certain attack consequences because he / she has vulnerabilities such as conformity, inexperience and helpfulness, which are exploited by the attack methods and take effect through the mechanisms displayed in the nodes at the right edge. Some relations (suffer, to exploit, explain) are not displayed here to keep the view clear; they can be returned by adding CQL expressions, clicking a node (expand / collapse relations) or using the setting "connect result nodes".

Analyze single social engineering attack scenario (e.g. scenario 9) by knowledge graph

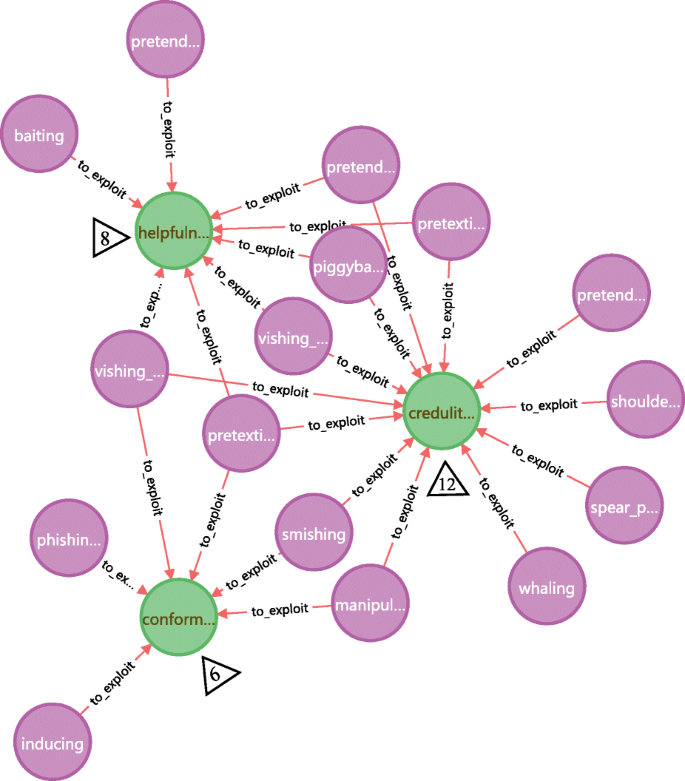

Analyze the Most exploited human vulnerabilities

As one of the focal points of confrontation between social engineering attack and defense, human vulnerability is what attackers want to exploit and what defenders / victims want to eliminate or mitigate. Knowing the frequently exploited human vulnerabilities is of great significance for social engineering defense. The exploitation frequency of each human vulnerability in the knowledge base can be counted and ranked by CQL expressions (MATCH, COUNT, ORDER). Figure 21 extracts the top 3 human vulnerabilities most exploited by various kinds of attack methods: credulity, helpfulness and conformity. This suggests that these human vulnerabilities deserve particular vigilance in security-related matters and more attention in defense measures such as security awareness training.
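The count-and-rank step can be sketched in Python over a plain edge list instead of a CQL query. This is only an illustration of the idea: the (attack method, human vulnerability) edges below are hypothetical and do not reproduce the knowledge base, so the resulting ranking is not the one in Fig. 21.

```python
from collections import Counter

# Hypothetical "to exploit" edges: (attack method, human vulnerability).
exploit_edges = [
    ("phishing", "credulity"),
    ("pretexting", "credulity"),
    ("pretexting", "helpfulness"),
    ("baiting", "curiosity"),
    ("reverse_se", "helpfulness"),
    ("whaling", "credulity"),
]


def top_exploited(edges, k=3):
    """Count distinct attack methods per vulnerability and return the top k.

    Ties are broken alphabetically so the result is deterministic.
    """
    counts = Counter(vul for _method, vul in set(edges))
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


top3 = top_exploited(exploit_edges)
print(top3)
```

The same function applies to the attack medium analysis if the edge list pairs attack methods with mediums instead.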

Find the most exploited (top 3) human vulnerabilities

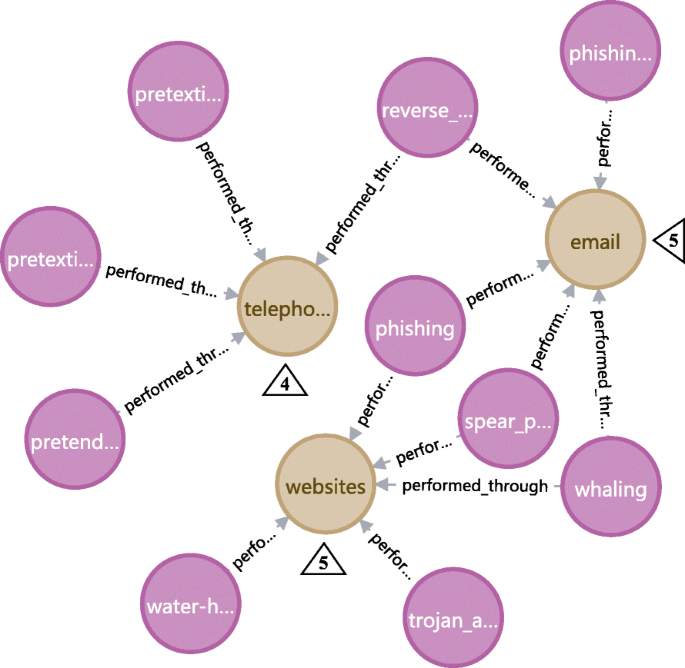

Analyze the Most used attack mediums and interaction forms

Similar to the analysis pattern in the “Analyze the Most exploited human vulnerabilities” section, a statistical analysis of attack mediums and interaction forms can be executed to understand where social engineering attacks frequently occur. Figure 22 presents the top 3 mediums most used to perform social engineering attacks in the knowledge base: email, website and telephone. This reflects that many social engineering attacks are performed through networks and electronic communication, and reminds us to beware of social engineering threats when using these communication mediums.

Find the most used (top 3) attack mediums and social interaction

Find additional (potential) threats for victims (targets)

For a specific victim (target), the knowledge graph can be used to find additional (potential) threats beyond the given scenario. The following analysis pattern can be extracted from the domain ontology and attack scenario analysis:

Namely: if a victim v1 has certain human vulnerabilities hv that are exploited in scenario S1, and the same hv are also found to be exploited in another scenario S2 by attacker a2 through attack method am2, then attacker a2 can also employ attack method am2 to attack victim v1 (i.e. to exploit victim v1's vulnerabilities hv).
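A minimal Python sketch of this pattern follows. It is an illustration under assumed data structures (a dictionary of victim vulnerabilities and sets of edge pairs), not the CQL pattern used in the paper, and all instance names are hypothetical.

```python
def additional_threats(victim, has_vul, performs, exploits, known_pairs):
    """Return {(attacker, method): shared vulnerabilities} for every pair
    outside known_pairs whose method exploits a vulnerability of the victim."""
    victim_vuls = has_vul.get(victim, set())
    threats = {}
    for attacker, method in sorted(performs):
        if (attacker, method) in known_pairs:
            continue  # already described in the victim's own scenario
        shared = {hv for m, hv in exploits if m == method and hv in victim_vuls}
        if shared:
            threats[(attacker, method)] = shared
    return threats


# Hypothetical edges: victim -> vulnerabilities, (attacker, method), (method, vulnerability).
has_vul = {"victim1": {"credulity", "helpfulness"}}
performs = {("attacker1", "phishing"), ("attacker2", "pretexting"), ("attacker3", "baiting")}
exploits = {
    ("phishing", "credulity"),
    ("pretexting", "helpfulness"),
    ("pretexting", "obedience"),
    ("baiting", "curiosity"),
}

threats = additional_threats(
    "victim1", has_vul, performs, exploits, known_pairs={("attacker1", "phishing")}
)
print(threats)
```

Here attacker2 with pretexting is reported as an additional threat to victim1, while attacker3 is not, because baiting exploits no vulnerability that victim1 has.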

Figure 23 shows this application, with victim7 as an example. It depicts that victim7 has five human vulnerabilities, which are exploited by attacker7 in scenario7; besides, three of these vulnerabilities can also be exploited by another 5 pairs of attacker and attack method. In short, there are 5 additional potential attack threats to victim7, and precautions should be taken against them.

For specific victim, find additional threats beyond the given scenario

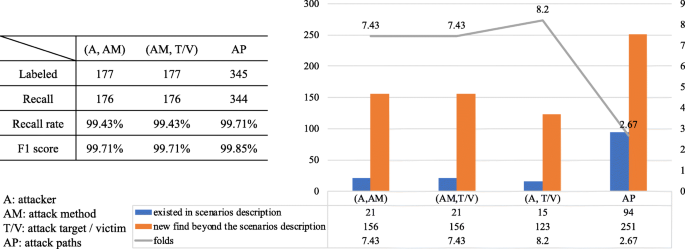

To evaluate this and the following two analysis patterns, we extracted all the undirected acyclic graphs (along red edges) from an attacker to a victim in the knowledge graph. This treatment generated a clearly labeled dataset while avoiding subjectivity in the labeling process. In total, 345 reachable paths (i.e. attack paths) were labeled. Among these attack paths, 177 (attacker, attack method) pairs are labeled. For all 15 victims, this analysis pattern finds 156 new (attacker, attack method) threat pairs beyond the 21 pairs described in Table 5. Besides, the analysis pattern recalls 176 pairs with no false positives. The recall rate is 99.43% and the F1 score is 99.71%. One pair was omitted because one attack method's to exploit edges (to hv) were divided and assigned to other attack methods in the same scenario.
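The reported metrics can be checked with a short calculation. The sketch below assumes the standard definitions (precision = TP / (TP + FP), recall = TP / labeled total, F1 = harmonic mean of the two) and takes the absence of wrong cases to mean zero false positives; the function name is hypothetical.

```python
def recall_and_f1(true_positives, false_positives, labeled_total):
    """Compute recall and F1 from raw counts using the standard definitions."""
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / labeled_total
    f1 = 2 * precision * recall / (precision + recall)
    return recall, f1


# 176 of the 177 labeled (attacker, attack method) pairs recalled, none wrong.
recall, f1 = recall_and_f1(true_positives=176, false_positives=0, labeled_total=177)
print(f"recall = {recall:.6f}, F1 = {f1:.6f}")
```

With these counts, recall is 176/177 and F1 is 352/353, which agree with the reported 99.43% and 99.71% when the percentages are truncated to two decimal places.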

Find potential targets for attackers

For a specific attacker, the knowledge graph can be used to find additional or potential targets beyond the given scenario. Similar to the previous analysis pattern, the following logic was extracted:

Namely: if a victim v1 has certain human vulnerabilities hv that are exploited by attack method am1 crafted by attacker a1 in scenario S1, and a victim v2 is found to have the same vulnerabilities hv, exploited by attack method am2 in scenario S2, then victim v2 can also be attacked by attacker a1 through attack method am1 or am2.
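This logic can be sketched in Python as follows. The sketch simplifies the pattern to one check (does another victim have a vulnerability that one of the attacker's own methods exploits?) and uses hypothetical data structures and instance values rather than the paper's CQL.

```python
def potential_targets(attacker, performs, exploits, has_vul, known_victims):
    """Return {victim: shared vulnerabilities} for victims outside known_victims
    that have a vulnerability exploitable by one of the attacker's methods."""
    methods = {m for a, m in performs if a == attacker}
    exploitable = {hv for m, hv in exploits if m in methods}
    targets = {}
    for victim, vuls in has_vul.items():
        shared = vuls & exploitable
        if victim not in known_victims and shared:
            targets[victim] = shared
    return targets


# Hypothetical edges for illustration only.
performs = {("attacker10", "phishing")}
exploits = {("phishing", "credulity"), ("phishing", "greed")}
has_vul = {
    "victim10": {"credulity", "greed"},
    "victim2": {"credulity", "helpfulness"},
    "victim5": {"curiosity"},
}

targets = potential_targets(
    "attacker10", performs, exploits, has_vul, known_victims={"victim10"}
)
print(targets)
```

In this toy data victim2 is a potential target because it shares credulity with victim10, whereas victim5 has no vulnerability that phishing exploits.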

Figure 24 shows this application, with attacker10 as an example. It presents that attacker10 crafts and performs phishing to exploit victim10's vulnerabilities in scenario10; moreover, another 6 targets have the same vulnerabilities as victim10 and can also be exploited by attacker10 through phishing (or attack methods in other scenarios). In brief, 6 potential targets are found for attacker10. In practice, it is helpful to notify all the potential targets if attacker10 or phishing is a serious security threat. In a penetration test, Fig. 24 offers testers more attack targets and attack methods.

For specific attacker, find potential targets (victims) beyond the given scenario

For all 15 attackers, this analysis pattern finds 123 new exploitable targets beyond the 15 victims described in Table 5, and 156 new (attack method, target) pairs beyond the 21 pairs described in Table 5. This analysis pattern recalls 176 (attack method, target) pairs with no false positives. The recall rate is 99.43% and the F1 score is 99.71%. One pair was omitted because one attack method's to exploit edges (to hv) were divided and assigned to other attack methods in the same scenario.

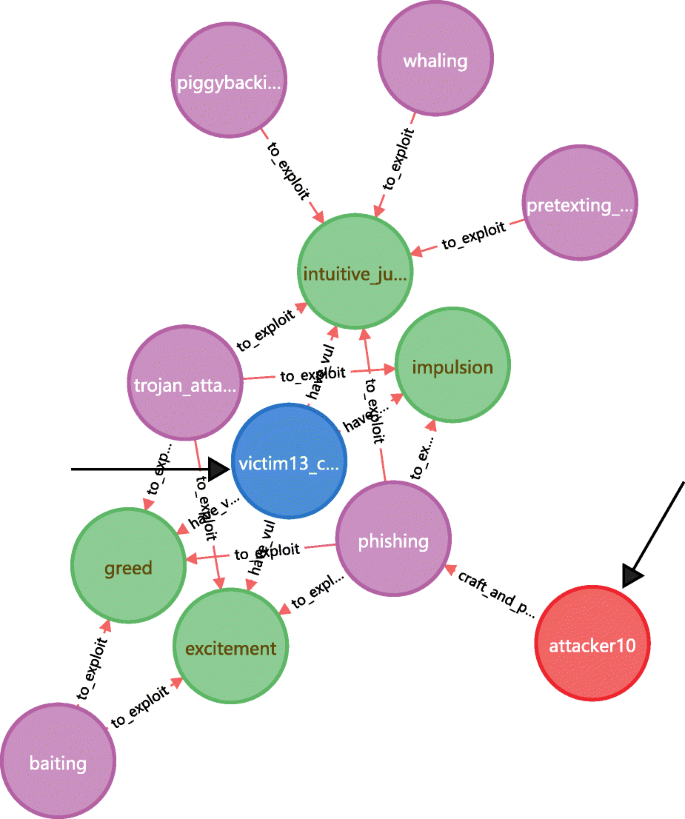

Find paths from specific attacker to specific target

For a specific attacker and a specific victim that are not in the same attack scenario, the knowledge graph can be used to check or find feasible attack paths and potential attack methods. This is a combination of the previous two analysis patterns, and the following pattern was extracted:

Namely, the attack path from attacker a1 to target v2 is feasible if attacker a1 can successfully exploit human vulnerability hv by attack method am1 and the target v2 is found to have the vulnerability hv.
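The path pattern can be sketched in Python by joining the three edge types on the shared attack method and vulnerability. The data structures and instance values below are hypothetical and serve only to illustrate the pattern; they do not reproduce Fig. 25.

```python
def attack_paths(attacker, target, performs, exploits, has_vul):
    """List (attacker, method, vulnerability, target) paths following
    attacker -> method -> vulnerability <- target."""
    target_vuls = has_vul.get(target, set())
    return sorted(
        (attacker, method, hv, target)
        for a, method in performs
        if a == attacker
        for m, hv in exploits
        if m == method and hv in target_vuls
    )


def other_exploiting_methods(attacker, target, performs, exploits, has_vul):
    """Methods that exploit the target's vulnerabilities but are not (yet)
    performed by the attacker."""
    own = {m for a, m in performs if a == attacker}
    target_vuls = has_vul.get(target, set())
    return sorted({m for m, hv in exploits if hv in target_vuls and m not in own})


# Hypothetical edges for illustration only.
performs = {("attacker10", "phishing"), ("attacker4", "pretexting")}
exploits = {
    ("phishing", "credulity"),
    ("phishing", "fear"),
    ("phishing", "greed"),
    ("pretexting", "helpfulness"),
}
has_vul = {"victim13": {"credulity", "fear", "helpfulness"}}

paths = attack_paths("attacker10", "victim13", performs, exploits, has_vul)
extra_methods = other_exploiting_methods("attacker10", "victim13", performs, exploits, has_vul)
print(paths)
print(extra_methods)
```

The second helper mirrors the idea of listing attack methods that are potentially available to the attacker although they lie outside the extracted paths.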

Figure 25 shows this application, with attacker10 and victim13 as examples. The following 4 attack paths are extracted from the knowledge base: (attacker10)-[craft and perform] → (phishing)-[to exploit] → (4 human vulnerabilities) ← [has]-(victim13). In addition, another 5 attack methods that exploit victim13's vulnerabilities but are not within the attack paths are also presented in Fig. 25. These methods are potentially available for attacker10 to reach victim13.

For specific attacker and victim, find potential attack paths and methods

For all 15 attackers and 15 targets, this analysis pattern finds 251 new attack paths beyond the 94 paths described in Table 5, and 123 new (attacker, target) pairs beyond the 15 pairs described in Table 5. For all 345 labeled attack paths, this analysis pattern recalls 344 attack paths with no false positives. The recall rate is 99.71% and the F1 score is 99.85%. One attack path was omitted because one attack method's to exploit edges (to hv) were divided and assigned to other attack methods in the same scenario.

Figure 26 summarizes the experiment results and statistical analysis of the “Find additional (potential) threats for victims (targets)”, “Find potential targets for attackers” and “Find paths from specific attacker to specific target” sections.

Experiment results and statistic analysis of “ Find additional (potential) threats for victims (targets) ”, “ Find potential targets for attackers ” and “ Find paths from specific attacker to specific target ” sections

Analyze the same origin attack

In general, attack methods am1 and am2 are similar or related if they have some common features; am1 and am2 might be launched by the same attacker if they share certain crucial features, e.g. they point to the same attacker-controlled domain address. Further, if such am1 and am2 are launched respectively by two different attackers (a1, a2) who have the same motivation m and who attack different victims (v1, v2) with the same affiliation, then am1 and am2 are likely to be same-origin, and attackers a1 and a2 are likely to belong to the same attack organization. Based on the above understanding or assumption, Fig. 27 shows a knowledge graph application example for analyzing same-origin attacks.

Analyze the same origin attack by knowledge graph

Besides returning graphs that already exist in the knowledge base, new relations and nodes can be created. A new relation "same affiliation" is created between victim10 and victim15, since both have the data property "affiliation" with an equal value. There is a potential relation "same origin attack" between the whaling and phishing nodes, because the whaling attack uses a Trojan horse or back door with the encoded domain address "att.eg.net", and this address is also found in the malicious link of the phishing attack. Furthermore, attacker15 and attacker10 have the same motivation "financial gain", and victim15 and victim10 are in the same "Company A". Given all these, it can be inferred that the two scenarios compose a same-origin, organized attack. Thus, we create a new relation "same origin attack" between the two attack method nodes and a relation "in the same organization" between the two attacker nodes.
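The inference described above can be sketched in Python as a pairwise comparison of scenarios. The record layout, the relation identifiers and the third scenario are assumptions made for illustration; the values for attacker10 / attacker15, "financial gain", "Company A" and "att.eg.net" are taken from the example in the text.

```python
from itertools import combinations

# One record per scenario; "indicators" holds technical features such as
# domain addresses found in the attack material.
scenarios = [
    {"attacker": "attacker10", "method": "phishing", "victim": "victim10",
     "motivation": "financial gain", "affiliation": "Company A",
     "indicators": {"att.eg.net"}},
    {"attacker": "attacker15", "method": "whaling", "victim": "victim15",
     "motivation": "financial gain", "affiliation": "Company A",
     "indicators": {"att.eg.net"}},
    # Hypothetical unrelated scenario.
    {"attacker": "attacker3", "method": "baiting", "victim": "victim3",
     "motivation": "espionage", "affiliation": "Company B",
     "indicators": set()},
]


def same_origin_relations(scenarios):
    """Create new relations for scenario pairs that share a technical
    indicator, the attacker motivation and the victim affiliation."""
    relations = set()
    for s1, s2 in combinations(scenarios, 2):
        if (s1["indicators"] & s2["indicators"]
                and s1["motivation"] == s2["motivation"]
                and s1["affiliation"] == s2["affiliation"]):
            relations.add((s1["victim"], "same_affiliation", s2["victim"]))
            relations.add((s1["method"], "same_origin_attack", s2["method"]))
            relations.add((s1["attacker"], "in_the_same_organization", s2["attacker"]))
    return relations


new_relations = same_origin_relations(scenarios)
print(sorted(new_relations))
```

Requiring all three conditions at once is a deliberately strict choice for this sketch; a real analysis might weigh the features differently.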

There are some studies related to social engineering ontology. Simmonds et al. (2004) proposed a conceptualization / ontology for network security attacks, which includes the components access, actor, attack, threat, motive, information, outcome, impact, intangible and system administrator. Although some components (e.g. actor, motive, information) are similar to concepts in this paper, the ontology (Simmonds et al. 2004) focuses on network security (and access control) and cannot be used to describe the social engineering domain. Oosterloo (2008) presented an ontological chart involving concepts such as attacker, threat, risk, stakeholder and asset. But this chart serves as a model to summarize and organize aspects related to social engineering risk management; its purpose is not a formal and explicit description of concepts and relations in the social engineering domain. Vedeshin (2016) discussed three phases (orchestration, exploitation, and compromise) of social engineering attacks, in which some classes (such as target, actor, goal, techniques, medium, execution steps and maintaining access) are discussed. However, this taxonomy is used to classify different social engineering attacks. Mouton et al. (2014) described an ontological model of social engineering attack consisting of six entities: social engineer, target, medium, goal, compliance principles and techniques. However, the concept definitions of these entities were not presented and the relations among these entities were not specified. That is, it does not constitute a domain ontology. Besides, the social engineering definition in Mouton et al. (2014) is proposed from the perspective of persuasion, which describes only a part of social engineering (Wang et al. 2020). As a result, the model also does not include some important entities (e.g. human vulnerability) and aspects (e.g. deception and trust). Tchakounté et al. (2020) discussed a certain spear phishing scenario / flow and its description logic (DL), yet other social engineering attack types were not involved. Li and Ni (2019) discussed the difficulty of distinguishing social engineering attacks (methods) collected from six studies. They identified some core concepts to characterize social engineering attacks by aligning these concepts with existing security concepts, and then provided a description logic for a security ontology and attack classification. In the security ontology, social engineer, social engineering attack, human and human vulnerability were respectively aligned as subclasses of attacker, attack, asset and vulnerability; another two concepts, attack media and social engineering techniques, were also included. However, the human is the target rather than the asset that social engineering attacks aim to harm, and according to their text and ontology implementation, social engineering attack and technique seem to refer to the same concept. These might be the reasons why the relations among the concepts in their work were not aligned. Besides, the domain ontology of social engineering is not the focus of the study (Li and Ni 2019), and the above six (or five) concepts are not sufficient to analyze relatively complex social engineering attack incidents / scenarios. Alshanfari et al. (2020) gathered some terms related to social engineering and attempted to organize them in Protégé using the method described in Noy and McGuinness (2001). However, the terms were extracted from only 30 publications from 2015 to 2018, and only three entity classes (attack type, threat and countermeasures) were presented, in which some terms are merely related to the class yet are not instances of it (e.g. guilt, websites in attack type; sensitive information, password in threat). Besides, relations among these classes were not described clearly. Thus, this work is mainly oriented to terms and classification. Nevertheless, we appreciate the above works and the other researchers who have made efforts in this field.

We develop a domain ontology of social engineering in cybersecurity and conduct ontology evaluation by knowledge graph application.

The domain ontology describes what entities significantly constitute or affect social engineering and how they relate to each other, provides a formal and explicit knowledge schema, and can be used to understand, analyze, reuse and share domain knowledge of social engineering.

The 7 analysis examples by knowledge graph not only show the ontology evaluation and application, but also present new means to analyze social engineering attack and threat.

In addition, the approach of 1) using Protégé to develop the ontology and create the instances and knowledge base, and 2) then employing Neo4j to import RDF/OWL data, optimize the knowledge base and construct the knowledge graph for better data analysis and visualization also provides a reference for related research.

In the ontology, some taxonomies (subclasses) or relations might be verbose or omitted. But as mentioned before, subclass names will be converted to node labels and inverse relations can facilitate knowledge retrieval; therefore, users can add or delete them based on specific application requirements.

The material of attack scenarios and the data of ontology + instances offer a dataset that can be used for future related research. The knowledge graph dataset (224 instance nodes, 344 resource nodes and 939 relations of 15 attack scenarios) seems small. Yet it covers 14 social engineering attack types, and the 6 kinds of analysis patterns have demonstrated the feasibility of the proposed ontology and knowledge graph in analyzing social engineering attacks and threats.

To the best of our knowledge, this is the first work which completes a domain ontology for social engineering in cybersecurity, and further provides its knowledge graph application for attack analysis.

Due to the complexity of the social engineering domain, the ontology can hardly be perfect on the first attempt. We offer this work as a starting point, in the spirit of "throwing out a brick to attract jade", and look forward to superior studies by researchers in this field.

This paper develops a domain ontology of social engineering in cybersecurity, in which 11 concepts of core entities that significantly constitute or affect the social engineering domain together with 22 kinds of relations among these concepts are defined. It provides a formal and explicit knowledge schema to understand, analyze, reuse and share domain knowledge of social engineering. Based on this domain ontology, this paper builds a knowledge graph using 15 social engineering attack incidents / typical scenarios. The 7 knowledge graph application examples (in 6 kinds of analysis patterns) demonstrate that the ontology together with the knowledge graph can be used to analyze social engineering attack scenarios or incidents, to find (the top ranked) threat elements (e.g. the most exploited human vulnerabilities, attack mediums), to find potential attackers, targets and attack paths, and to analyze the same origin attacks.

Availability of data and materials

The data and materials of this study are available from the corresponding author upon reasonable request.

The literature database was submitted as supplementary material for review.

Term lists organized by alphabetical order and semantic groups were submitted as supplementary material for review.

The implementation file was submitted as supplementary material (SEiCS-Ontology+instances.owl) for review.

The implementation file was submitted as supplementary material (SEiCS-Ontology+instances-inferred.owl) for review.

All the CQL scripts for these applications were submitted as supplementary material for review.

Alshanfari, I, Ismail R, N.Zaizi J. M, Wahid FA (2020) Ontology-based formal specifications for social engineering. Int J Technol Manag Inform Syst 2:35–46.

Chitrey, A, Singh D, Singh V (2012) A comprehensive study of social engineering based attacks in india to develop a conceptual model. Int J Inform Netw Secur 1:45.

Damle, P (2002) Social engineering: A tip of the iceberg. Inform Syst Control J 2:51–52.

Fang, B (2018a) The Definitions of Fundamental Concepts. In: Cyberspace Sovereignty: Reflections on building a community of common future in cyberspace, 1–52. Springer, Singapore. https://doi.org/10.1007/978-981-13-0320-3_1 .

Fang, B (2018b) Define cyberspace security. Chin J Netw Inform Secur 4:1–5.

Indrajit, RE (2017) Social Engineering Framework: Understanding the Deception Approach to Human Element of Security. Int J Comput Sci Issues (IJCSI) 14:8–16.

Ivaturi, K, Janczewski L (2011) A taxonomy for social engineering attacks In: International Conference on Information Resources Management, Centre for Information Technology, Organizations, and People, 1–12. https://aisel.aisnet.org/cgi/viewcontent.cgi?article=1015&context=confirm2011. Accessed 24 Sept 2017.

Li, T, Ni Y (2019) Paving Ontological Foundation for Social Engineering Analysis. In: Giorgini P, Weber B (eds) Advanced Information Systems Engineering, 246–260. Springer International Publishing, Cham.

Maan, PS, Sharma M (2012) Social engineering: A partial technical attack. Int J Comput Sci Issues 9:1694–0814. https://pdfs.semanticscholar.org/7e51/0456042c26cade06d74ea755c774713c46cf.pdf .

Mitnick, KD, Simon WL (2011) The Art of Deception: Controlling the Human Element of Security. Wiley, New York.

Mohd Foozy, CF, Ahmad R, Abdollah M, Robiah Y, Masud Z (2011) Generic Taxonomy of Social Engineering Attack. In: MUiCET, 1–7. UTHM, Batu Pahat.

Mouton, F, Leenen L, Malan MM, Venter HS (2014) Towards an Ontological Model Defining the Social Engineering Domain. In: ICT and Society, IFIP Advances in Information and Communication Technology, 266–279. Springer, Berlin. https://link.springer.com/chapter/10.1007/978-3-662-44208-1_22 .

Musen, MA, Protégé Team (2015) The Protégé Project: A Look Back and a Look Forward. AI Matters 1:4–12. https://pubmed.ncbi.nlm.nih.gov/27239556 . Accessed Aug 2020.

Neo4j community edition 3.5.19 (2020). https://neo4j.com/download-center/#community . Accessed Aug 2020.

Noy, NF, McGuinness DL (2001) Ontology Development 101: A Guide to Creating Your First Ontology, Technical Report, Knowledge Systems Laboratory. https://protege.stanford.edu/publications/ontology_development/ontology101.pdf . Accessed Aug 2020.

Oosterloo, B (2008) Managing social engineering risk: making social engineering transparant, Ph.D. thesis, University of Twente. http://essay.utwente.nl/59233/1/scriptie_B_Oosterloo.pdf . Accessed Oct 2017.

Redmon, KC (2005) Mitigation of Social Engineering Attacks in Corporate America. East Carolina University, Greenville.

Research, D (2011) The Risk of Social Engineering on Information Security: A Survey of IT Professionals, Technical Report, Dimensional Research. https://www.stamx.net/files/The-Risk-of-Social-Engineering-on-Information-Security.pdf . Accessed Aug 2020.

Simmonds, A, Sandilands P, van Ekert L (2004) An Ontology for Network Security Attacks. In: Manandhar S, Austin J, Desai U, Oyanagi Y, Talukder AK (eds) Applied Computing, 317–323. Springer Berlin Heidelberg, Berlin.

Tchakounté, F, Molengar D, Ngossaha JM (2020) A Description Logic Ontology for Email Phishing. Int J Inform Secur Sci 9:44–63.

Vedeshin, A (2016) Contributions of Understanding and Defending Against Social Engineering Attacks. Department of Computer Science, Tallinn University of Technology, Tallinn. https://digikogu.taltech.ee/testimine/et/Download/081abe95-55b2-4d56-b552-9ef9b5106ada/Kaitsetehnosotsiaalsesahkerdamisevastu.pdf . Accessed Nov 2020.

Wang, Z, Sun L, Zhu H (2020) Defining Social Engineering in Cybersecurity. IEEE Access 8:85094–85115. https://doi.org/10.1109/access.2020.2992807 .

Wang, Z, Zhu H, Sun L (2021) Social Engineering in Cybersecurity: Effect Mechanisms, Human Vulnerabilities and Attack Methods. IEEE Access 9:11895–11910. https://doi.org/10.1109/ACCESS.2021.3051633 .

This work was supported in part by the National Key Research and Development Program of China (2017YFB0802804) and in part by the Joint Fund of the National Natural Science Foundation of China (U1766215).

Author information

Authors and affiliations.

School of Cyber Security, University of Chinese Academy of Sciences, Beijing, 100049, China

Zuoguang Wang, Hongsong Zhu, Peipei Liu & Limin Sun

Beijing Key Laboratory of IoT Information Security Technology, Institute of Information Engineering, Chinese Academy of Sciences (CAS), Beijing, 100093, China

Contributions

Zuoguang Wang: investigation, conceptualization, methodology, materials, writing, editing, experiment, validation, review, resources, supervision. Hongsong Zhu: resources, supervision, discussion, funding acquisition. Peipei Liu: discussion, conceptualization. Limin Sun: resources, funding acquisition. All authors read and approved the final manuscript.

Corresponding authors

Correspondence to Zuoguang Wang or Hongsong Zhu .

Ethics declarations

Competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article.

Wang, Z., Zhu, H., Liu, P. et al. Social engineering in cybersecurity: a domain ontology and knowledge graph application examples. Cybersecur 4, 31 (2021). https://doi.org/10.1186/s42400-021-00094-6


Received: 24 February 2021

Accepted: 28 April 2021

Published: 02 August 2021

DOI: https://doi.org/10.1186/s42400-021-00094-6


- Social engineering attack

- Cyber security

- Knowledge graph

- Attack scenarios

- Threat analysis

- Attack path

- Attack model

- Composition and structure

- DOI: 10.1201/b11355-9

- Corpus ID: 265731431

Social Engineering

- Yavor Papazov

- Published 2016

- Computer Science, Political Science, Sociology



A study on the psychology of social engineering-based cyberattacks and existing countermeasures.

1. Introduction

2. Social engineering attacks

2.1. Phishing attack

2.2. Dumpster diving

2.3. Scareware

2.4. Water hole

2.5. Reverse social engineering

2.6. Deepfake

3. Influence methodologies

3.1. Social influence

3.2. Persuasion

3.3. Attitude and behavior

3.4. Trust and deception

3.5. Language and reasoning

3.6. Countering social engineering-based cyberattacks

3.7. Machine learning-based countermeasures

3.7.1. Deep learning

3.7.2. Reinforcement learning

3.7.3. Natural language processing

4. Discussion

5. Conclusions

Author contributions

Institutional review board statement

Informed consent statement

Conflicts of interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| SE | social engineering |
| BEC | business email compromise |
| RAT | remote access Trojan |
| ML | machine learning |
| DSD | distributed spam distraction |
| GANs | generative adversarial networks |
| ANNs | artificial neural networks |
| TPB | theory of planned behavior |
| IDT | interpersonal deception theory |
| SMS | short message service |
| NLP | natural language processing |
| DL | deep learning |
| DNN | deep neural network |
| LSTM | long short-term memory |
| RL | reinforcement learning |
| CRM | cyber-resilient mechanism |
